Introduction

When building a data warehouse (DWH), several keywords or derivatives of them appear in the requirements: secure, flexible, simple, scalable, reliable, performant, non-redundant, modern, automated, real-time, etc. In practice, these requirements can be challenging to address, especially as the complexity of the chosen architecture increases. Many technologies on the DWH market promise all of this at low cost, low effort and high ROI, yet DWH projects continue to fail to address the business and technical requirements.

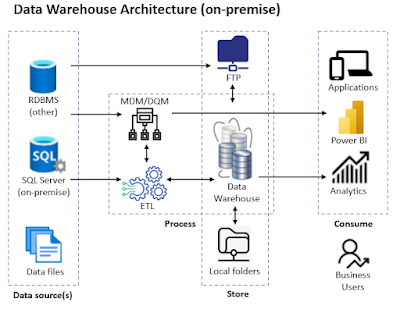

At a basic level, building a DWH requires a data storage layer and an ETL (Extract, Transform, Load) tool responsible for moving the data between the various source systems and the DWH, and eventually within the DWH itself. After that, each technology added to the landscape tends to increase the overall complexity and should be weighed critically for its advantages and disadvantages.

A Reference Architecture

When building a DWH or a data migration solution, which shares many of the characteristics of a DWH, I prefer to keep things as simple as possible among the many available designs. An approach based on a performant database engine like SQL Server as the storage layer and SSIS (SQL Server Integration Services) as the ETL tool has proved to be the best choice so far, as it allows most of the technical requirements to be addressed by design. Then come the choices of how and where to import and transform the data, at what level of granularity, how the semantic layer is built, how the data are accessed, etc.

Being able to pull the data from the data sources (see extract subprocess) on an as-needed basis offers the most flexible approach. However, there are cases in which direct access to the source data is not possible and one has to rely on a push approach, in which the data are dumped regularly to a given location (e.g. an FTP folder structure) to be picked up as needed. This is actually a hybrid between push and pull, because a pure push approach would mean pushing the data directly into the DWH. That can also be acceptable, though it might offer less control over the data's movement and involve a few other challenges (e.g. permissions, concurrency).
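The hybrid push/pull approach can be sketched as follows. This is only an illustration, assuming a local folder as a stand-in for the FTP drop location; the folder names, the file names and the helper `pick_up_files` are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def pick_up_files(drop_dir, archive_dir, pattern="*.csv"):
    """Read every file waiting in the drop folder, then move it to an
    archive folder so that the same file is not picked up twice."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    picked = []
    for path in sorted(drop_dir.glob(pattern)):
        picked.append((path.name, path.read_text(encoding="utf-8")))
        shutil.move(str(path), str(archive_dir / path.name))
    return picked


# Simulate a source system that pushed two daily dumps to the drop folder.
root = Path(tempfile.mkdtemp())
drop_dir = root / "drop"
drop_dir.mkdir()
(drop_dir / "customers_20230101.csv").write_text("CustomerId,Name\n1,Contoso\n", encoding="utf-8")
(drop_dir / "customers_20230102.csv").write_text("CustomerId,Name\n2,Fabrikam\n", encoding="utf-8")

first_run = pick_up_files(drop_dir, root / "archive")   # pulls both files
second_run = pick_up_files(drop_dir, root / "archive")  # nothing new to pull
```

Archiving the files instead of deleting them keeps the original dumps available for troubleshooting and reloads.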

Data can be prepared for the DWH in the source systems (e.g. exposed via data objects or API calls), anywhere in between via ETL-based transformations (see transform subprocess) or directly in the DWH. I prefer importing the data (see load subprocess) 1:1, without any transformations, from the various sources via SSIS (or similar technologies) into a set of tables that make up the staging area. It's true that in this way the ETL technology is used only minimally, though unless there's a major benefit in using it for data transformations, using the DWH's capabilities and SQL for data processing can provide better performance and flexibility.

Besides selecting the columns in scope (typically columns with meaningful values), it's important not to do any transformations in the extraction layer, because the data are imported faster (possibly using fast-load options as in SSIS) and the untransformed copy provides a basis for troubleshooting (as the data don't change between loads). Some filters can be applied, though only when the volume of data is high and the subset of the data can be identified clearly (e.g. when data are partitioned based on a key like business unit, legal entity or creation date).
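A minimal sketch of such a 1:1 load, assuming Python's built-in `sqlite3` module as a stand-in for the DWH database and an in-memory CSV as a stand-in for the source extract. The table name `stg_crm_Customers`, the sample rows and the helper `load_staging` are hypothetical, and all columns are typed as TEXT for brevity, whereas a real staging table would mirror the source data types.

```python
import csv
import io
import sqlite3

# Hypothetical extract from a source system, including dirty values
# (an empty name, an empty country and an invalid date).
SOURCE_CSV = """CustomerId,Name,Country,CreatedOn
1,Contoso,DE,2023-01-15
2,Fabrikam,,2023-02-30
3,,US,2023-03-01
"""


def load_staging(conn, table, csv_text):
    """Load the rows as they are: same column names as the source,
    no constraints, no indexes, no transformations."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    columns = ", ".join(f'"{name}" TEXT' for name in header)
    conn.execute(f'DROP TABLE IF EXISTS "{table}"')
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    rows = list(reader)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
loaded = load_staging(conn, "stg_crm_Customers", SOURCE_CSV)
```

The invalid date and the empty values land in staging unchanged, which is the point: the staging copy can be compared with the source when troubleshooting, and the cleaning happens later in SQL.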

For better traceability, the staging schemas can reflect the systems the data come from, and the tables and columns should have the same names and data types as in the source. No constraints are applied on such tables and no indexes are needed. Constraints and indexes can however be created on the production tables (aka base tables), which are copies of the tables from production.

Some DWH architects try replicating the constraints from the source systems and/or add more constraints on top to define the various business rules. Rigor is good in some scenarios, though it can involve considerable effort and might be challenging to maintain over time, especially when considering the impact of big data on DWH architectures. Instead of using constraints, building a set of SQL scripts that pinpoint the issues as reports allows more flexibility, at the risk of having inconsistencies running wild through the reports. The data should be cleaned in the source system and, when that's not possible, properly addressed in the DWH. Applying constraints makes the data unavailable for reporting until they are corrected, while being more permissive allows dirty data into the reports. Thus, either approach has advantages and disadvantages, though the latter seems to be more appropriate.
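The report-based alternative to constraints could look like the following sketch, again assuming `sqlite3` as a stand-in for the DWH. The tables, the sample rows, the checks and the helper `run_checks` are hypothetical; each check is just a query that returns the keys of the offending rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customers (CustomerId INTEGER, Name TEXT, Country TEXT);
CREATE TABLE Orders (OrderId INTEGER, CustomerId INTEGER, Amount REAL);
INSERT INTO Customers VALUES (1, 'Contoso', 'DE'), (2, NULL, 'US'), (2, 'Fabrikam', 'US');
INSERT INTO Orders VALUES (10, 1, 100.0), (11, 3, 50.0), (12, 2, -5.0);
""")

# Each rule that would otherwise be a constraint becomes a query
# returning the keys of the rows that violate it.
CHECKS = {
    "customers without name":
        "SELECT CustomerId FROM Customers WHERE Name IS NULL",
    "duplicate customer ids":
        "SELECT CustomerId FROM Customers GROUP BY CustomerId HAVING COUNT(*) > 1",
    "orders without customer":
        "SELECT o.OrderId FROM Orders o "
        "LEFT JOIN Customers c ON c.CustomerId = o.CustomerId "
        "WHERE c.CustomerId IS NULL",
    "orders with negative amount":
        "SELECT OrderId FROM Orders WHERE Amount < 0",
}


def run_checks(conn, checks):
    """Run every check and collect the offending keys per rule."""
    return {name: [row[0] for row in conn.execute(sql)] for name, sql in checks.items()}


report = run_checks(conn, CHECKS)
```

The dirty rows stay available for reporting, while the output of `run_checks` can be sent back to the data owners for correction in the source system.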

Indexes on the production schema should reflect the characteristics of the queries run on the data and shouldn't replicate the indexes from the source environments, even if some overlaps might exist. In practice, dropping the non-clustered indexes on the production tables before loading the data from staging and recreating them afterwards usually makes the load faster (see load optimization techniques).
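The drop, load and recreate sequence can be sketched as below. SQLite is used only as a stand-in, so the example shows the order of the steps rather than the performance gain; the table and index names and the helper `reload_orders` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE stg_Orders (OrderId INTEGER, CustomerId INTEGER, Amount REAL);
CREATE TABLE Orders (OrderId INTEGER, CustomerId INTEGER, Amount REAL);
CREATE INDEX IX_Orders_CustomerId ON Orders (CustomerId);
""")
conn.executemany(
    "INSERT INTO stg_Orders VALUES (?, ?, ?)",
    [(i, i % 100, i * 1.5) for i in range(1, 1001)],
)

# Index definitions are kept in one place so they can be recreated after the load.
INDEXES = {
    "IX_Orders_CustomerId": "CREATE INDEX IX_Orders_CustomerId ON Orders (CustomerId)",
}


def reload_orders(conn):
    """Drop the indexes, reload the production table from staging, recreate the indexes."""
    for name in INDEXES:
        conn.execute(f"DROP INDEX IF EXISTS {name}")
    conn.execute("DELETE FROM Orders")
    conn.execute("INSERT INTO Orders SELECT OrderId, CustomerId, Amount FROM stg_Orders")
    for ddl in INDEXES.values():
        conn.execute(ddl)
    conn.commit()


reload_orders(conn)
```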

The production tables are used for building a "semantic" data model or something similar. Several levels of views, table-valued functions and/or indexed/materialized views allow building the dimension and fact tables, the latter incorporating the business logic needed by the reports. Depending on the case, stored procedures, physical or temporary tables, or table variables can be used to prepare the data, though they tend to break the "free" flow of data, as in-between steps need to be run. On the other hand, in certain scenarios their use is unavoidable.

The first level of views (aka base views) is based on the base tables without any joins, though the views include only the fields in use (needed by the business), ordered and "grouped" together based on their importance or certain characteristics. The views can include conversions of data types, translations of codes into meaningful values, and quite seldom filters on the data. Based on these "base" views the second level is built, which attempts to define the dimension and fact tables at the lowest granularity. These views include joins between tables coming from the same or different systems, mappings of values defined in tables, and whatever else it takes to build such entities. However, transformations on individual fields are pushed, when possible, to the lower level to minimize logic redundancy. For similar reasons, the logic can be broken down over two or more "helper" views when visible benefits can be obtained from it (e.g. troubleshooting, reuse, maintenance). It's important to strike a balance between creating too many helper views and encapsulating too much logic in a single view.
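The two levels of views could be sketched as follows, with `sqlite3` standing in for the DWH. All table, view and column names are hypothetical, as are the status codes; the base views carry the field-level conversions, while the second level only joins base views.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- base tables: copies of the source tables (names are hypothetical)
CREATE TABLE Customers (CustomerId INTEGER, Name TEXT, CountryCode TEXT, LegacyFlag TEXT);
CREATE TABLE Countries (CountryCode TEXT, CountryName TEXT);
CREATE TABLE Orders (OrderId INTEGER, CustomerId INTEGER, Status TEXT);
CREATE TABLE OrderLines (OrderId INTEGER, LineNo INTEGER, Amount TEXT);
INSERT INTO Customers VALUES (1, 'Contoso', 'DE', 'x'), (2, 'Fabrikam', 'US', NULL);
INSERT INTO Countries VALUES ('DE', 'Germany'), ('US', 'United States');
INSERT INTO Orders VALUES (10, 1, 'O'), (11, 2, 'C');
INSERT INTO OrderLines VALUES (10, 1, '100.50'), (10, 2, '5.25'), (11, 1, '20');

-- level 1: base views, no joins, only the fields in use, field-level conversions
CREATE VIEW vCustomers AS
SELECT CustomerId, Name AS CustomerName, CountryCode FROM Customers;

CREATE VIEW vCountries AS
SELECT CountryCode, CountryName FROM Countries;

CREATE VIEW vOrders AS
SELECT OrderId, CustomerId,
       CASE Status WHEN 'O' THEN 'Open' WHEN 'C' THEN 'Closed' ELSE 'Unknown' END AS OrderStatus
FROM Orders;

CREATE VIEW vOrderLines AS
SELECT OrderId, LineNo, CAST(Amount AS REAL) AS Amount FROM OrderLines;

-- level 2: dimension and fact entities at the lowest granularity,
-- built only on base views and containing the joins and mappings
CREATE VIEW vDimCustomer AS
SELECT c.CustomerId, c.CustomerName, n.CountryName
FROM vCustomers c
LEFT JOIN vCountries n ON n.CountryCode = c.CountryCode;

CREATE VIEW vFactOrderLine AS
SELECT l.OrderId, l.LineNo, o.CustomerId, o.OrderStatus, l.Amount
FROM vOrderLines l
JOIN vOrders o ON o.OrderId = l.OrderId;
""")

dim_rows = conn.execute(
    "SELECT CustomerId, CustomerName, CountryName FROM vDimCustomer ORDER BY CustomerId"
).fetchall()
fact_rows = conn.execute(
    "SELECT OrderId, LineNo, CustomerId, OrderStatus, Amount FROM vFactOrderLine ORDER BY OrderId, LineNo"
).fetchall()
```

Because the code translation and the type conversion live in `vOrders` and `vOrderLines`, any further entity built on them reuses the same logic instead of repeating it.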

One of the design principles used in building the entities is to minimize the redundancy of the fields used, ideally without having columns duplicated between entities at this level. This facilitates the traceability of columns to the source tables within the "semantic" layer (typically at the cost of a few more joins). In practice, one is forced to replicate some columns to simplify some parts of the logic.

Further views can be built based on the dimension and fact entities to define the logic needed by the reports. Only these objects are used and no direct reference to the "base" tables or views is made. Moreover, to offer better performance, the views can be materialized or, when there's an important benefit, physically saved as tables (e.g. to have multiple indexes for different scenarios). This is the case for entities with considerable data volume that are called over and over.
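Saving a view physically as a table can be sketched as below, assuming `sqlite3` as a stand-in (SQLite has no materialized views, so a table created from the view plays that role). The object names and the helper `materialize` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Orders (OrderId INTEGER, CustomerId INTEGER, Amount REAL);
INSERT INTO Orders VALUES (10, 1, 100.5), (11, 2, 20.0), (12, 1, 5.25);

CREATE VIEW vRevenueByCustomer AS
SELECT CustomerId, SUM(Amount) AS Revenue, COUNT(*) AS OrderCount
FROM Orders
GROUP BY CustomerId;
""")


def materialize(conn, view, table, index_columns=()):
    """Save the current result of a view as a physical table and index it."""
    conn.execute(f"DROP TABLE IF EXISTS {table}")
    conn.execute(f"CREATE TABLE {table} AS SELECT * FROM {view}")
    for column in index_columns:
        conn.execute(f"CREATE INDEX IX_{table}_{column} ON {table} ({column})")
    conn.commit()


materialize(conn, "vRevenueByCustomer", "RevenueByCustomer", index_columns=("CustomerId",))

# A new order arrives after the snapshot was taken.
conn.execute("INSERT INTO Orders VALUES (13, 2, 30.0)")
query = "SELECT Revenue FROM {} WHERE CustomerId = 2"
stale = conn.execute(query.format("RevenueByCustomer")).fetchone()[0]  # snapshot value
live = conn.execute(query.format("vRevenueByCustomer")).fetchone()[0]  # current value

# The physical table reflects the change only after a refresh.
materialize(conn, "vRevenueByCustomer", "RevenueByCustomer", index_columns=("CustomerId",))
refreshed = conn.execute(query.format("RevenueByCustomer")).fetchone()[0]
```

The trade-off is visible in the three values: the table is cheaper to query but shows the state as of the last refresh, while the view always shows the current data.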

This approach of building the entities is usually flexible enough to address most of the reporting requirements, regardless of whether the technical solution has the characteristics of a DWH, data mart or data migration layer. Moreover, the overall architectural approach can be used on-premise as well as in cloud architectures, where Azure SQL Server and ADF (Azure Data Factory) provide similar capabilities. Compared with standard SQL Server, some features might not be available, while other features might bring further benefits, though the gaps should be negligible.

Data Management topics like Master Data Management (MDM), Data Quality Management (DQM) and/or Metadata Management can be addressed as well by using third-party tools or tools from the Microsoft stack. Master Data Services (MDS) and Data Quality Services (DQS) in combination with SSIS help address a wide range of scenarios, though these are optional.

Moving to the Cloud

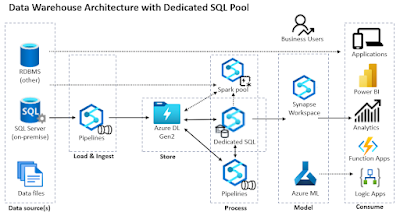

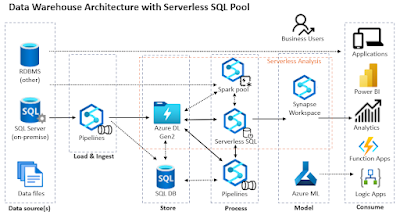

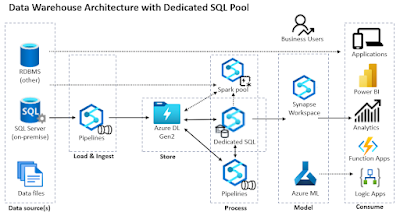

Within the context of big data, characterized by (high/variable) volume, value, variety, velocity, veracity, and further less important V's, the technical requirements mentioned above still apply. However, within a cloud environment the overall architecture becomes more complex. Each component becomes a service. There are thus various services for data ingestion, storage, processing, sharing, collaboration, etc. The way data are processed also undergoes several important changes: ETL becomes ELT, FTP and local storage are replaced by Data Lakes, data packages by data pipelines, stateful by stateless, SMP (Symmetric Multi-Processing) by MPP (Massively Parallel Processing), and so on.

As file storage is less expensive than database storage, there's an increasing trend of dumping business-critical data into the Data Lake via data pipelines or features like Link to Data Lake or Export to Data Lake (*), which synchronize the data between source systems and the Data Lake in near real-time at table or entity level. Whether saved as CSV, Parquet, Delta Lake or any other standard file format, in single files or partitions, the data can be used directly or indirectly for analytics.

Cloud-native warehouses allow addressing topics like scalability, elasticity, fault-tolerance and performance by design, though further challenges appear as compute needs to be decoupled from storage, the workloads need to be estimated for assuring the performance, data may be distributed across data centers spanning geographies, the infrastructure is exposed to attacks, etc.

Azure Synapse

If one wants to take advantage of the MPP architecture's power, Microsoft provides an analytical architecture based on Azure Synapse, an analytics service that brings together data integration, enterprise DWH, and big data analytics. Besides two types of SQL-based data processing services (dedicated vs serverless SQL pools) it comes also with a Spark pool for in-memory cluster computing.

A DWH based on Azure Synapse is not that different from the reference architecture described above for an on-premise solution. Actually, a DWH based on a dedicated SQL pool (aka a physical data warehouse) involves the same steps mentioned above.

The data can be imported via ETL/ELT pipelines into the DWH, though there are also mechanisms for consuming the data directly from the files stored in the Data Lake or Azure storage. CETAS (aka Create External Table as Select) can be defined on top of the data files, the external tables acting as "staging" or "base" tables in the architecture described above. When using a dedicated SQL pool it makes sense to use the CETAS as "staging" tables, the processed data being then dumped to "optimized" physical tables for consumption and refreshed periodically. However, when this happens the near real-time character of the data is lost. Using the CETAS as base tables would keep this characteristic as long as the data aren't saved physically in tables or files, possibly at the cost of performance.

Using a dedicated SQL pool for direct reporting can become expensive, as the pool needs to be available at least during business hours for incoming user requests, or at least for importing the data and refreshing the datasets. When using the CETAS as base tables, a serverless (aka on-demand) SQL pool, which uses a pay-per-use billing model, could prove to be more cost-effective and flexible in many scenarios. By design, it helps to keep the near real-time character of the data. Moreover, even if the data are actually moved from the source tables into the Data Lake, this architecture has the characteristics of a logical data warehouse.

Unfortunately, unless one uses Spark tables, misuses views or adds an Azure SQL database to the architecture, there are no physical tables or materialized views in a serverless SQL pool. There's still the option to use data pipelines for regularly exporting intermediary data to files (incl. over partitions or folders), even if this involves more overhead, as it's not possible to export data over SQL syntax to files more than once (though this might change in the future). For certain scenarios it could be useful to store data in an Azure SQL Server or similar database, including a dedicated SQL pool.

Choosing between a serverless and a dedicated SQL pool is not an exclusive choice. Both, or all three types of pools (if we also consider the Spark pool), can be used in the architecture for addressing specific challenges, especially given that there are important differences between the features available in each of the pools. Moreover, one can start the PoC based on the serverless SQL pool and, when the solution becomes mature enough and is used across the enterprise, parts of the logic or all of it can be migrated to a dedicated SQL pool. This allows saving costs at the beginning, at the price of further effort later.

Talking about the physical storage, data engineers recommend defining several layers (aka regions, zones) within a Data Lake, labeled as bronze, silver and gold (and probably platinum will join the club anytime soon). The bronze layer refers to the raw data available in the Data Lake, including the files on which the initial CETAS are defined. The silver layer refers to transformed, cleaned, enriched and integrated data, i.e. the data resulting from the second layer of views described above. The gold layer refers to the data to which business logic was applied and which are prepared for consumption, i.e. the data resulting from the final layer of views. Of course, data pipelines can be used to prepare the data at these stages, though a view-based approach offers more flexibility and is easier to troubleshoot, manage and reuse than data pipelines.

Ideally the gold data should involve no or minimal further transformation before reaching the users, though that's not realistic. Building a DWH takes considerable time and the business usually can't wait until everything is in place. Therefore, reports based on the DWH will continue to coexist with reports directly accessing the source data, which will lead to controversies. Enforcing a single source of truth will help to minimize the gap, though it will not eliminate it completely.

Closing Notes

These are just the outlines of a minimal reference architecture. There's more to consider, as there are several alternatives (see [1] [2] [3] [4]) for each of the steps considered here, with each technology, new feature or mechanism opening new opportunities. The advantages and disadvantages should always be weighed against the business needs and requirements. One approach, even if recommended, might not work for all, though unless there's an important requirement or an opportunity associated with an additional technology, deviating from reference architectures might not be such a good idea after all.

Note: (*) Existing customers have until 1-Nov-2024 to transition from Export to Data Lake to Synapse Link. Microsoft advises new customers to use Synapse Link.

Resources:

[1] Microsoft Learn (2022) Modern data warehouse for small and medium business (link)

[2] Microsoft Learn (2022) Data warehousing and analytics (link)

[3] Microsoft Learn (2022) Enterprise business intelligence (link)

[4] Microsoft Learn (2022) Serverless Modern Data Warehouse Sample using Azure Synapse Analytics and Power BI (link)

[5] Coursera (2023) Data Warehousing with Microsoft Azure Synapse Analytics (link) [course, free to audit]

[6] SQLBits (2020) Mahesh Balija's Building Modern Data Warehouse with Azure Synapse Analytics (link)

[7] Matt How (2020) The Modern Data Warehouse in Azure: Building with Speed and Agility on Microsoft’s Cloud Platform (Amazon)

[8] James Serra's blog (2022) Data lake architecture (link)

[9] SQL Stijn (2022) SQL Building a Modern Lakehouse Data Warehouse with Azure Synapse Analytics: Moving your Database to the lake (link)

[10] Solliance (2022) Azure Synapse Analytics Workshop 400 (link) [GitHub repository]