|

| Software Engineering Series |

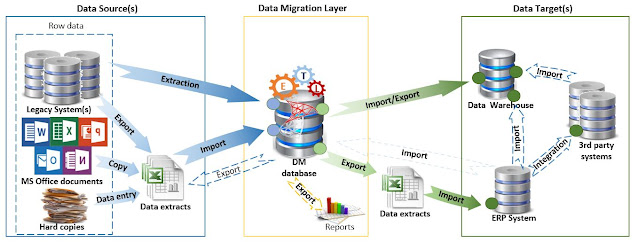

I worked for more than 20 years in various areas related to ERP systems - Data Migrations, Business Intelligence/Analytics, Data Warehousing, Data Management, Project Management, (data) integrations, Quality Assurance, and much more, having experience with IFS IV, Oracle e-Business Suite, MS Dynamics AX 2009 and during the past 3-7 years also with MS Dynamics 365 Finance, SCM & HR (in that order). Much earlier, I started to work with SQL Server (2000-2019), Oracle, and more recently with Azure Synapse and MS Fabric, writing over time more than 800 ad-hoc queries and reports for the various stakeholders, covering all the important areas, respectively many more queries for monitoring the various environments.

In the areas where I couldn’t acquire experience on the job, I tried to address this by learning in my free time. I did it because I take seriously my profession, and I want to know how (some) things work. I put thus a lot of time into trying to keep actual with what’s happening in the MS Fabric world, from Power BI to KQL, Python, dataflows, SQL databases and much more. These technologies are Microsoft’s bet, though at least from German’s market perspective, all bets are off! Probably, many companies are circumspect or need more time to react to the political and economic impulses, or probably some companies are already in bad shape.

Unfortunately, the political context has a broad impact on the economy, on what’s happening in the job market right now! However, the two aspects are not the only problem. Between candidates and jobs, the distance seems to grow, a dense wall of opinion being built, multiple layers based on presumptions filtering out voices that (still) matter! Does my experience matter or does it become obsolete like the technologies I used to work with? But I continued to learn, to keep actual… Or do I need to delete everything that reminds the old?

To succeed or at least be hired today one must fit a pattern that frankly doesn’t make sense! Yes, soft skills are important though not all of them are capable of compensating for the lack of technical skills! There seems to be a tendency to exaggerate some of the qualities associated with skills, or better said, of hiding behind big words. Sometimes it feels like a Shakespearian inaccurate adaptation of the stage on which we are merely players.

More likely, this lack of pragmatism will lead to suboptimal constructions that will tend to succumb under their own structure. All the inefficiencies need to be corrected, or somebody (or something) must be able to bear their weight. I saw this too often happening in ERP implementations! Big words don’t compensate for the lack of pragmatism, skills, knowledge, effort or management! For many organizations the answer to nowadays problems is more management, which occasionally might be the right approach, though this is not a universal solution for everything that crosses our path(s).

One of society’s answers to nowadays’ problem seems to be the refuge in AI. So, I wonder – where I’m going now? Jobless, without an acceptable perspective, with AI penetrating the markets and making probably many jobs obsolete. One must adapt, but adapt to what? AI is brainless even if it can mimic intelligence! Probably, it can do more in time to the degree that many more jobs will become obsolete (and I’m wondering what will happen to all those people).

Conversely, to some trends there will be probably other trends against them, however it’s challenging to depict in clear terms the future yet in making. Society seems to be at a crossroad, more important than mine.