Introduction

In the past, in the absence or in parallel with other techniques, aggregate functions proved to be quite useful in order to solve several types of problems that involve the retrieval of first/last record or the display of details together with averages and other aggregates. Typically their use involves two or more joins between a dataset and an aggregation based on the same dataset or a subset of it. An aggregation can involve one or more columns that make the object of analysis. Sometimes it might be needed multiple such aggregations based on different sets of columns. Each such aggregation involves at least a join. Such queries can become quite complex, though they were a price to pay in order to solve such problems.

Partitions

The introduction of analytic functions in Oracle and of window functions, a similar concept, in SQL Server, allowed the approach of such problems from a different simplified perspective. Central to this feature it’s the

partition (of a dataset), its meaning being same as of mathematical

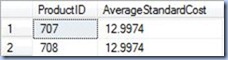

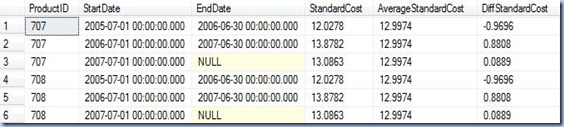

partition of a set, defined as a division of a set into non-overlapping and non-empty parts that cover the whole initial set. The introduction of partitions it’s not necessarily something new, as the columns used in a GROUP BY clause determines (implicitly) a partition in a dataset. The difference in analytic/window functions is that the partition is defined explicitly inline together with a ranking or average function evaluated within a partition. If the concept of partition is difficult to grasp, let’s look at the result-set based on two Products (the examples are based on AdventureWorks database):

-- Price Details for 2 Products

SELECT A.ProductID

, A.StartDate

, A.EndDate

, A.StandardCost

FROM [Production].[ProductCostHistory] A

WHERE A.ProductID IN (707, 708)

ORDER BY A.ProductID

, A.StartDate

In this case a partition is “created” based on the first Product (ProductId = 707), while a second partition is based on the second Product (ProductId = 708). As a parenthesis, another partitioning could be created based on ProductId and StartDate; considering that the two attributes are a key in the table, this will partition the dataset in partitions of 1 record (each partition will have exactly one record).

Details and Averages

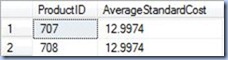

In order to exemplify the use of simple versus window aggregate functions, let’s consider a problem in which is needed to display Standard Price details together with the Average Standard Price for each ProductId. When a GROUP BY clause is applied in order to retrieve the Average Standard Cost, the query is written under the form:

-- Average Price for 2 Products

SELECT A.ProductID

, AVG(A.StandardCost) AverageStandardCost

FROM [Production].[ProductCostHistory] A

WHERE A.ProductID IN (707, 708)

GROUPBY A.ProductID

ORDERBY A.ProductID

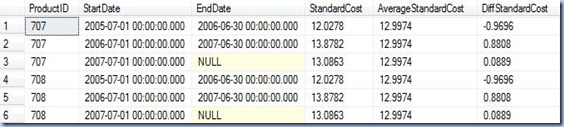

In order to retrieve the details, the query can be written with the help of a FULL JOIN as follows:

-- Price Details with Average Price for 2 Products - using JOINs

SELECT A.ProductID

, A.StartDate

, A.EndDate

, A.StandardCost

, B.AverageStandardCost

, A.StandardCost - B.AverageStandardCost DiffStandardCost

FROM [Production].[ProductCostHistory] A

JOIN ( -- average price

SELECT A.ProductID

, AVG(A.StandardCost) AverageStandardCost

FROM [Production].[ProductCostHistory] A

WHERE A.ProductID IN (707, 708)

GROUP BY A.ProductID

) B

ON A.ProductID = B.ProductID

WHERE A.ProductID IN (707, 708)

ORDERBY A.ProductID

, A.StartDate

As pointed above the partition is defined by ProductId. The same query written with window functions becomes:

-- Price Details with Average Price for 2 Products - using AVG window function

SELECT A.ProductID

, A.StartDate

, A.EndDate

, A.StandardCost

, AVG(A.StandardCost) OVER(PARTITION BY A.ProductID) AverageStandardCost

, A.StandardCost - AVG(A.StandardCost) OVER(PARTITION BY A.ProductID) DiffStandardCost

FROM [Production].[ProductCostHistory] A

WHERE A.ProductID IN (707, 708)

ORDER BY A.ProductID

, A.StartDate

As can be seen, in the second example, the AVG function is defined using the

OVER clause with PartitionId as partition. Even more, the function is used in a formula to calculate the Difference Standard Cost. More complex formulas can be written making use of multiple window functions.

The Last Record

Let’s consider the problem of retrieving the nth record. Because with aggregate functions is easier to retrieve the first or last record, let’s consider that is needed to retrieve the last Standard Price for each ProductId. The aggregate function helps to retrieve the greatest Start Date, which farther helps to retrieve the record containing the Last Standard Price.

-- Last Price Details for 2 Products - using JOINs

SELECT A.ProductID

, A.StartDate

, A.EndDate

, A.StandardCost

FROM [Production].[ProductCostHistory] A

JOIN ( -- average price

SELECT A.ProductID

, Max(A.StartDate) LastStartDate

FROM [Production].[ProductCostHistory] A

WHERE A.ProductID IN (707, 708)

GROUP BY A.ProductID

) B

ON A.ProductID = B.ProductID

AND A.StartDate = B.LastStartDate

WHERE A.ProductID IN (707, 708)

ORDERBY A.ProductID

,A.StartDate

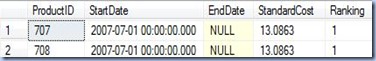

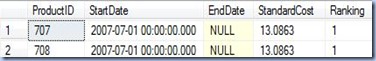

With window functions the query can be rewritten as follows:

-- Last Price Details for 2 Products - using AVG window function

SELECT *

FROM (-- ordered prices

SELECT A.ProductID

, A.StartDate

, A.EndDate

, A.StandardCost

, RANK() OVER(PARTITION BY A.ProductID ORDER BY A.StartDate DESC) Ranking

FROM [Production].[ProductCostHistory] A

WHERE A.ProductID IN (707, 708)

) A

WHERE Ranking = 1

ORDER BY A.ProductID

, A.StartDate

As can be seen, in order to retrieve the Last Standard Price, was considered the RANK function, the results being ordered descending by StartDate. Thus, the Last Standard Price will be always positioned on the first record. Because window functions can’t be used in WHERE clauses, it’s needed to encapsulate the initial logic in a subquery. Similarly could be retrieved the First Standard Price, this time ordering ascending the StartDate. The last query can be easily modified to retrieve the nth records (this can prove to be more difficult with simple average functions), the first/last nth records.

Conclusion

Without going too deep into details, I shown above two representative scenarios in which solutions based on average functions could be simplified by using window functions. In theory the window functions provide greater flexibility but they have their own trade offs too. In the next posts I will attempt to further detail their use, especially in the context of Statistics.