Introduction

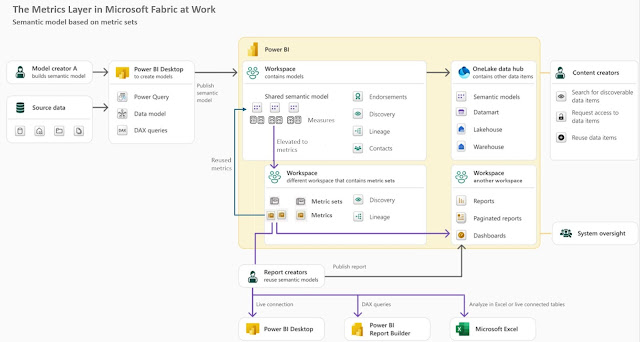

One of the announcements of this year's Microsoft Fabric Community first conference was the introduction of a metrics layer in Fabric which "allows organizations to create standardized business metrics, that are rooted in measures and are discoverable and intended for reuse" [1]. As it seems, the information content provided at the conference was kept to a minimum given that the feature is still in private preview, though several webcasts start to catch up on the topic (see [2], [4]). Moreover, as part of their show, the Explicit Measures (@PowerBITips) hosts had Carly Newsome as invitee, the manager of the project, who unveiled more details about the project and the feature, details which became the main source for the information below.

The idea of a metric layer or metric store is not new, data professionals occasionally refer to their structure(s) of metrics as such. The terms gained weight in their modern conception relatively recently in 2021-2022 (see [5], [6], [7], [8], [10]). Within the modern data stack, a metrics layer or metric store is an abstraction layer available between the data store(s) and end users. It allows to centrally define, store, and manage business metrics. Thus, it allows us to standardize and enforce a single source of truth (SSoT), respectively solve several issues existing in the data stacks. As Benn Stancil earlier remarked, the metrics layer is one of the missing pieces from the modern data stack (see [10]).

Microsoft's Solution

Microsoft's business case for metrics layer's implementation is based on three main ideas (1) duplicate measures contribute to poor data quality, (2) complex data models hinder self-service, (3) reduce data silos in Power BI. In Microsoft's conception the metric layer provides several benefits: consistent definitions and descriptions, easy management via management views, searchable and discoverable metrics, respectively assure trust through indicators.

For this feature's implementation Microsoft introduces a new Fabric Item called a metric set that allows to group several (business) metrics together as part of a mini-model that can be tailored to the needs of a subset of end-users and accessed by them via the standard tools already available. The metric set becomes thus a mini-model. Such mini-models allow to break down and reduce the overall complexity of semantic models, while being easy to evolve and consume. The challenge will become then on how to break down existing and future semantic models into nonoverlapping mini-models, creating in extremis a partition (see the Lego metaphor for data products). The idea of mini-models is not new, [12] advocating the idea of using a Master Model, a technique for creating derivative tabular models based on a single tabular solution.

A (business) metric is a way to elevate the measures from the various semantic models existing in the organization within the mini-model defined by the metric set. A metric can be reused in other fabric artifacts - currently in new reports on the Power BI service, respectively in notebooks by copying the code. Reusing metrics in other measures can mean that one can chain metrics and the changes made will be further propagated downstream.

|

| The Metrics Layer in Microsoft Fabric (adapted diagram) |

Every metric is tied to the original semantic model which allows thus to track how a metric is used across the solutions and, looking forward to Purview, to identify data's lineage. A measure is related to a "table", the source from which the measure came from.

Users' Perspective

The Metrics Layer feature is available in Microsoft Fabric service for Power BI within the Metrics menu element next to Scorecards. One starts by creating a metric set in an existing workspace, an operation which creates the actual artifact, to which the individual metrics are added. To create a metric, a user with build permissions can navigate through the semantic models across different workspaces he/she has access to, pick a measure from one of them and elevate it to a metric, copying in the process its measure's definition and description. In this way the metric will always point back to the measure from the semantic model, while the metrics thus created are considered as a related collection and can be shared around accordingly.

Resources:

[1] Microsoft Fabric Blog (2024) Announcements from the Microsoft Fabric Community Conference (link)

[2] Power BI Tips (2024) Explicit Measures Ep. 236: Metrics Hub, Hot New Feature with Carly Newsome (link)

[3] Power BI Tips (2024) Introducing Fabric Metrics Layer / Power Metrics Hub [with Carly Newsome] (link)

[4] KratosBI (2024) Fabric Fridays: Metrics Layer Conspiracy Theories #40 (link)

[5] Chris Webb's BI Blog (2022) Is Power BI A Semantic Layer? (link)

[6] The Data Stack Show (2022) TDSS 95: How the Metrics Layer Bridges the Gap Between Data & Business with Nick Handel of Transform (link)

[7] Sundeep Teki (2022) The Metric Layer & how it fits into the Modern Data Stack (link)

[8] Nick Handel (2021) A brief history of the metrics store (link)

[9] Aurimas (2022) The Jungle of Metrics Layers and its Invisible Elephant (link)

[10] Benn Stancil (2021) The missing piece of the modern data stack (link)

[11] Microsoft Learn (2024) Sempy fabric Package (link)

[12] Michael Kovalsky (2019) Master Model: Creating Derivative Tabular Models (link)

[13] Christina Obry (2023) The Power of a Metrics Layer - and How Your Organization Can Benefit From It (link)

[14] KratosBI (2024) Introducing the Metrics Layer in #MicrosoftFabric with Carly Newsome [link]