Disclaimer: This is work in progress intended to consolidate information from various sources and may deviate from them. Please consult the sources for the exact content!

Last updated: 1-Apr-2024

Delta Lake

- {def} an optimized open source storage layer that runs on top of a data lake [1]

- the default storage format in a Fabric lakehouse [1]

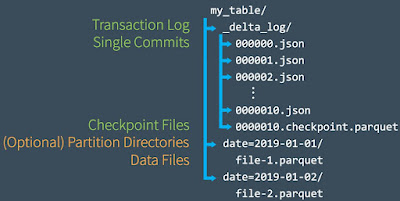

- stores data in Parquet file format

- is a variant of log-structured files

- initially developed at Databricks

- fully compatible with Apache Spark APIs [1]

- {characteristic} high reliability

- {characteristic} secure

- {characteristic} performant

- provides low latency

- {feature} data indexing

- indexes are created and maintained on the ingested data [1]

- increases the querying speed significantly [1]

- {feature} data skipping

- file statistics are maintained so that only the data subsets relevant to the query are used instead of entire tables - this partition pruning avoids processing data that is not relevant to the query [1]

- helps complex queries read only the relevant subsets needed to fulfil the query [1]
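
The idea behind data skipping can be sketched with a toy model, assuming each data file carries min/max statistics for one column. The `FileStats` class, the file names, and the value ranges are hypothetical, and this is not Delta Lake's actual implementation:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file statistics for a single column."""
    path: str
    min_value: int
    max_value: int


def files_to_scan(stats, lower, upper):
    """Return the files whose [min, max] range overlaps the filter [lower, upper]."""
    return [s.path for s in stats if s.max_value >= lower and s.min_value <= upper]


stats = [
    FileStats("part-0.parquet", 1, 100),
    FileStats("part-1.parquet", 101, 200),
    FileStats("part-2.parquet", 201, 300),
]

# A filter such as "value BETWEEN 150 AND 220" never needs to open part-0.
print(files_to_scan(stats, 150, 220))
```

Only the statistics are consulted to decide which files to open, which is why the approach pays off most when values are well clustered across files.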

- {feature} multidimensional clustering

- uses the Z-ordering algorithm

- this enables data skipping
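
As an illustration of Z-ordering in general, a Z-order (Morton) key interleaves the bits of several column values, so that rows close in all dimensions tend to end up close in the sort order. The sketch below is the textbook two-dimensional form; the function name and the 16-bit width are assumptions, and how Delta Lake computes its ordering internally may differ:

```python
def z_value(x: int, y: int, bits: int = 16) -> int:
    """Interleave the low `bits` bits of x and y into one Morton (Z-order) key."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)        # bits of x go to even positions
        key |= ((y >> i) & 1) << (2 * i + 1)    # bits of y go to odd positions
    return key


points = [(3, 3), (0, 1), (2, 0), (1, 1), (0, 0), (1, 0)]

# Sorting by the interleaved key keeps points that are near each other
# in both dimensions near each other in the resulting file layout.
ordered = sorted(points, key=lambda p: z_value(*p))
print(ordered)
```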

- {feature} compaction

- compacts or combines multiple small files into more efficient larger ones [1]

- speeds up query performance

- storing and accessing small files can be processing-intensive, slow and inefficient from a storage utilization perspective [1]
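
A minimal sketch of how compaction might group small files, assuming a simple greedy strategy and a hypothetical 128 MB target size. Neither the strategy nor the target is taken from the sources:

```python
def plan_compaction(file_sizes_mb, target_mb=128):
    """Greedily group files (smallest first) into bins of at most target_mb."""
    bins, current, current_size = [], [], 0
    for size in sorted(file_sizes_mb):
        # Close the current bin when the next file would overflow it.
        if current and current_size + size > target_mb:
            bins.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        bins.append(current)
    return bins


# Six input files are rewritten as three larger ones.
plan = plan_compaction([10, 20, 30, 60, 64, 100], target_mb=128)
print(plan)
```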

- {feature} data caching

- frequently accessed data is automatically cached to speed up access for queries

- {feature} ACID transactions

- "all or nothing" ACID transaction approach is employed to prevent data corruption

- ⇐ partial or failed writes risk corrupting the data [1]

- {feature} snapshot isolation (aka SI)

- ensures that multiple writers can write to a dataset simultaneously without interfering with jobs reading the dataset [1]

- {feature} schema enforcement

- data can be stored using a schema

- {benefit} helps ensure data integrity for ingested data by providing schema enforcement [1]

- potential data corruption with incorrect or invalid schemas is avoided [1]
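
Schema enforcement combined with the "all or nothing" write approach can be illustrated with a toy in-memory table. The rules used here (reject unknown columns and wrong types, tolerate missing columns) are simplifying assumptions, and all names are hypothetical:

```python
def validate_batch(rows, schema):
    """Raise ValueError on unknown columns or values of the wrong type."""
    for i, row in enumerate(rows):
        extra = set(row) - set(schema)
        if extra:
            raise ValueError(f"row {i}: unexpected columns {sorted(extra)}")
        for col, value in row.items():
            if not isinstance(value, schema[col]):
                raise ValueError(f"row {i}: column {col!r} expects {schema[col].__name__}")


def append_rows(table, rows, schema):
    """Append rows only if the whole batch passes validation."""
    validate_batch(rows, schema)  # nothing is written if this raises
    table.extend(rows)


schema = {"id": int, "name": str}
table = []
append_rows(table, [{"id": 1, "name": "a"}], schema)

try:
    # The second row has a string id, so the whole batch is rejected.
    append_rows(table, [{"id": 2, "name": "b"}, {"id": "3", "name": "c"}], schema)
except ValueError as err:
    print("rejected:", err)

print(len(table))
```

Validating the complete batch before writing anything is what keeps a partially valid batch from leaving the table in a half-written state.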

- {feature} transaction log checkpoints (aka checkpointing)

- optimize checkpoint querying

- the table versions are aggregated to Parquet checkpoint files [7]

- [Azure Databricks] optimizes checkpointing frequency for data size and workload [7]

- employed to provide robust exactly-once delivery semantics [1]

- {benefit} ensures that data is neither missed nor repeated erroneously [1]

- prevents the need to read all JSON versions of table history [7]

- checkpoint frequency is subject to change [7]

- manages log file removal automatically after checkpointing table versions [7]
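
Why checkpoints avoid reading the full table history can be sketched with a toy log, assuming each commit is a list of add/remove file actions and a checkpoint is a snapshot of the live files at a given version. The data structures and function names are hypothetical and much simpler than the real JSON and Parquet log files:

```python
def replay(commits, state=None):
    """Apply add/remove actions in order to a set of live data files."""
    live = set(state or ())
    for actions in commits:
        for op, path in actions:
            if op == "add":
                live.add(path)
            else:
                live.discard(path)
    return live


def load_table_state(log, checkpoints, version):
    """Rebuild the live files at `version` from the newest usable checkpoint.

    Returns the state and the number of commits that had to be read.
    """
    usable = [v for v in checkpoints if v <= version]
    start = max(usable) if usable else -1
    base = checkpoints[start] if usable else set()
    commits_read = log[start + 1 : version + 1]
    return replay(commits_read, base), len(commits_read)


# The list index is the table version.
log = [
    [("add", "a")],
    [("add", "b")],
    [("remove", "a"), ("add", "c")],
    [("add", "d")],
]
checkpoints = {2: replay(log[:3])}  # snapshot of version 2

print(load_table_state(log, checkpoints, 3))  # reads one commit
print(load_table_state(log, {}, 3))           # reads all four commits
```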

- {feature} UPSERTS and DELETES support

- provide a more convenient way of dealing with such changes [1]
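
The semantics of an upsert combined with deletes can be sketched on a keyed in-memory table. This is a toy model with hypothetical names, in which a `None` value marks a delete; it is not how Delta Lake applies such changes to files:

```python
def merge_into(target, changes):
    """Apply keyed changes: a dict value upserts the row, None deletes it."""
    merged = dict(target)  # leave the original table untouched
    for key, row in changes.items():
        if row is None:
            merged.pop(key, None)   # delete if present
        else:
            merged[key] = row       # update existing key or insert new one
    return merged


target = {1: {"name": "a"}, 2: {"name": "b"}}
changes = {2: {"name": "B"}, 3: {"name": "c"}, 1: None}

result = merge_into(target, changes)
print(result)
```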

- {feature} unified streaming and batch data processing

- both batch and streaming data are handled via a direct integration with Structured Streaming for low latency updates [1]

- {benefit} simplifies the system architecture [1]

- {benefit} results in shorter time from data ingest to query result [1]

- can concurrently write batch and streaming data to the same data table [1]

- {feature} schema evolution

- schema is inferred from input data

- {benefit} reduces the effort for dealing with schema impact of changing business needs at multiple levels of the pipeline/data stack [1]

- {feature} scalable metadata handling

- {feature} predictive optimization

- removes the need to manually manage maintenance operations for delta tables [8]

- {enabled} automatically identifies tables that would benefit from maintenance operations, and then optimizes their storage

- {feature} historical retention

- {default} maintains a history of all changes made [4]

- {benefit} enhanced regulatory compliance and audit

- {recommendation} keep historical data only for their intended lifecycle [AN]

- allows reducing storage costs [4]

- {feature} time travel

- supports querying previous table versions based on timestamp or table version [7]

- as recorded in the transaction log [7]

- {benefit} support for data rollback

- {benefit} lets users query point-in-time snapshots [5]

- {scenario} recreating analyses, reports, or outputs for debugging or auditing [7]

- {scenario} writing complex temporal queries [7]

- {scenario} fixing data mistakes [7]

- {scenario} providing snapshot isolation for a set of queries for fast changing tables [7]

- table versions accessible with time travel are determined by a combination of

- the retention threshold for transaction log files [7]

- the frequency and specified retention for VACUUM operations [7]

- if VACUUM is run daily with the default values, 7 days of data is available for time travel [7]
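
Timestamp-based time travel can be sketched as a lookup of the latest version committed at or before the requested time, assuming a sorted list of commit timestamps taken from the transaction log. The function name and the sample dates are hypothetical, and real behaviour for out-of-range timestamps or vacuumed versions may differ:

```python
from bisect import bisect_right
from datetime import datetime


def version_as_of(commit_times, ts):
    """Return the latest version committed at or before ts, or None if there is none.

    commit_times must be sorted; the list index is the table version.
    """
    idx = bisect_right(commit_times, ts)
    return idx - 1 if idx else None


commit_times = [
    datetime(2024, 3, 1),  # version 0
    datetime(2024, 3, 2),  # version 1
    datetime(2024, 3, 5),  # version 2
]

# A query "as of 3 March" resolves to version 1, the last commit before that date.
print(version_as_of(commit_times, datetime(2024, 3, 3)))
```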

- {best practice} all writes and reads should go through Delta Lake [1]

- {benefit} ensure consistent overall behavior [1]

- {operation} restore

- restores a table to its earlier state

- via RESTORE command

- one can restore an already restored table [7]

- one can restore a cloned table [7]

- {permissions} MODIFY permission needed on the table being restored [7]

- {exception} one can’t restore a table to an older version where the data files were deleted manually or by vacuum [7]

- considered a data-changing operation

- Delta Lake log entries added by the RESTORE command contain dataChange set to true [7]

- {operation} recreate table

- when deleting and recreating a table in the same location, always use a CREATE OR REPLACE TABLE statement

- {best practice} run OPTIMIZE regularly

- {exception} should not be run on base or staging tables [1]

- {best practice} run VACUUM regularly

- cleans up expired snapshots that are no longer required [1]

- {best practice} use MERGE INTO to batch changes

- {benefit} allows efficiently rewriting the relevant objects to implement updates to archived data and compliance workflows [5]

- {best practice} use DELETE commands

- {benefit} ensures proper progression of the change [1]

- {warning} manually deleting files from the underlying storage is likely to break the table [1]

References:

[1] Azure Databricks (2023) Delta Lake on Azure Databricks

[2] Josep Aguilar-Saborit et al, POLARIS: The Distributed SQL Engine in Azure Synapse, PVLDB, 13(12), 2020 (link)

[3] Josep Aguilar-Saborit et al (2024) Extending Polaris to Support Transactions

[4] Implement medallion lakehouse architecture in Microsoft Fabric (link)

[5] Michael Armbrust et al (2020) Delta Lake: High-Performance ACID Table Storage over Cloud Object Stores, Proceedings of the VLDB Endowment 13(12) (link)

[6] Bennie Haelen & Dan Davis (2024) Delta Lake: Up and Running Modern Data Lakehouse Architectures with Delta Lake

[7] Microsoft Learn (2024) Best practices: Delta Lake (link)