Graphical Representation Series

Introduction

Creating simple charts or more complex data visualizations may appear trivial to many, but authors shouldn't forget that readers have different backgrounds and degrees of literacy, and that many of them may not be able to make sense of graphical displays, at least not without some help.

Beginners start with limited experience and build upon it. On the road to mastery, they get acquainted with the many possibilities, develop a deeper sense for them, and the choices narrow down to a few. Independently of one's experience, the various choices seldom have 'yes' or 'no' answers; everything is a matter of degree that varies with one's experience, the available time, the audience's expectations, and many other aspects that might be considered in time.

The following questions are intended to expand, and respectively narrow down, our choices when dealing with data visualizations from a data professional's perspective. They are based mainly on [1], though they were extended to include a broader perspective.

General Questions

Where does the data come from? Is the source reliable and representative (for the whole population in scope)? Is the data source certified? Is the data current?

Are there better (usable) sources? What's the effort to consider them? Does the data overlap? To what degree? Are there any benefits in merging the data? How much does this change the overall picture? Are the changes (in trends) explainable?
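One rough way to put a number on "Does the data overlap? To what degree?" is to compare the business keys of the two sources. The sketch below is only an illustration: the function name `overlap_report` and the sample keys are hypothetical, and it assumes both sources share a comparable key (e.g. a customer or order number).

```python
def overlap_report(keys_a, keys_b):
    """Compare the key sets of two sources (hypothetical helper)."""
    a, b = set(keys_a), set(keys_b)
    shared = a & b
    union = a | b
    return {
        "only_a": len(a - b),   # keys found only in the first source
        "only_b": len(b - a),   # keys found only in the second source
        "shared": len(shared),  # keys present in both sources
        # Jaccard index: share of all distinct keys that both sources contain
        "jaccard": len(shared) / len(union) if union else 0.0,
    }

# Made-up keys, for illustration only
report = overlap_report([1, 2, 3, 4], [3, 4, 5])
print(report)
```

A low share of common keys suggests the sources complement each other, while a high one suggests that merging them adds little beyond cross-validation.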

How was the data collected? From where, and using what method? [1] What methodology/approach was used?

What's the dataset about? Can one recognize the data, the (data) entities, respectively the structures behind? How big is the fact table (in terms of rows and columns)? How many dimensions are in scope?

What transformations, calculations or modifications have been applied? What was left out and what's the overall impact?

Were any significant assumptions made? [1] Were the assumptions clearly stated? Are they justified? Is there more to them?

Were any transformations applied? Do the transformations change any data characteristics? Were they adequately documented/explained? Do they make sense? Was something important left out? What's the overall impact?

What criteria were used to include/exclude data from the display? [1] Are the criteria adequately explained/documented? Do they make sense?

Are similar data publicly available? Are they (freely) accessible/usable? To what degree? How much do the datasets overlap? Is there any benefit in analyzing/using the respective data? Are the characteristics comparable? To what degree?

Dataviz Questions

What's the title/subtitle of the chart? Is it meaningful for the readers? Does the title reflect the data, respectively the findings adequately? Can it be better formulated? Is it an eye-catcher? Does it meet the expectations?

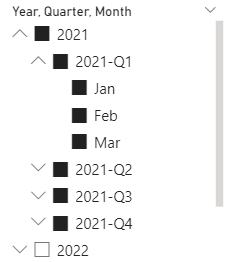

What data is shown? Of what type? At what level is the data aggregated?

What chart (type) is being used? [1] Are the readers familiar with the chart type? Does it need further introduction/clarification? Are there better means to represent the data? Does the chart offer the appropriate perspective? Does it make sense to offer different (complementary) perspective(s)? To what degree do other perspectives help?

What items of data do the marks represent? What value associations do the attributes represent? [1] Are the marks visible? Are the marks adequately presented (e.g. due to missing data)?

What range of values is displayed? [1] What approximation do the values support? To what degree can the values be rounded without losing meaning?
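To get a feeling for how much meaning is lost by rounding, one can round the displayed values to a given number of significant digits and look at the largest relative error introduced. This is a minimal sketch with hypothetical helper names (`round_sig`, `max_relative_error`) and made-up values, not a rule for how labels should be formatted.

```python
import math

def round_sig(value, digits):
    """Round a number to the given count of significant digits."""
    if value == 0:
        return 0.0
    magnitude = math.floor(math.log10(abs(value)))
    return round(value, digits - 1 - magnitude)

def max_relative_error(values, digits):
    """Largest relative deviation caused by rounding (zeros are skipped)."""
    return max(
        (abs(round_sig(v, digits) - v) / abs(v) for v in values if v != 0),
        default=0.0,
    )

# Made-up values, for illustration only
values = [12345.678, 0.012345]
print([round_sig(v, 2) for v in values])
print(max_relative_error(values, 2))
```

If the largest relative error is small compared with the differences the chart is meant to show, the rounded labels are probably safe to use.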

Is the data categorical, ordinal or continuous?

Are the axes properly chosen/displayed/labeled? Is the scale properly chosen (linear, semilogarithmic, logarithmic), respectively displayed? Do they emphasize, diminish, distort, simplify, or clutter the information?
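When deciding between a linear and a logarithmic scale, it can help to check how many orders of magnitude the positive values span. The sketch below is only a heuristic under stated assumptions: the function name `orders_of_magnitude` is hypothetical, non-positive values are ignored because they cannot be placed on a log axis, and what counts as a "wide" span is a judgment call that depends on the data and the audience.

```python
import math

def orders_of_magnitude(values):
    """Orders of magnitude spanned by the positive values (0.0 if none)."""
    positives = [v for v in values if v > 0]
    if not positives:
        return 0.0
    return math.log10(max(positives) / min(positives))

# Made-up values, for illustration only
span = orders_of_magnitude([3, 40, 500, 60000])
print(round(span, 2))
```

A span of several orders of magnitude means that the small values will be barely visible on a linear axis, which is an argument for considering a logarithmic scale or for splitting the chart.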

What features (shapes, patterns, differences or connections) are observable, interesting or vital for understanding the chart? [1]

Where are the largest, mid-sized and smallest values? (aka ‘stepped magnitude’ judgements). [1]

Where do the most/least values lie? Where is the average or normal? (aka ‘global comparison’ judgements) [1] How are the values distributed? Are there any outliers present? Are they explainable?
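For the question of whether outliers are present, a common first check is Tukey's rule, which flags values lying more than 1.5 interquartile ranges outside the quartiles. The sketch below uses Python's standard `statistics.quantiles`; the function name `iqr_outliers`, the multiplier default and the sample data are assumptions made for illustration, and other outlier definitions may suit a given dataset better.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return the values outside Tukey's fences (needs at least two values)."""
    q1, _median, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1  # interquartile range
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]

# Made-up sample, for illustration only
sample = [12, 14, 15, 15, 16, 17, 18, 19, 20, 95]
outliers = iqr_outliers(sample)
print(outliers)  # prints [95]
```

Whether a flagged value is an error, a rare but valid case, or the most interesting point in the chart is still something only the context can answer.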

What features are expected or unexpected? [1] To what degree are they unexpected?

What features are important given the subject? [1]

What shapes and patterns strike readers as being semantically aligned with the subject? [1]

What is the overall feeling when looking at the final result? Is the chart overcrowded? Can anything be left out/included?

What colors were used? [1] Are the colors adequately chosen, respectively meaningful? Do they follow the general recommendations?

What colors, patterns, forms do readers see first? What impressions come next, respectively last longer?

Are the various elements adequately/intuitively positioned/distinguishable? What's the degree of overlapping/proximity? Do the elements respect an intuitive hierarchy? Do they match readers' expectations, respectively the best practices in scope? Are the deviations justified?

Is the space properly used? To what degree? Are there major gaps?

Know Your Audience

What audience does the visualization target? What are its characteristics (level of experience with data visualizations; authors, experts or casual attendees)? Are there any accidental attendees? How likely is the audience to pay attention?

What is the audience's relationship with the subject matter? What knowledge do they have or, conversely, lack about the subject? What assistance might they need to interpret the meaning of the subject? Do they have the capacity to comprehend what it means to them? [1]

Why does the audience want/need to understand the topic? Are they familiar with it, and actively interested or more passive? Are they able to grasp the intended meaning? [1] To what degree? What kind of challenges might be involved, and of what nature?

What is their motivation? Do they have a direct, expressed need or are they more passive and indifferent? Is a way needed to persuade them or even seduce them to engage? [1] Can this be done without distorting the data and its meaning(s)?

What is their visualization literacy skill set? Do they require assistance perceiving the chart(s)? Are they sufficiently comfortable with operating features of interactivity? Do they have any visual accessibility issues (e.g. red–green color blindness)? Do these need to be (re)factored into the design? [1]

Reflections

What has been learnt? Has it reinforced or challenged existing knowledge? [1] Was new knowledge gained? How valuable is this knowledge? Can it be reused? In which contexts?

Do the findings meet one's expectations? To what degree? Were the expectations justified? On what basis? What's missing? How relevant are the gaps?

What feelings have been stirred? Has the experience had an impact emotionally? [1] To what degree? Is the impact positive/negative? Is the reaction justified/explainable? Are there any factors that distorted the reactions? Are they explainable? Do they make sense?

What does one do with this understanding? Is it just knowledge acquired or something to inspire action (e.g. making a decision or motivating a change in behavior)? [1] How relevant/valuable is the information for us? Can it be used/misused? To what degree?

Are the data and its representation trustworthy? [1] To what degree?

References:

[1] Andy Kirk, "Data Visualisation: A Handbook for Data Driven Design", 2nd Ed., 2019