Disclaimer: This is work in progress intended to consolidate information from various sources for learning purposes. For the latest information, please consult the documentation (see the links below)!

Last updated: 10-Mar-2024

[Microsoft Fabric] Lakehouse

- {def} data architecture platform for storing, managing, and analyzing structured and unstructured data in a single location [3]

- a unified platform that combines the capabilities of

- data lake

- built on top of the OneLake scalable storage layer using Delta format tables [1]

- supports ACID transactions through Delta Lake formatted tables for data consistency and integrity [1]

- ⇒ scalable analytics solution that maintains data consistency [1]

- {capability} scalable, distributed file storage

- can scale automatically and provide high availability and disaster recovery [1]

- {capability} flexible schema-on-read semantics

- ⇒ the schema can be changed as needed [1]

- ⇐ rather than having a predefined schema

- {capability} big data technology compatibility

- store all data formats

- can be used with various analytics tools and programming languages

- uses Spark and SQL engines to process large-scale data and to support machine learning or predictive modeling analytics [1]
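Schema-on-read can be illustrated without Spark: the raw records stay exactly as they were written, and each reader applies its own schema at read time. The sketch below is a minimal, standard-library-only model that assumes CSV text as the raw data; the `read_with_schema` helper and the sample rows are hypothetical. In a Fabric notebook the same idea would typically be expressed by passing a schema to the Spark reader (`spark.read.schema(...)`).

```python
import csv
import io

# Raw data stored as-is in the lake; no schema is enforced when it is written.
RAW_CSV = """id,amount,country
1,19.99,DE
2,5.00,US
3,12.50,DE
"""


def read_with_schema(raw_text, schema):
    """Parse raw CSV text, applying the caller's schema at read time.

    `schema` maps column name -> conversion function; columns missing from
    the schema are ignored, so different readers can project different views.
    """
    reader = csv.DictReader(io.StringIO(raw_text))
    return [
        {column: convert(row[column]) for column, convert in schema.items()}
        for row in reader
    ]


# Two readers interpret the same stored bytes with different schemas.
finance_view = read_with_schema(RAW_CSV, {"id": int, "amount": float})
geo_view = read_with_schema(RAW_CSV, {"id": int, "country": str})

print(finance_view[0])  # {'id': 1, 'amount': 19.99}
print(geo_view[2])      # {'id': 3, 'country': 'DE'}
```

Changing the schema only changes what a reader passes in; the stored data itself is untouched.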

- data warehouse

- {capability} relational schema modeling

- {capability} SQL-based querying

- {feature} has a built-in SQL analytics endpoint

- ⇐ the data can be queried by using SQL without any special setup [2]

- {capability} proven basis for analysis and reporting

- ⇐ unlocks data warehouse capabilities without the need to move data [2]

- ⇐ a database built on top of a data lake

- ⇐ includes metadata

- ⇐ a data architecture platform for storing, managing, and analyzing structured and unstructured data in a single location [2]

- ⇒ single location for data engineers, data scientists, and data analysts to access and use data [1]

- ⇒ it can easily scale to large data volumes of all file types and sizes

- ⇒ it's easily shared and reused across the organization
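The ACID support of Delta Lake tables mentioned above comes from a file-based transaction log: each commit is written as a new, sequentially numbered JSON file in the table's `_delta_log` folder, and readers reconstruct the current table version by replaying those commits in order. The sketch below is a heavily simplified, in-memory model of that replay, not the Delta Lake implementation: it only handles `add` and `remove` actions, ignores checkpoints as well as protocol, metadata, and commit-info actions, and the `current_files` helper and file names are hypothetical.

```python
import json

# Simplified commit files as they might appear in a table's _delta_log folder.
# Each file holds newline-delimited JSON actions; real logs also carry
# protocol, metaData, and commitInfo actions, which this sketch skips.
DELTA_LOG = {
    "00000000000000000000.json": "\n".join([
        json.dumps({"add": {"path": "part-000.parquet"}}),
        json.dumps({"add": {"path": "part-001.parquet"}}),
    ]),
    "00000000000000000001.json": "\n".join([
        # An update rewrites part-001 into part-002 within a single commit.
        json.dumps({"remove": {"path": "part-001.parquet"}}),
        json.dumps({"add": {"path": "part-002.parquet"}}),
    ]),
}


def current_files(log, version=None):
    """Replay commits in order (up to `version`) and return the live data files."""
    live = set()
    for name in sorted(log):  # zero-padded names sort in commit order
        commit_version = int(name.split(".")[0])
        if version is not None and commit_version > version:
            break
        for line in log[name].splitlines():
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live


print(sorted(current_files(DELTA_LOG)))             # ['part-000.parquet', 'part-002.parquet']
print(sorted(current_files(DELTA_LOG, version=0)))  # ['part-000.parquet', 'part-001.parquet']
```

Because a commit only becomes visible once its log file exists, a reader sees either all of commit 1 (the remove plus the add) or none of it, never a half-applied update. Replaying up to an earlier version is also the idea behind reading older table versions.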

- supports data governance policies [1]

- e.g. data classification and access control

- can be created in any premium tier workspace [1]

- appears as a single item within the workspace in which it was created [1]

- ⇒ access is controlled at this level as well [1]

- directly within Fabric

- via the SQL analytics endpoint

- permissions

- granted either at the workspace or item level [1]
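Since permissions can come from either level, a user's effective access to a lakehouse is the union of both grants. The sketch below models only that rule: the classes and the `can_access` helper are hypothetical, the role names are Fabric's workspace roles, and the finer-grained item permissions Fabric actually offers (and what each role may do beyond reading) are deliberately left out.

```python
from dataclasses import dataclass, field

# Fabric workspace roles; in this simplified model any of them grants access
# to the items of the workspace.
WORKSPACE_ROLES = {"Admin", "Member", "Contributor", "Viewer"}


@dataclass
class Workspace:
    name: str
    roles: dict = field(default_factory=dict)  # user -> workspace role


@dataclass
class Lakehouse:
    name: str
    workspace: Workspace
    shared_with: set = field(default_factory=set)  # users with item-level access


def can_access(user, lakehouse):
    """Access is granted through a workspace role OR an item-level grant."""
    if lakehouse.workspace.roles.get(user) in WORKSPACE_ROLES:
        return True
    return user in lakehouse.shared_with


sales_ws = Workspace("Sales", roles={"alice": "Contributor"})
lakehouse = Lakehouse("SalesLakehouse", sales_ws, shared_with={"bob"})

print(can_access("alice", lakehouse))  # True  (workspace role)
print(can_access("bob", lakehouse))    # True  (item-level share)
print(can_access("carol", lakehouse))  # False (neither)
```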

- users can work with data via

- lakehouse UI

- add and interact with tables, files, and folders [1]

- SQL analytics endpoint

- enables users to query the lakehouse tables with SQL and to manage the lakehouse's relational data model [1]

- two physical storage locations are provisioned automatically

- tables

- a managed area for hosting tables of all formats in Spark

- e.g. CSV, Parquet, or Delta

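- all tables, whether created automatically or explicitly, are recognized as tables in the lakehouse [2]

- Delta tables (Parquet data files with a file-based transaction log) are recognized as tables as well [2]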

- files

- an unmanaged area for storing data in any file format [2]

- any Delta files stored in this area aren't automatically recognized as tables [2]

- creating a table over a Delta Lake folder in the unmanaged area requires explicitly creating either a shortcut or, in Spark, an external table whose location points to the unmanaged folder that contains the Delta Lake files [2]

- ⇐ the main distinction between the managed area (tables) and the unmanaged area (files) is the automatic table discovery and registration process [2]

- {concept} registration process

- runs over any folder created in the managed area only [2]

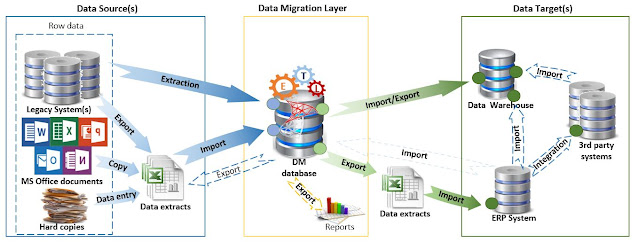
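The difference between the two areas can be modeled as a folder scan. The sketch below is a simplified, hypothetical model, not the Fabric implementation: automatic discovery walks only the managed `Tables` folder and registers each subfolder containing a `_delta_log` directory (assuming that only Delta folders are picked up automatically), while a Delta folder under `Files` is registered only through an explicit call standing in for creating a shortcut or external table. `discover_tables` and `register_external` are hypothetical helpers.

```python
import tempfile
from pathlib import Path


def discover_tables(lakehouse_root):
    """Scan ONLY the managed Tables/ area and register every top-level folder
    that contains a _delta_log directory (i.e. looks like a Delta table)."""
    tables_dir = Path(lakehouse_root) / "Tables"
    if not tables_dir.is_dir():
        return {}
    return {
        folder.name: folder
        for folder in sorted(tables_dir.iterdir())
        if folder.is_dir() and (folder / "_delta_log").is_dir()
    }


def register_external(catalog, name, folder):
    """Hypothetical stand-in for explicitly creating a shortcut or external
    table over a Delta folder in the unmanaged Files/ area."""
    folder = Path(folder)
    if not (folder / "_delta_log").is_dir():
        raise ValueError(f"{folder} does not contain a _delta_log folder")
    catalog[name] = folder
    return catalog


with tempfile.TemporaryDirectory() as root:
    base = Path(root)
    # One Delta table in each area.
    (base / "Tables" / "sales" / "_delta_log").mkdir(parents=True)
    (base / "Files" / "raw" / "events" / "_delta_log").mkdir(parents=True)
    (base / "Tables" / "notes").mkdir()  # no _delta_log, so not registered here

    catalog = discover_tables(base)
    auto_registered = sorted(catalog)
    print(auto_registered)  # ['sales']: Files/ is never scanned

    register_external(catalog, "events", base / "Files" / "raw" / "events")
    print(sorted(catalog))  # ['events', 'sales']
```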

- {operation} ingest data into lakehouse

- {medium} manual upload

- upload local files or folders to the lakehouse

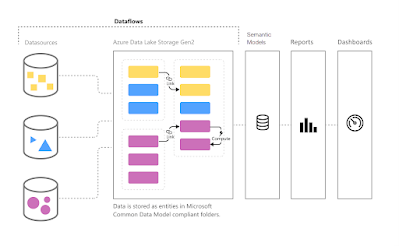

- {medium} dataflows (Gen2)

- import and transform data from a range of sources using Power Query Online, and load it directly into a table in the lakehouse [1]

- {medium} notebooks

- ingest and transform data, and load it into tables or files in the lakehouse [1]

- {medium} Data Factory pipelines

- copy data and orchestrate data processing activities, loading the results into tables or files in the lakehouse [1]

- {operation} explore and transform data

- {medium} notebooks

- use code to read, transform, and write data directly to the lakehouse as tables and/or files [1]

- {medium} Spark job definitions

- on-demand or scheduled scripts that use the Spark engine to process data in the lakehouse [1]

- {medium} SQL analytics endpoint

- run T-SQL statements to query, filter, aggregate, and otherwise explore data in lakehouse tables [1]

- {medium} dataflows (Gen2)

- create a dataflow to perform subsequent transformations through Power Query, and optionally land transformed data back to the lakehouse [1]

- {medium} data pipelines

- orchestrate complex data transformation logic that operates on data in the lakehouse through a sequence of activities [1]

- e.g. dataflows, Spark jobs, and other control flow logic

- {operation} analyze and visualize data

- use the lakehouse's default semantic model, which defines a relational model over the lakehouse tables, as the source for Power BI reports

- {concept} shortcuts

- embedded references within OneLake that point to other files or storage locations

- enable integrating data into the lakehouse while keeping it stored in external storage [1]

- ⇐ allow existing cloud data to be sourced quickly without having to copy it

- e.g. different storage account or different cloud provider [1]

- the user must have permissions in the target location to read the data [1]

- data can be accessed via Spark, SQL, Real-Time Analytics, and Analysis Services

- appear as a folder in the lake

- {limitation} have limited data source connectors

- {alternatives} ingest data directly into your lakehouse [1]

- enable Fabric experiences to derive data from the same source, so that they always stay in sync
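The behavior of shortcuts listed above (a reference instead of a copy, permission checked on the target, readers always in sync with the source) can be sketched as a path lookup. The model below is hypothetical throughout: the `Lakehouse` class, the dictionaries standing in for external storage and its access list, and the made-up `external://` URI scheme are illustrations only, not OneLake APIs.

```python
# Hypothetical external storage (e.g. another storage account or cloud provider).
# The URI scheme "external://" is a made-up placeholder.
EXTERNAL_STORE = {
    "external://finance/exports/rates.csv": b"ccy,rate\nEUR,1.00\nUSD,1.09\n",
}
# Hypothetical access list of the external location, checked at read time.
TARGET_READERS = {"external://finance/exports/rates.csv": {"alice"}}


class Lakehouse:
    """Toy lakehouse namespace holding local data and shortcuts."""

    def __init__(self):
        self.local = {}      # data physically stored in the lakehouse
        self.shortcuts = {}  # lakehouse path -> external target (a reference, not a copy)

    def create_shortcut(self, path, target):
        self.shortcuts[path] = target

    def read(self, user, path):
        if path in self.local:
            return self.local[path]
        target = self.shortcuts[path]  # raises KeyError for unknown paths
        # The user needs read permission on the target location itself.
        if user not in TARGET_READERS.get(target, set()):
            raise PermissionError(f"{user} cannot read {target}")
        return EXTERNAL_STORE[target]  # read in place; nothing was copied


lh = Lakehouse()
lh.create_shortcut("Files/rates.csv", "external://finance/exports/rates.csv")
first_read = lh.read("alice", "Files/rates.csv")

# The source changes; the next read through the shortcut sees it immediately.
EXTERNAL_STORE["external://finance/exports/rates.csv"] += b"GBP,0.86\n"
second_read = lh.read("alice", "Files/rates.csv")

try:
    lh.read("bob", "Files/rates.csv")
except PermissionError as err:
    denied = str(err)

print(second_read.decode().splitlines()[-1])  # GBP,0.86
print(denied)  # bob cannot read external://finance/exports/rates.csv
```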

- {concept} Lakehouse Explorer

- enables users to browse files, folders, shortcuts, and tables, and to view their contents within the Fabric platform [1]