Disclaimer: This is work in progress intended to consolidate information from various sources.

Last updated: 10-Mar-2024

|

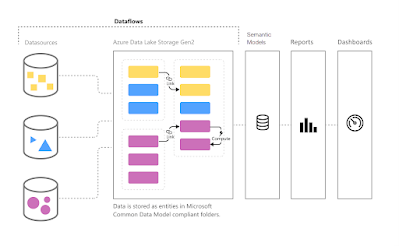

| Dataflows Architecture [3] |

[Microsoft Fabric] Dataflow (Gen1)

- a type of cloud-based ETL tool for building and executing scalable data transformation processes [1]

- a collection of tables created and managed in workspaces in the Power BI service [4]

- acts as building blocks on top of one another [4]

- includes all of the transformations to reduce data prep time and then can be loaded into a new table, included in a Data Pipeline, or used as a data source by data analysts [1]

- {benefit} promote reusability of underlying data elements

- prevent the need to create separate connections with your cloud or on-premises data sources.

- supports a wide range of cloud and on-premises sources [3]

- {operation} refreshing a dataflow

- is required before it can be consumed in a semantic model in Power BI Desktop, or referenced as a linked or computed table [4]

- can be refreshed at the same frequency as a semantic model [4]

- {concept} incremental refresh

- [Premium capacity] can be set to refresh incrementally

- adds parameters to the dataflow to specify the date range

- {contraindication} linked tables shouldn't use incremental refresh if they reference a dataflow

- ⇐ dataflows don't support query folding (even if the table is DirectQuery enabled).

- {contraindication} semantic models referencing dataflows shouldn't use incremental refresh

- ⇐ refreshes to dataflows are generally performant, so incremental refreshes shouldn't be necessary

- if refreshes take too long, consider using the compute engine, or DirectQuery mode

- {operation} deleting a workflow

- if a workspace that contains dataflows is deleted, all its dataflows are also deleted [4]

- even if recovery of the workspace is possible, one cannot recover the deleted dataflows

- ⇐ either directly or through support from Microsoft [4]

- {operation} consuming a dataflow

- create a linked table from the dataflow

- allows another dataflow author to use the data [4]

- create a semantic model from the dataflow

- allows a user to utilize the data to create reports [4]

- create a connection from external tools that can read from the CDM format [4]

- {feature} [premium] Enhanced compute engine.

- enables premium subscribers to use their capacity to optimize the use of dataflows

- {advantage} reduces the refresh time required for long-running ETL steps over computed entities, such as performing joins, distinct, filters, and group by [7]

- {advantage} performs DirectQuery queries over entities [7]

- individually set for each dataflow

- {configuration} disabled

- {configuration|default} optimized

- automatically turned on when a table in the dataflow is referenced by another table or when the dataflow is connected to another dataflow in the same workspace.

- {configuration} On

- {limitation} works only for A3 or larger Power BI capacities [7]

- {feature} [premium] DirectQuery

- allows to use DirectQuery to connect directly to dataflows without having to import its data [7]

- {advantage} avoid separate refresh schedules

- removes the need to create an imported semantic model [7]

- {advantage} filtering data

- allows to filter dataflow data and work with the filtered subset [7]

- {limitation} composite/mixed models that have import and DirectQuery data sources are currently not supported [7]

- {limitation} large dataflows might have trouble with timeout issues when viewing visualizations [7]

- {workaround} use Import mode [7]

- {limitation} under data source settings, the dataflow connector will show invalid credentials [7]

- the warning doesn't affect the behavior, and the semantic model will work properly [7]

- {feature} [premium] Computed entities

- allows to perform calculations on your existing dataflows, and return results [7]

- enable to focus on report creation and analytics [7]

- {limitation} work properly only when the entities reside in the same storage account [7]

- {feature} [premium] Linked Entities

- allows to reference existing dataflows

- one can perform calculations on these entities using computed entities [7]

- allows to create a "single source of the truth" table that can be reused within multiple dataflows [7]

- {limitation} work properly only when the entities reside in the same storage account [7]

- {feature} [premium] Incremental refresh

- adds parameters to the dataflow to specify the date range [7]

- {concept} table

- represents the data output of a query created in a dataflow, after the dataflow has been refreshed

- represents data from a source and, optionally, the transformations that were applied to it

- {concept} computed tables

- similar to other tables

- one get data from a source and one can apply further transformations to create them

- their data originates from the storage dataflow used, and not the original data source [6]

- ⇐ they were previously created by a dataflow and then reused [6]

- created by referencing a table in the same dataflow or in a different dataflow [6]

- {concept} [Power Query] custom function

- a mapping from a set of input values to a single output value [5]

- {scenario} create reusable transformation logic that can be shared by many semantic models and reports inside Power BI [3]

- {scenario} persist data in ADL Gen 2 storage, enabling you to expose it to other Azure services outside Power BI [3]

- {scenario} create a single source of truth

- encourages uptake by removing analysts' access to underlying data sources [3]

- {scenario} strengthen security around underlying data sources by exposing data to report creators in dataflows

- allows to limit access to underlying data sources, reducing the load on source systems [3]

- gives administrators finer control over data refresh operations [3]

- {scenario} perform ETL at scale,

- dataflows with Power BI Premium scales more efficiently and gives you more flexibility [3]

- {best practice} chose the best connector for the task provides the best experience and performance [5]

- {best practice} filter data in the early stages of the query

- some connectors can take advantage of filters through query folding [5]

- {best practice} do expensive operations last

- help minimize the amount of time spend waiting for the preview to render each time a new step is added to the query [5]

- {best practice} temporarily work against a subset of your data

- if adding new steps to the query is slow, consider using "Keep First Rows" operation and limiting the number of rows you're working against [5]

- {best practice} use the correct data types

- some features are contextual to the data type [5]

- {best practice} explore the data

- {best practice} document queries by renaming or adding a description to steps, queries, or groups [5]

- {best practice} take a modular approach

- split queries that contains a large number of steps into multiple queries

- {goal} simplify and decouple transformation phases into smaller pieces to make them easier to understand [5]

- {best practice} future-proof queries

- make queries resilient to changes and able to refresh even when some components of data source change [5]

- {best practice] creating queries that are dynamic and flexible via parameters [5]

- parameters serves as a way to easily store and manage a value that can be reused in many different ways [5]

- {best practice} create reusable functions

- can be created from existing queries and parameters [5]

Acronyms:

CDM - Common Data Model

ETL - Extract, Transform, Load

CDM - Common Data Model

ETL - Extract, Transform, Load

References:

[1] Microsoft Learn: Fabric (2023) Ingest data with Microsoft Fabric (link)

[1] Microsoft Learn: Fabric (2023) Ingest data with Microsoft Fabric (link)

[2] Microsoft Learn: Fabric (2023) Dataflow Gen2 pricing for Data Factory in Microsoft Fabric (link)

[3] Microsoft Learn: Fabric (2023) Introduction to dataflows and self-service data prep (link)

[4] Microsoft Learn: Fabric (2023) Configure and consume a dataflow (link)

[5] Microsoft Learn: Fabric (2023) Dataflows best practices* (link)

[6] Microsoft Learn: Fabric (2023) Computed table scenarios and use cases (link)

[7] Microsoft Lean: Power BI - Learn (2024) Premium features of dataflows (link)

Resources:

No comments:

Post a Comment