There seem to be four main sources for learning about the functionality available in Delta Lake, especially concerning the SQL dialect used by Spark SQL: Databricks [1], Delta Lake [2], Azure Databricks [3], and the Data Engineering documentation in Microsoft Fabric [4] together with the related certification material. Unfortunately, the latter focuses more on PySpark. So, until Microsoft addresses the gap, one can consult the other sources, check what works, and thus build the knowledge required for handling the various tasks.

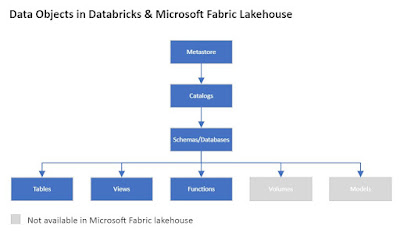

First of all, it's important to understand which data objects are available in Microsoft Fabric. Based on [5] I could identify the following hierarchy: metastore, catalog, database (schema), and within the database the tables, views and functions.

According to the same source [5], the metastore contains all of the metadata that defines the data objects in the lakehouse, while the catalog is the highest abstraction in the lakehouse. The database, also called a schema, keeps its standard definition: a collection of data objects, such as tables or views (aka relations) and functions. The tables, views and functions keep their standard definitions. Except for the metastore, these are also the (securable) objects on which permissions can be set.
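To see where a session currently sits in this hierarchy, a minimal sketch along the following lines can help. It assumes a database named Testing exists (the name used in the examples of this post) and that the runtime's Spark version supports `current_catalog()`, so results may differ between environments:

```sql
-- catalog and database the current session points to
SELECT current_catalog() AS catalog_name
, current_database() AS database_name;

-- switch to another database (Testing is assumed to exist)
USE Testing;

-- metadata of the database (name, location, properties)
DESCRIBE DATABASE EXTENDED Testing;
```

After the USE statement, unqualified object names in subsequent commands are resolved against the Testing database.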

One can explore the structure in Spark SQL by using the SHOW command:

    -- explore the data objects from the lakehouse
    SHOW CATALOGS;
    SHOW DATABASES;
    SHOW SCHEMAS;
    SHOW VIEWS;
    SHOW TABLES;
    SHOW FUNCTIONS;

    -- all tables from a database
    SHOW TABLES FROM Testing;

    -- all tables from a database matching a pattern
    SHOW TABLES FROM Testing LIKE 'Asset*';

    -- all tables from a database matching multiple patterns
    SHOW TABLES FROM Testing LIKE 'Asset*|cit*';

Notes:

1) The same syntax applies to views and functions, and to the other objects with respect to their parents in the hierarchy.

2) Instead of FROM one can use the IN keyword. The Spark SQL syntax accepts either keyword in these commands, and the two return the same results.

3) For databases and tables one can also use SQL Server to export the related metadata. Unfortunately, this isn't the case for views and functions: when the respective objects are created in Spark SQL, they aren't visible over the SQL Endpoint, and vice versa.

4) Given that multiple environments deal with delta tables, it makes sense to use database as the term instead of schema, to minimize the confusion that might result from using both.
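The first two notes can be illustrated with a short sketch. The Testing database comes from the examples above, while the `v*` pattern and the Assets table are hypothetical names used only for illustration. Whether SHOW FUNCTIONS accepts a database name depends on the Spark version of the runtime, so treat that statement as an assumption to verify:

```sql
-- all views from a database
SHOW VIEWS FROM Testing;

-- same command with IN instead of FROM, filtered by a pattern (hypothetical prefix)
SHOW VIEWS IN Testing LIKE 'v*';

-- user-defined functions from a database (syntax support varies with the Spark version)
SHOW USER FUNCTIONS IN Testing;

-- databases matching a pattern
SHOW DATABASES LIKE 'Test*';

-- columns of a table (Assets is a hypothetical table name)
SHOW COLUMNS FROM Assets IN Testing;
```

As with SHOW TABLES, the patterns use * as wildcard and | to separate alternatives.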

References:

[1] Databricks (2024) SQL language reference (link)

[2] Delta Lake (2023) Delta Lake documentation (link)

[3] Microsoft Learn (2023) Azure Databricks documentation (link)

[4] Microsoft Learn (2023) Data Engineering documentation in Microsoft Fabric (link)

[5] Databricks (2023) Data objects in the Databricks lakehouse (link)
