Data Migration Series

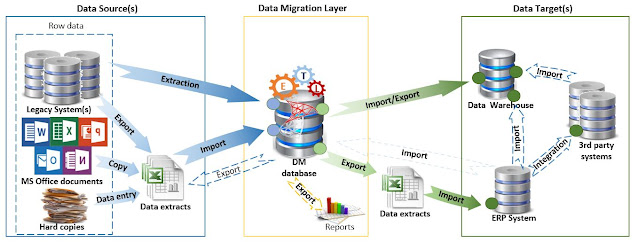

A Data Migration (DM) moves all or a subset of the data available in one or more systems into one or more other systems. For this purpose, especially in ERP implementations, one can use SQL Server as an intermediate layer: SSIS can be used for extracting and exporting the data, SSRS for reporting the errors, and the database engine for the heavy processing. Master Data Services and Data Quality Services can be used as well in certain scenarios. SQL Server thus covers by design the various challenges related to a DM. At a high level, the architecture can be depicted as follows:

Figure: Data Migration Architecture

Once the decision to go with SQL Server for the DM layer is made, one needs to define which edition to use. If the DM doesn't have special requirements, one can use an available SQL Server instance, as long as the cumulative workloads don't create major issues. In the past I therefore used existing licensed instances of SQL Server to build solutions for DMs in ERP implementations, though in each project I evaluated whether it was possible to reduce the costs and remain compliant with the license requirements.

Of course, there's always the alternative of using SQL Server Express, which supports databases with a maximum size of 10 GB. This should be enough for most DMs, though the edition has further limitations as well (see [2]). There are also ways of working around the existing limitations, like splitting the logic across multiple databases.
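The idea of splitting the data across multiple databases can be sketched as a simple planning step. The following is a minimal sketch, not a tested migration tool: the entity names, their sizes, the 80% headroom, and the function `split_across_databases` are all hypothetical, and the grouping uses a plain first-fit-decreasing heuristic.

```python
from typing import Dict, List

# Per-database size limit of SQL Server Express, as stated in the text above.
EXPRESS_MAX_DB_GB = 10.0


def split_across_databases(entity_sizes_gb: Dict[str, float],
                           limit_gb: float = EXPRESS_MAX_DB_GB,
                           headroom: float = 0.8) -> List[Dict[str, float]]:
    """Group entities into databases so that each database stays below
    limit_gb * headroom (first-fit decreasing; headroom is an assumption)."""
    capacity = limit_gb * headroom
    databases: List[Dict[str, float]] = []
    # Place the largest entities first, each into the first database with room.
    for name, size in sorted(entity_sizes_gb.items(),
                             key=lambda kv: kv[1], reverse=True):
        if size > capacity:
            raise ValueError(f"{name} ({size} GB) does not fit into a single database")
        for db in databases:
            if sum(db.values()) + size <= capacity:
                db[name] = size
                break
        else:
            # No existing database has room, so plan a new one.
            databases.append({name: size})
    return databases


# Hypothetical estimated sizes (in GB) of the staged entities.
plan = split_across_databases(
    {"Customers": 3.0, "Vendors": 2.0, "Items": 5.0, "Transactions": 6.0}
)
for number, db in enumerate(plan, start=1):
    print(f"DM_Part{number}: {sorted(db)} ({sum(db.values())} GB)")
```

An entity that alone exceeds the capacity raises an error, because it can't be handled by regrouping and would need another approach (e.g. partitioning the entity itself or using another edition).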

Then there's the SQL Server Developer edition, which involves no license costs, has the full SQL Server functionality available, and can be used to build and test applications. In a recent post [1], Bob Ward, principal architect at Microsoft, discusses the licensing of the Developer edition, which is "licensed for development, test, and demonstration purposes only" and "may not be used in a production environment". He makes the following clarifications:

(1) "Production environments include any system that is accessed by end-users for anything more than acceptance testing, environments that connects to production systems (such as Linked servers), disaster recovery or backups of production systems, and environments that are 'rotated' into production at any point in time." [1]

(2) One "cannot use Developer edition to build test data and move that same data into production" [1].

(3) One can "restore a production set of data backup for testing purposes" [1].
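The criteria quoted in (1) can be turned into a small checklist to review the environments of a DM. The following is only a sketch of one reading of the quoted text, not a licensing determination: the `Environment` class, its field names, and the example environment are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Environment:
    """Hypothetical description of an environment; one flag per criterion in (1)."""
    name: str
    end_user_access_beyond_acceptance_testing: bool = False
    connects_to_production_systems: bool = False   # e.g. via linked servers
    dr_or_backup_of_production: bool = False
    rotated_into_production: bool = False


def production_indicators(env: Environment) -> List[str]:
    """Return the criteria from (1) that the environment meets."""
    checks = [
        (env.end_user_access_beyond_acceptance_testing,
         "accessed by end-users for more than acceptance testing"),
        (env.connects_to_production_systems,
         "connects to production systems"),
        (env.dr_or_backup_of_production,
         "disaster recovery or backup of a production system"),
        (env.rotated_into_production,
         "rotated into production at some point in time"),
    ]
    return [reason for flag, reason in checks if flag]


# Hypothetical DM layer that reads the source data over a linked server.
dm_layer = Environment(name="DM layer", connects_to_production_systems=True)
reasons = production_indicators(dm_layer)
if reasons:
    print(f"{dm_layer.name} may count as production: " + "; ".join(reasons))
else:
    print(f"{dm_layer.name} meets none of the quoted criteria")
```

Any flagged criterion is a reason to review whether the Developer edition is appropriate for that environment.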

There are three impediments to using the Developer edition for the whole DM. First, at least during UAT and Go-Live, one needs to work with data coming directly from the various production environments. Secondly, the data generated by the solution are used primarily for UAT and in a second step for Production, which seems to be against rule (2), or at least is a grey area (which might be overlooked by Microsoft). Thirdly, some data from the production environment might need to be imported back into the DM layer for validation or for enhancing the entities with data generated in the target systems.

Concerning the first issue, the DM solution can always point to the test environments of the source systems, with the production databases being copied into the test environments during UAT. This might be necessary anyway for other purposes. Otherwise, the effort might be considerable, and not working with up-to-date data in the last phases might raise other concerns.

The second issue is a matter of interpretation. The UAT phase makes sure that the data generated by the DM solution meet the criteria for Go-Live. If there are no issues, the same data can be used for Go-Live. If another licensed edition is required for this, then an environment can be built only for UAT and Go-Live, project phases which usually span a couple of weeks, unless multiple migrations need to be performed at different points in time. If the environments are in the cloud, the instances can probably be turned on and off on an as-needed basis.

One can also plan for distinct Development and Production environments, either as distinct databases on the same SQL Server instance, or by using the Developer edition for Development and a licensed edition for UAT and Production. This approach involves additional overhead for synchronizing the logic between the environments. Conversely, in the case of the DM layer, the same environment can be used from beginning to end, in which case the code must be backed up periodically. For multiple migrations based on the same data, one should archive the data after each migration or important phase.

For the scenarios in which the data are copied back to the DM solution after migration, it's enough to perform these steps against the UAT target system(s). This should work as long as there are no differences in configuration between UAT and Production. There are however exceptions, e.g. data generated by the target systems for which the values differ between Production and UAT. At least in Dynamics 365, one can attempt to generate the values in the DM layer and import them as they are into the target system. This worked for many scenarios, though there can be exceptions here as well.

A more complex scenario is when data from the DM layer need to be exported to Data Warehouses or similar solutions that can be considered Production systems. Here a licensed edition seems to be mandatory. For other scenarios in which Master Data Services and/or Data Quality Services are needed, the only option is to use the Enterprise or the Developer edition (see [3]).

To summarize, to reduce the overall costs for the DM, consider using an existing licensed SQL Server instance for building the solution. If separate environments need to be built, the Express edition has some limitations, though it can prove to be a viable solution in many cases. Otherwise, consider the above workarounds for using the Developer edition, including the scenario in which distinct environments are used for Production and Development.

Resources:

[1] Microsoft Data Platform (2024) How SQL developers can maximize savings, by Bob Ward (link)

[2] Microsoft Learn (2024) Editions and supported features of SQL Server 2022 (link)

[3] Microsoft Learn (2023) Master Data Services and Data Quality Services Features Support (link)