A Software Engineer and data professional's blog on SQL, data, databases, data architectures, data management, programming, Software Engineering, Project Management, ERP implementation and other IT related topics.

02 February 2010

🕋Data Warehousing: Data Warehouse [DWH] (Definitions)

💎SQL Reloaded: Ways of Looking at Data II

Following the PO Header example and the logical partitioning, a view could be created based only on PO Details and its child tables. In this case there are no child tables, so does it make sense to create a view? Yes, why not?! The logic encapsulated by a view isn’t limited to joining tables; a view can also provide a restricted list of attributes, add computed columns or limit the scope by using constraints.

As can be seen from the above query, several computed columns have been introduced: OpenQty, the quantity remaining to be received, calculated as the difference between OrderedQty and ReceiptQty; ReceiptTotal, the total value of the quantities received; and OutstandingTotal, the total value corresponding to OpenQty. These three columns are met quite often in analysis, and even if their definition is easy to follow and implement, it makes sense to include them in the view, thus reducing logic replication. In the query writing best practices I recommended encapsulating formulas and business logic in functions; however, in this case the logic is quite simple and it doesn’t make sense to create a function for each formula used in a query. That recommendation applies mainly to (complex) formulas that are often reused.
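The computed columns described above can be sketched as follows. This is only an illustration: it uses Python's built-in `sqlite3` module instead of SQL Server, and the table, view and column layout (`PurchaseOrderDetail`, `vPurchaseOrderDetail`) is a simplified assumption, not the AdventureWorks schema.

```python
import sqlite3

# Hypothetical, simplified PO Details table; names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE PurchaseOrderDetail (
    PurchaseOrderID       INTEGER,
    PurchaseOrderDetailID INTEGER PRIMARY KEY,
    UnitPrice             REAL,
    OrderedQty            INTEGER,
    ReceiptQty            INTEGER
);
INSERT INTO PurchaseOrderDetail VALUES
    (1, 1, 10.0, 5, 5),
    (1, 2, 20.0, 4, 1);

-- The view keeps the three formulas in one place.
CREATE VIEW vPurchaseOrderDetail AS
SELECT PurchaseOrderID,
       PurchaseOrderDetailID,
       UnitPrice,
       OrderedQty,
       ReceiptQty,
       OrderedQty - ReceiptQty               AS OpenQty,
       ReceiptQty * UnitPrice                AS ReceiptTotal,
       (OrderedQty - ReceiptQty) * UnitPrice AS OutstandingTotal
FROM PurchaseOrderDetail;
""")

rows = conn.execute(
    "SELECT PurchaseOrderDetailID, OpenQty, ReceiptTotal, OutstandingTotal "
    "FROM vPurchaseOrderDetail ORDER BY PurchaseOrderDetailID"
).fetchall()
print(rows)
```

Every query built on the view gets the same definitions of the three columns, which is the point of keeping the formulas in the view.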

The alternative to partitioned views is to create an extended view that includes both PO Header and PO Details; such a view is really easy to use for most users. I prefer to use both approaches, thus having more flexibility.

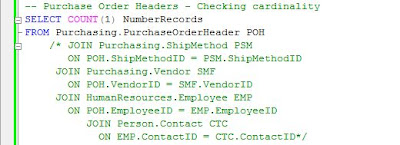

Do not forget to check the variations in cardinality! This exercise also allows identifying whether referential integrity is kept. Even if it’s hard to believe, I have found referential integrity broken in databases designed to enforce it, therefore it’s a good idea to check whether referential integrity holds between the various elements.
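A minimal sketch of such a check, assuming two hypothetical tables in an in-memory SQLite database (SQLite stands in for SQL Server here). A drop in the row count after an inner join, or rows returned by the left join with a NULL test, would point to broken referential integrity.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE PurchaseOrderHeader (PurchaseOrderID INTEGER PRIMARY KEY, VendorID INTEGER);
CREATE TABLE PurchaseOrderDetail (PurchaseOrderDetailID INTEGER PRIMARY KEY, PurchaseOrderID INTEGER);
INSERT INTO PurchaseOrderHeader VALUES (1, 10), (2, 11);
-- Detail 3 points to a header that does not exist (an orphan).
INSERT INTO PurchaseOrderDetail VALUES (1, 1), (2, 1), (3, 99);
""")

# Cardinality before and after the join.
detail_count = conn.execute(
    "SELECT COUNT(*) FROM PurchaseOrderDetail").fetchone()[0]
joined_count = conn.execute("""
    SELECT COUNT(*)
    FROM PurchaseOrderDetail d
    JOIN PurchaseOrderHeader h ON h.PurchaseOrderID = d.PurchaseOrderID
""").fetchone()[0]

# Details without a matching header.
orphans = conn.execute("""
    SELECT d.PurchaseOrderDetailID
    FROM PurchaseOrderDetail d
    LEFT JOIN PurchaseOrderHeader h ON h.PurchaseOrderID = d.PurchaseOrderID
    WHERE h.PurchaseOrderID IS NULL
""").fetchall()

print(detail_count, joined_count, orphans)
```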

The same query could also be created by using logically partitioned views, though before doing that we have to create a view for Products as well:

The final query based on logical partitioned views:

Before using the data, there are one or two tests that need to be done to make sure that the data available at the various levels of detail are synchronized: this is the case of the SubTotal, which should match the cumulated LineTotal of the corresponding lines.
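One way to run this kind of test is sketched below, again with SQLite and hypothetical, simplified tables. The tolerance of 0.005 is an assumption meant to absorb rounding differences and should be adapted to the data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE PurchaseOrderHeader (PurchaseOrderID INTEGER PRIMARY KEY, SubTotal REAL);
CREATE TABLE PurchaseOrderDetail (PurchaseOrderID INTEGER, LineTotal REAL);
INSERT INTO PurchaseOrderHeader VALUES (1, 150.0), (2, 80.0);
-- PO 2 is out of sync: its lines add up to 75, not 80.
INSERT INTO PurchaseOrderDetail VALUES (1, 100.0), (1, 50.0), (2, 75.0);
""")

# Return only the POs whose SubTotal differs from the sum of their lines.
mismatches = conn.execute("""
    SELECT h.PurchaseOrderID,
           h.SubTotal,
           COALESCE(SUM(d.LineTotal), 0) AS LinesTotal
    FROM PurchaseOrderHeader h
    LEFT JOIN PurchaseOrderDetail d ON d.PurchaseOrderID = h.PurchaseOrderID
    GROUP BY h.PurchaseOrderID, h.SubTotal
    HAVING ABS(h.SubTotal - COALESCE(SUM(d.LineTotal), 0)) > 0.005
""").fetchall()
print(mismatches)
```

An empty result means the two levels of detail agree.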

Simple reports at PO Detail level come down to retrieving the POs matching certain criteria: closed POs, open POs, POs with overreceipt, with rejections, or any other business case.

Note:

The * (ALL) has been used only to keep the queries short; as a good practice, avoid using “*” and list only the attributes needed!

Other types of reports show the data at different levels of aggregation, for example calculating the Total Amount spent for each Vendor/Product, the Outstanding Amount, etc.
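As an illustration of such an aggregation, the sketch below computes the total and the outstanding amount per Vendor/Product. The flattened `PODetail` table is an assumption made to keep the example self-contained; in practice the data would come from the views discussed earlier.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical flattened PO detail data.
CREATE TABLE PODetail (
    VendorID INTEGER, ProductID INTEGER,
    UnitPrice REAL, OrderedQty INTEGER, ReceiptQty INTEGER
);
INSERT INTO PODetail VALUES
    (1, 100, 10.0,  5, 5),
    (1, 100, 12.0, 10, 4),
    (2, 100, 11.0,  3, 0);
""")

totals = conn.execute("""
    SELECT VendorID,
           ProductID,
           SUM(OrderedQty * UnitPrice)                AS TotalAmount,
           SUM((OrderedQty - ReceiptQty) * UnitPrice) AS OutstandingAmount
    FROM PODetail
    GROUP BY VendorID, ProductID
    ORDER BY VendorID, ProductID
""").fetchall()
print(totals)
```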

More complex reports can be built with the help of window functions, for example showing the last PO Unit Price per Vendor/Product; such a report is quite useful when reevaluating the Standard Costs.
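A possible shape for the "last PO Unit Price" report is sketched below with `ROW_NUMBER()`. The table and its columns are hypothetical, and the example assumes a SQLite build with window function support (version 3.25 or later); the same pattern applies to SQL Server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE PODetail (
    VendorID INTEGER, ProductID INTEGER, OrderDate TEXT, UnitPrice REAL
);
INSERT INTO PODetail VALUES
    (1, 100, '2009-11-01', 10.0),
    (1, 100, '2010-01-15', 12.0),
    (2, 100, '2009-12-20', 11.0);
""")

# Rank the lines of each Vendor/Product by date, newest first, and keep rank 1.
last_prices = conn.execute("""
    SELECT VendorID, ProductID, OrderDate, UnitPrice
    FROM (
        SELECT VendorID, ProductID, OrderDate, UnitPrice,
               ROW_NUMBER() OVER (
                   PARTITION BY VendorID, ProductID
                   ORDER BY OrderDate DESC
               ) AS Ranking
        FROM PODetail
    ) AS ranked
    WHERE Ranking = 1
    ORDER BY VendorID, ProductID
""").fetchall()
print(last_prices)
```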

Happy coding!

01 February 2010

🕋Data Warehousing: Cube (Definitions)

"A subset of data, usually constructed from a data warehouse, that is organized and summarized into a multidimensional structure defined by a set of dimensions and measures. A cube's data is stored in one or more partitions." (Microsoft Corporation, "SQL Server 7.0 System Administration Training Kit", 1999)

"Name for a dimensional structure on a multidimensional or online analytical processing (OLAP) database platform, originally referring to the simple three-dimension case of product, market, and time." (Ralph Kimball & Margy Ross, "The Data Warehouse Toolkit" 2nd Ed, 2002)

"Proprietary data structure used to store data for an online analytical processing (OLAP) end user data access and analysis tool." (Sharon Allen & Evan Terry, "Beginning Relational Data Modeling" 2nd Ed., 2005)

"A multidimensional data structure that represents the intersections of each unique combination of dimensions. At each intersection there is a cell that contains a data value." (Reed Jacobsen & Stacia Misner, "Microsoft SQL Server 2005 Analysis Services Step by Step", 2006)

"Used with online analytical processing (OLAP), data cubes are multidimensional structures built from one or more tables in a relational database(s)." (Sara Morganand & Tobias Thernstrom , "MCITP Self-Paced Training Kit : Designing and Optimizing Data Access by Using Microsoft SQL Server 2005 - Exam 70-442", 2007)

"A multidimensional structure that contains dimensions and measures." (Robert D Schneider & Darril Gibson, "Microsoft SQL Server 2008 All-in-One Desk Reference For Dummies", 2008)

"A multidimensional structure that contains dimensions and measures. Cubes are a denormalized version of either the entire database or part of the database and are used within SQL Server Analysis Services (SSAS)." (Robert D. Schneider and Darril Gibson, "Microsoft SQL Server 2008 All-In-One Desk Reference For Dummies", 2008)

"A set of data that is organized and summarized into a multidimensional structure defined by a set of dimensions and measures." (Jim Joseph, "Microsoft SQL Server 2008 Reporting Services Unleashed", 2009)

"A database object that organizes data for accessibility in an OLAP database." (Ken Withee, "Microsoft® Business Intelligence For Dummies®", 2010)

"A multi-dimensional data structure that contains an aggregate value at each point, i.e., the result of applying an aggregate function to an underlying relation. Data cubes are used to implement OLAP." (DAMA International, "The DAMA Dictionary of Data Management", 2011)

"Refers to the multidimensional data structure used to store and manipulate data in a multidimensional DBMS. The location of each data value in the data cube is based on the x-, y-, and z-axes of the cube. Data cubes are static (must be created before they are used), so they cannot be created by an ad hoc query." (Carlos Coronel et al, "Database Systems: Design, Implementation, and Management" 9th Ed, 2011)

"A set of data that is organized and summarized into a multidimensional structure that is defined by a set of dimensions and measures." (Microsoft, "SQL Server 2012 Glossary", 2012)

"A multidimensional representation of data needed for online analytical processing, multidimensional reporting, or multidimensional planning applications." (Sybase, "Open Server Server-Library/C Reference Manual", 2019)

"Cubes, also known as OLAP cubes, are preprocessed and presummarized collections of data that drastically improve query time. [...] OLAP cubes are logical structures as defined by the metadata." (Piethein Strengholt, "Data Management at Scale", 2020)

🕋Data Warehousing: Data Warehousing (Definitions)

🧭Business Intelligence: Enterprise Reporting (Part VI: Filtering)

| Business Intelligence Series |

Most of the values used in query constraints can be considered parameters whose values are provided by filters; it’s even recommended to use filters instead of hard-coding values, thus improving the reports’ flexibility. Parameters can be optional or mandatory; in the latter case a value must be provided in the corresponding filter, therefore such filter controls should be marked accordingly. The actual values, especially date values, should be validated as early as possible, before the data are fetched, and unnecessary roundtrips to the server should be avoided.
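The two ideas above, passing filter values as parameters and validating them before the query is sent, can be sketched as follows. The helper names (`parse_date`, `orders_since`), the table and the `yyyy-mm-dd` input format are assumptions made for the example.

```python
import sqlite3
from datetime import datetime

def parse_date(value):
    """Validate a yyyy-mm-dd filter value before any query is sent."""
    return datetime.strptime(value, "%Y-%m-%d").date()

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE PurchaseOrderHeader (PurchaseOrderID INTEGER PRIMARY KEY, OrderDate TEXT);
INSERT INTO PurchaseOrderHeader VALUES (1, '2010-01-10'), (2, '2010-02-01');
""")

def orders_since(conn, raw_date):
    # An invalid date raises ValueError here, without a roundtrip to the server.
    order_date = parse_date(raw_date)
    # The value is passed as a parameter instead of being hard-coded in the SQL.
    return conn.execute(
        "SELECT PurchaseOrderID FROM PurchaseOrderHeader "
        "WHERE OrderDate >= ? ORDER BY PurchaseOrderID",
        (order_date.isoformat(),),
    ).fetchall()

result = orders_since(conn, "2010-01-15")
print(result)
```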

In the case of reports requested by Users the filters are typically specified beforehand, though in time other Users come to use the same report for other purposes, requesting additional filters in order to select the needed chunk of data. Adding a new filter or modifying an existing one requires a change to the report too, which in the end requires technical resources to develop, test and document the changes. Such gradual changes end up being quite expensive, therefore the aim should be to capture and address the filtering requirements early, in the design phase.

Speaking from experience, the focus for filtering is typically on Master Data (e.g. Customers, Vendors, Items, etc.), Document Numbers (e.g. PO Number, Invoice Number), Document Dates (e.g. Order Date, Invoice Date) or any other Dates important for the business, lists of values (e.g. Statuses), and occasionally amounts or quantities. Maybe 50-70% of the attributes of a report match these criteria, the average report having between 20 and 40 attributes. The list of attributes used for filtering could be different for reports based on the same data, the selection of attributes depending on the report’s type and level of detail. For example, in a PO report it would be useful to filter on PO Number, Vendor, Buyer, Item Number, Open/Closed POs, etc., whereas in summary reports it might be necessary to select the top x vendors based on the amounts spent, or to select the POs with the price variation in a certain interval.

A second problem is how to make use of the existing controls (text boxes, dropdowns, check boxes, radio buttons, mask controls, etc.) in order to provide Users with easy-to-use and flexible filtering. An inappropriate use of controls could considerably decrease the reports’ performance, even making a report unusable. There is actually no big philosophy behind choosing the controls, the choice depending mainly on the attributes’ data type (numeric, alphanumeric, date or boolean) and on whether single or multiple selection of values is required. List boxes, dropdowns, checkboxes and even text boxes, when the values are comma-delimited, allow filtering on multiple values, another technique for the same purpose being the use of wildcards.

Alphanumeric Attributes

As a general guideline, for alphanumeric (text) values with high variability (e.g. PO Number, Serial Number) it’s preferable to use a free-entry text box, which could additionally allow the use of wildcards. For alphanumeric values with relatively small variability it’s preferable to use a (multi-selection) dropdown control or controls with similar features (e.g. list box control, text box + list box); such controls are populated with values from the database each time the screen is loaded, which makes them unsuitable for huge lists of values. Typically, if the list has more than 100-200 values, the use of text boxes should definitely be considered, possibly with search capabilities; alternatively, given that the rate of change of such lists can be ignored, the list could be cached.
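Wildcards entered in a free-entry text box usually need to be translated into the pattern syntax of the database. The sketch below assumes that Users type `*` and `?` and that the query uses `LIKE`; the function `to_like_pattern` and the table are hypothetical.

```python
import sqlite3

def to_like_pattern(user_text):
    """Translate user wildcards (* and ?) into a SQL LIKE pattern."""
    # Escape characters that LIKE would otherwise treat as wildcards.
    escaped = (user_text.replace("\\", "\\\\")
                        .replace("%", "\\%")
                        .replace("_", "\\_"))
    return escaped.replace("*", "%").replace("?", "_")

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE PurchaseOrder (PONumber TEXT);
INSERT INTO PurchaseOrder VALUES ('PO-1001'), ('PO-1002'), ('PO-2001');
""")

# The pattern is passed as a parameter; the backslash is declared as escape character.
matches = conn.execute(
    "SELECT PONumber FROM PurchaseOrder "
    "WHERE PONumber LIKE ? ESCAPE '\\' ORDER BY PONumber",
    (to_like_pattern("PO-1*"),),
).fetchall()
print(matches)
```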

Date Attributes

For date attributes it’s advisable to use a From Date/To Date combination of controls, which allows Users to limit the report’s scope to a certain time interval. If one of the values is missing, a limit is imposed only for the specified value. The date format could be enforced with the help of a mask control, which allows only the selection of valid dates, or the expected format should at least be specified.
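The From Date/To Date behavior, where a missing bound imposes no limit, could be implemented along these lines. `build_date_filter` is a hypothetical helper; the column name is assumed to come from the report definition, never from user input.

```python
import sqlite3

def build_date_filter(column, date_from=None, date_to=None):
    """Return a WHERE clause and its parameters; a missing bound adds no limit."""
    clauses, params = [], []
    if date_from is not None:
        clauses.append(f"{column} >= ?")
        params.append(date_from)
    if date_to is not None:
        clauses.append(f"{column} <= ?")
        params.append(date_to)
    where = (" WHERE " + " AND ".join(clauses)) if clauses else ""
    return where, params

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE PurchaseOrderHeader (PurchaseOrderID INTEGER PRIMARY KEY, OrderDate TEXT);
INSERT INTO PurchaseOrderHeader VALUES
    (1, '2010-01-10'), (2, '2010-01-20'), (3, '2010-02-05');
""")

def order_ids(date_from=None, date_to=None):
    where, params = build_date_filter("OrderDate", date_from, date_to)
    sql = ("SELECT PurchaseOrderID FROM PurchaseOrderHeader"
           + where + " ORDER BY PurchaseOrderID")
    return [row[0] for row in conn.execute(sql, params)]

print(order_ids(date_from="2010-01-15"))
print(order_ids("2010-01-15", "2010-01-31"))
```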

Numeric Attributes

For numeric values, normal text boxes would do when masked controls can’t be used, the former allowing the use of wildcards for attributes that typically could also be alphanumeric (e.g. document numbers). For numeric intervals a pair of controls should be used for the lower and upper bound, behaving similarly to the date intervals. It is necessary to differentiate between the various types of decimal delimiters, therefore it’s recommended to specify the delimiter used or at least to transform the numeric value in the background to the format expected by the database/tool. The use of controls that allow multi-selection of numeric values, except for delimiter-separated values provided in text boxes, doesn’t really make sense unless the list of possible values is quite small.
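Transforming a numeric value "in the background" could look like the sketch below, which assumes that the decimal delimiter is known (for example from the User's regional settings) and that the other of the two characters, if present, is a thousands separator. `parse_amount` is a hypothetical helper.

```python
from decimal import Decimal

def parse_amount(text, decimal_sep):
    """Normalize a user-entered number to a Decimal, given its decimal delimiter."""
    if decimal_sep not in (",", "."):
        raise ValueError("decimal_sep must be ',' or '.'")
    # The other character is treated as a grouping (thousands) separator.
    group_sep = "." if decimal_sep == "," else ","
    cleaned = text.strip().replace(" ", "").replace(group_sep, "")
    return Decimal(cleaned.replace(decimal_sep, "."))

print(parse_amount("1.234,56", ","))
print(parse_amount("1,234.56", "."))
```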

Boolean Attributes

Boolean data types are used to store the value of attributes that have a true or false state, and possibly Null values, therefore checkboxes and radio buttons could be used for this purpose. The two-state case could be easily implemented with a single checkbox when only one of the states is of interest, while two or three checkboxes can be used for selecting more than one value. On the other hand, radio buttons allow the selection of only one value, thus selecting either the false, the true or the missing values. Such filters could also be used to activate hard-coded complex logic based on multiple attributes and reduced to Boolean values.

31 January 2010

🧭Business Intelligence: Enterprise Reporting (Part V: Choosing Report’s Attributes)

| Business Intelligence Series |

When choosing the attributes for a report, there are several important sets of attributes that need to be considered:

Unique identifiers

Together with the various Names (e.g. Vendor Name, Customer Name) associated with entities, a report should also include the “unique identifier” (UID) of each entity, even if it is formed from more than one attribute. The UID allows, for example, identifying whether duplicate records appear in the report, or it could be used to match/join the data from the report with other data sets in order to pull details or for further analysis. For example, in a PO report over PO Shipments a unique (natural) key could be formed from the PO Number, Line Number and Shipment Number; for a Vendor the Vendor Name or the GSL (Global Supplier Location) Number could be used, though the latter is more adequate because it’s more general and accurate, making the Vendor’s identification easier. In theory, the database (surrogate) unique identifier from the PO Shipments table (the element dictating the report’s level of detail), respectively the Vendor ID, could be used for the same purpose; however, even if surrogate UIDs are easier to use in joins, they could create confusion and overload the reports, given that surrogate UIDs would need to be provided for the other elements as well.

Documents like Invoices have an external and an internal unique identifier: the Invoice Number together with the Vendor, typically unique in a system, forms the external UID, while the Document or Voucher Number is used as internal UID. The external UID is easier to use for external-based considerations, while the internal UID is easier to use for internal needs, so it makes sense to include both types of unique identifiers.
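To illustrate how a natural key helps spot duplicate records in exported report data, here is a small sketch. The rows and field names are invented for the example; the key follows the PO Number, Line Number and Shipment Number combination mentioned above.

```python
from collections import Counter

# Hypothetical rows exported from a PO Shipments report.
rows = [
    {"PONumber": "PO-1", "LineNumber": 1, "ShipmentNumber": 1, "Qty": 5},
    {"PONumber": "PO-1", "LineNumber": 1, "ShipmentNumber": 2, "Qty": 3},
    {"PONumber": "PO-1", "LineNumber": 1, "ShipmentNumber": 2, "Qty": 3},
    {"PONumber": "PO-2", "LineNumber": 1, "ShipmentNumber": 1, "Qty": 7},
]

def natural_key(row):
    """Natural key at PO Shipment level."""
    return (row["PONumber"], row["LineNumber"], row["ShipmentNumber"])

# Count how often each key occurs and keep the keys that repeat.
counts = Counter(natural_key(row) for row in rows)
duplicates = sorted(key for key, n in counts.items() if n > 1)
print(duplicates)
```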

Quantities & Related Attributes

In Item-related reports, most of the time it makes sense to also include the quantities (e.g. Transaction, Ordered, Delivered, Invoiced, On-Hand Quantities) together with the Unit of Measure (UOM) in which they are expressed. A distinction has to be made between the Primary UOM, the UOM in which the item is stored, and the Transactional UOM, the UOM in which the Item is transacted; for example, the Purchasing UOM, Sales UOM or Transaction UOM could be different from the Primary UOM in which the item is stored in the Warehouse. In such cases, the Primary UOM and, when applicable, the UOM Conversion Rate should be provided together with the Transactional UOM.

Prices/Amounts & Related Attributes

For Item-related reports, and not only, include the various Prices (e.g. Sales, Purchase, Standard Price) together with the Currency Code used, even if only one Currency is used; the same rule applies to the amounts stored (e.g. Invoice, Sales Order, Purchase amounts). For financial reports it’s advisable to show both the functional amounts, expressed in the Currency used by the GL (General Ledger), and the transactional amounts, expressed in the Currency used in the transaction. When the level of detail allows it, also show the Quantity and Price Unit used to calculate the amounts, and the Exchange Rate or UOM Conversion Rate used, if any. When available, also include the Period in which the Amount was booked in the system.

Dates

Typically the Document Date (e.g. Invoice Date, Order Date) and the Document Creation Date should be included, together with the other Dates important for the business or for data analysis (e.g. Need By Date, GL Date, Value Date). In general the Document Date or Document Creation Date, and the GL Date for financial reports, should be mandatory attributes because they can be used to segment (partition) a data set into time units (e.g. days, weeks, months, periods, years).

Statuses

The various record statuses and document statuses should also be mandatory attributes in reports. Record statuses show whether a record is active, was cancelled or marked as deleted, while document statuses show the documents’ processing status, often being associated with a workflow (e.g. approval or processing workflows). The record statuses could be synchronized and even merged with the document statuses.

Either expressed as flags or lists of values, statuses are essential in delimiting the data set that needs to be considered for further calculations, because unapproved documents or cancelled records often have low or no relevance for the business. Unapproved documents are typically not considered in the various calculations until they are approved, while cancelled records are associated with mistakes or with a need that no longer exists. Not being able to identify the active records can mess things up pretty badly because, for example, some reports show only the active records, while others show all the data available in a system. Therefore showing the statuses in reports can be important in mitigating the differences between reports, especially when dealing with calculations.

It’s advisable to have the possibility to see also the cancelled records, for example in order to analyze the amount of waste expressed as overwork or for identifying the records that were cancelled by mistake.

In reports with multiple levels of detail, it can be useful to show the statuses from all levels, as statuses might not be in sync or might have different meanings. In theory, when the statuses are in sync, and especially when considering cancellations, it should be enough to consider the status from the lowest level of detail of each logical entity (e.g. PO Shipment Status when considering POs, Invoice Line Status when considering Invoices, both statuses when considering POs together with Invoices), though reality can prove to be a tough world for statuses, as programming errors and other business scenarios need to be considered.

Action Owners

Include Requestors, Document Preparers, Buyers, Managers or any other type of action owners, so a user can track the direct or indirect issues back to them.

Note:

Such attributes can be used as a basis to calculate/reflect the action owner’s performance, which can infringe country or organization regulations, so you need to check whether there are any constraints in this direction and which set of attributes might be impacted. For example, it might be no problem to show the Buyer, though it might be a problem to show who created/modified the record. If the performance needs to be calculated at action owner level, substitute any attribute that can be used to identify a person with a random value; however, if the mapping between the action owner and the substitute value is known (in case unique identifiers are used) or easy to get (by checking records in the system), the data might still be misused.

🧭Business Intelligence: Enterprise Reporting (Part IV: Design Best Practices)

| Business Intelligence Series |

Report’s Design best practices:

- Reduce unnecessary network traffic.

- Group together the attributes coming from the same table/element/module.

- Use uniform coding style, formatting and naming conventions.

- Handle missing values (NULLs).

- Avoid hard-coding values.

- Include filter information, the date when the report was run and possibly the name of the User who ran the report.

- Design the report to fit screen’s resolution (e.g. 1280x1024) [1].

- Use existing reports as templates [1].

- Consider local date/time [1], currencies, decimal formatting.

- Highlight important data accordingly (e.g. styles & conditional formatting).

- Separate label text from expressions [1].

- Use pagination in order to improve performance for large reports.

- Avoid blank pages.

- Keep objects together.

- Use flexible easy-to-use filters.

- Validate input filter data in early phases.

- Provide a report for each level of detail.

- Back up aggregated reports with detailed reports.

- Link reports by using drill-down, drill-through and sub-report techniques.

- Include unique identifiers for each important data element.

- Include a running number.

- Don’t overload the reports.

Report’s Management best practices:

- Provide an integrated reporting solution and integrate/align it with Data/Information/Knowledge Management strategy/vision.

- Align reports with the organization’s strategic, financial and operational plans.

- Align reports with decision making and performance management.

- Assign an Owner for each report.

- Provide metadata (e.g. Owner, Scope, Business Case, Attributes’ meaning, when the data were last time updated etc).

- Stabilize the requirements before creating the report.

- Train the Users to use the reports/reporting tools efficiently.

- Create processes for report creation, modification, testing, issue reporting and mitigation.

- Track report’s usage and performance.

- Write unit tests & test the reports.

- Use versioning & keep older versions.

- Document reports (queries and dependent objects, filtering parameters, scope, business case, etc).

- Minimize reporting server’s workload.

- Archive/Backup important reports.

Report’s Security best practices:

- Use role-based security.

- Protect sensitive data.

- Enforce password best practices.

- Educate users about security concerns related to reporting tool’s security and security of data usage.

- Export data to formats that don’t allow data tampering.

References:

[1] Microsoft TechNet. (2010). Best Practices for Reporting. [Online] Available from: http://technet.microsoft.com/en-us/library/cc180385.aspx (Accessed: 31 January 2010).

30 January 2010

💎SQL Reloaded: Ways of Looking at Data I (Some Basics)

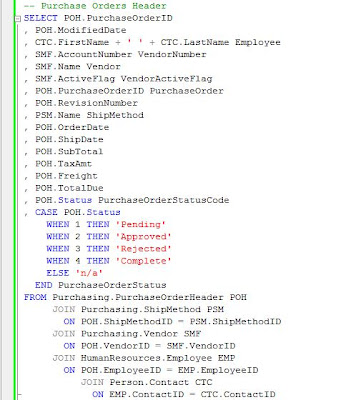

Before using the query further, it’s always a good idea to check the variations in cardinality, starting from the main table and continuing with each join added; this test allows identifying the incorrect joins. When a WHERE clause is involved, it’s easier to use the comment characters (/* … */) and uncomment each join gradually.
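The cardinality check can be sketched as a sequence of counts, one after each join. The tables below are hypothetical and SQLite is used only to keep the example runnable; the jump from 2 to 3 rows shows a join at the wrong level of detail (a Vendor with two contacts).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE PurchaseOrderHeader (PurchaseOrderID INTEGER PRIMARY KEY, VendorID INTEGER);
CREATE TABLE Vendor (VendorID INTEGER PRIMARY KEY, Name TEXT);
CREATE TABLE VendorContact (VendorID INTEGER, ContactID INTEGER);
INSERT INTO PurchaseOrderHeader VALUES (1, 10), (2, 11);
INSERT INTO Vendor VALUES (10, 'Alpha'), (11, 'Beta');
-- Vendor 10 has two contacts, so joining on contacts multiplies its POs.
INSERT INTO VendorContact VALUES (10, 500), (10, 501), (11, 502);
""")

def count(sql):
    return conn.execute(sql).fetchone()[0]

# Start from the main table and add one join at a time.
base = count("SELECT COUNT(*) FROM PurchaseOrderHeader h")
with_vendor = count("""
    SELECT COUNT(*)
    FROM PurchaseOrderHeader h
    JOIN Vendor v ON v.VendorID = h.VendorID
""")
with_contact = count("""
    SELECT COUNT(*)
    FROM PurchaseOrderHeader h
    JOIN Vendor v ON v.VendorID = h.VendorID
    JOIN VendorContact c ON c.VendorID = v.VendorID
""")
print(base, with_vendor, with_contact)
```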

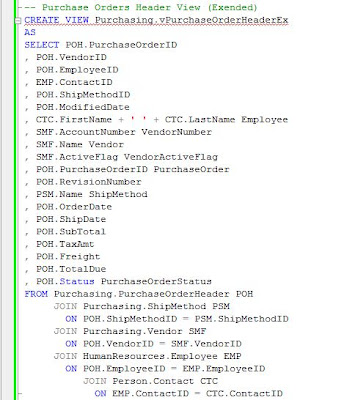

Sometimes it makes sense to encapsulate the query in a view, thus allowing the logic to be reused when needed, minimizing maintenance and enforcing vertical and horizontal security, to mention just a few of the benefits of views. It’s said that views come with a slight decrease in performance, though there is always a trade-off between performance and reusability, including in OOP. It’s recommended to follow query writing best practices and to design your queries for the best performance. In addition to the columns from the initial query, it’s recommended to also add to the view the IDs used in the joins, for faster filtering of records or for easier troubleshooting. I prefer to add them in front of the other attributes, though their use could be facilitated when they are grouped together with the attributes of the table referenced.

You probably wonder what the Ex postfix means; as you probably deduced from the comment, it stands for Extended. In general I prefer to create a view for each level of detail, in this case one for PO Headers and another one for PO Details, and to use nested views when the decrease in performance is minimal, or especially when the views include complex logic I don’t want to replicate. In addition, the views could be reused by Users, who don’t have to replicate the logic already included in the view, making the analysis of data much easier. On the other hand, when using views from various modules (e.g. Accounts Payable vs. POs, Sales vs. POs), there are many elements that end up being used more than once, in general Master Data (e.g. Products, Vendors, Customers, Locations, etc.). In order to avoid this type of unnecessary join, I prefer to partition the views logically, so that no two views contain similar data, though this doesn’t mean that there won’t be views that contradict this rule.

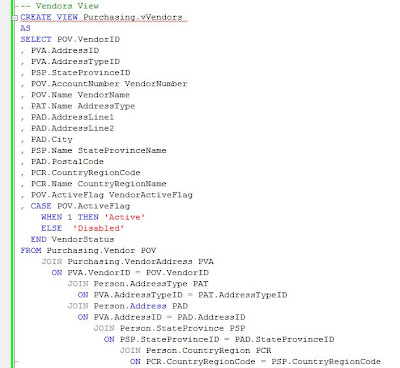

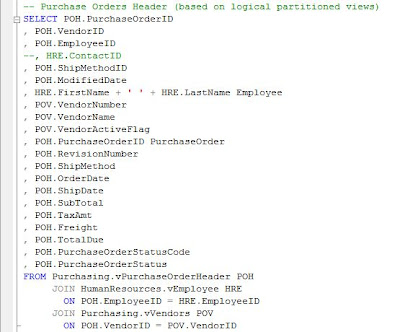

Using the logical partitioning of views, an alternative view on PO Headers would be created without showing the Vendor and Employee information, the corresponding views being used when that information is needed. AdventureWorks includes a view on Vendors called Purchasing.vVendor, though its level of detail is the Contact, and a Vendor could have more than one contact, therefore the view can’t be used for this purpose; a second view called Purchasing.vVendors needs to be created (if the name creates confusion, you could rename it, or rename Purchasing.vVendor to reflect the fact that its level is Vendor Contacts, for example Purchasing.vVendorContacts).

The problem with the Purchasing.vPurchaseOrderHeaderEx view is that if a User needs additional information about Vendors that is not available in the view, either I’ll have to add the attributes, ending up recreating most of the logic available in the Purchasing.vVendors view, or the User will join the Purchasing.vVendors view again with Purchasing.vPurchaseOrderHeaderEx, introducing a redundant link to Vendors. The simplified version of the view is the following:

Now the three views (Purchasing.vVendors, HumanResources.vEmployee and Purchasing.vPurchaseOrderHeader) could be joined in a query to obtain the same result as the Purchasing.vPurchaseOrderHeaderEx view. This approach offers much more flexibility, even if the decrease in performance is higher; still, the performance should in theory be better than when joining Purchasing.vPurchaseOrderHeaderEx with Purchasing.vVendors and HumanResources.vEmployee in order to cover the missing attributes. Unfortunately there is another small hitch: the HumanResources.vEmployee view doesn’t contain the ContactID, therefore the view needs to be modified if that attribute is needed.

Logical partitioning also allows more flexibility and relatively better performance when doing data analysis. For example, in order to get the volume purchased per Vendor, the Purchasing.vPurchaseOrderHeader view could be used to aggregate the data, the resulting dataset being then joined with the Purchasing.vVendors view.
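
As the original listing is not available here, below is only a minimal sketch of the "aggregate first, then join" idea. The two table variables are hypothetical, simplified stand-ins for the Purchasing.vVendors and Purchasing.vPurchaseOrderHeader views, and the column names and sample rows are assumptions made for illustration:

```sql
-- Hypothetical, simplified stand-ins for Purchasing.vVendors and Purchasing.vPurchaseOrderHeader
DECLARE @Vendors TABLE (VendorID int PRIMARY KEY, VendorName nvarchar(50), CountryRegionName nvarchar(50));
DECLARE @POHeaders TABLE (PurchaseOrderID int PRIMARY KEY, VendorID int, Status tinyint, TotalDue money);

INSERT INTO @Vendors VALUES (1, N'Vendor A', N'Germany'), (2, N'Vendor B', N'France'), (3, N'Vendor C', N'Germany');
INSERT INTO @POHeaders VALUES (10, 1, 2, 100.00), (11, 1, 4, 250.00), (12, 2, 2, 75.00), (13, 2, 3, 40.00);

-- Volume purchased per Vendor: the PO Headers are aggregated first, then joined with the Vendors
SELECT VEN.VendorID
, VEN.VendorName
, POH.NumberPOs
, POH.TotalDue
FROM @Vendors VEN
     JOIN ( -- aggregated PO Headers
        SELECT VendorID
        , COUNT(*) NumberPOs
        , SUM(TotalDue) TotalDue
        FROM @POHeaders
        WHERE Status IN (2, 4) -- AdventureWorks convention: 2 = Approved, 4 = Complete
        GROUP BY VendorID
     ) POH
       ON VEN.VendorID = POH.VendorID;
```

Because of the inner join, Vendors without approved or completed POs do not appear in the result.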

A second look at the same sets of data, Vendors and PO Headers, could be based on a left join between the two, thus seeing also the Vendors for which no PO was approved or completed; the query could be used to return the same data as the previous dataset (see the ‘Vendors with POs’ constraint). This second query offers greater flexibility at the cost of a small decrease in performance and, in addition, the NULL values must be taken care of.
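
A possible shape of the left join variant is sketched below, again on hypothetical table variables rather than the actual views. The commented-out constraint is an assumption about how the ‘Vendors with POs’ filter could be written:

```sql
-- Hypothetical, simplified stand-ins for Purchasing.vVendors and Purchasing.vPurchaseOrderHeader
DECLARE @Vendors TABLE (VendorID int PRIMARY KEY, VendorName nvarchar(50), CountryRegionName nvarchar(50));
DECLARE @POHeaders TABLE (PurchaseOrderID int PRIMARY KEY, VendorID int, Status tinyint, TotalDue money);

INSERT INTO @Vendors VALUES (1, N'Vendor A', N'Germany'), (2, N'Vendor B', N'France'), (3, N'Vendor C', N'Germany');
INSERT INTO @POHeaders VALUES (10, 1, 2, 100.00), (11, 1, 4, 250.00), (12, 2, 2, 75.00), (13, 2, 3, 40.00);

-- All Vendors, with or without approved/completed POs
SELECT VEN.VendorID
, VEN.VendorName
, IsNull(POH.NumberPOs, 0) NumberPOs -- NULL when the Vendor has no matching PO
, IsNull(POH.TotalDue, 0) TotalDue
FROM @Vendors VEN
     LEFT JOIN ( -- aggregated PO Headers
        SELECT VendorID
        , COUNT(*) NumberPOs
        , SUM(TotalDue) TotalDue
        FROM @POHeaders
        WHERE Status IN (2, 4) -- 2 = Approved, 4 = Complete
        GROUP BY VendorID
     ) POH
       ON VEN.VendorID = POH.VendorID
-- WHERE POH.VendorID IS NOT NULL -- Vendors with POs
;
```

With the sample rows, Vendor C is returned with zero values instead of being dropped, which is the main difference from the inner join version.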

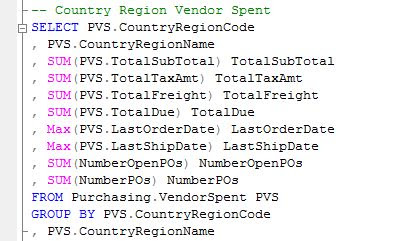

The above examples showed the cumulated values at Vendor level, but what happens when we need to aggregate the data at CountryRegionName level? For this purpose either of the two queries above could be used, the data being simply aggregated at CountryRegionName level. Given that the second query provides greater flexibility, it could be encapsulated in a view, thus allowing us to easily aggregate the data at any level we want: CountryRegionName, StateProvinceName or even City.
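
As a rough illustration, the Vendor-level dataset could be wrapped in a derived table (standing in here for the view) and aggregated further. The table variables, columns and sample rows are hypothetical:

```sql
-- Hypothetical, simplified stand-ins for Purchasing.vVendors and Purchasing.vPurchaseOrderHeader
DECLARE @Vendors TABLE (VendorID int PRIMARY KEY, VendorName nvarchar(50), CountryRegionName nvarchar(50));
DECLARE @POHeaders TABLE (PurchaseOrderID int PRIMARY KEY, VendorID int, Status tinyint, TotalDue money);

INSERT INTO @Vendors VALUES (1, N'Vendor A', N'Germany'), (2, N'Vendor B', N'France'), (3, N'Vendor C', N'Germany');
INSERT INTO @POHeaders VALUES (10, 1, 2, 100.00), (11, 1, 4, 250.00), (12, 2, 2, 75.00), (13, 2, 3, 40.00);

-- Cumulated values at CountryRegionName level
SELECT DAT.CountryRegionName
, COUNT(*) NumberVendors
, SUM(DAT.NumberPOs) NumberPOs
, SUM(DAT.TotalDue) TotalDue
FROM ( -- Vendor-level dataset (the candidate for a view)
    SELECT VEN.VendorID
    , VEN.CountryRegionName
    , IsNull(POH.NumberPOs, 0) NumberPOs
    , IsNull(POH.TotalDue, 0) TotalDue
    FROM @Vendors VEN
         LEFT JOIN ( -- aggregated PO Headers
            SELECT VendorID
            , COUNT(*) NumberPOs
            , SUM(TotalDue) TotalDue
            FROM @POHeaders
            WHERE Status IN (2, 4) -- 2 = Approved, 4 = Complete
            GROUP BY VendorID
         ) POH
           ON VEN.VendorID = POH.VendorID
   ) DAT
GROUP BY DAT.CountryRegionName;
```

Changing the grouping level then only requires replacing CountryRegionName in the outer query, provided the attribute is exposed by the inner dataset.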

Sometimes simple aggregations are not enough, Users needing more from the data, for example to see which is the last/first (open) PO placed in the system for each Vendor. Such a report looks simple as a request, though it is not always so easy to provide. Before the introduction of window functions in SQL Server and analytic functions in Oracle, the techniques for such requests were not so elegant… Here is the SQL Server implementation using the RANK ranking window function in order to get the Last/First PO, the same aggregated amounts from the previous query being shown with the help of aggregate window functions.
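
The sketch below is not the original listing, only an illustration of the technique on a hypothetical table variable: RANK identifies the last PO per Vendor, while the aggregate window functions return the Vendor-level totals on the same row.

```sql
-- Hypothetical, simplified stand-in for Purchasing.vPurchaseOrderHeader
DECLARE @POHeaders TABLE (PurchaseOrderID int PRIMARY KEY, VendorID int, Status tinyint, OrderDate datetime, TotalDue money);

INSERT INTO @POHeaders VALUES (10, 1, 2, '20100105', 100.00), (11, 1, 4, '20100120', 250.00)
, (12, 2, 2, '20100110', 75.00), (13, 2, 3, '20100125', 40.00);

-- Last PO per Vendor together with the Vendor-level aggregates
SELECT DAT.VendorID
, DAT.PurchaseOrderID LastPurchaseOrderID
, DAT.OrderDate LastOrderDate
, DAT.NumberPOs
, DAT.VendorTotalDue
FROM (
    SELECT POH.VendorID
    , POH.PurchaseOrderID
    , POH.OrderDate
    , RANK() OVER (PARTITION BY POH.VendorID ORDER BY POH.OrderDate DESC, POH.PurchaseOrderID DESC) RankLastPO
    , COUNT(*) OVER (PARTITION BY POH.VendorID) NumberPOs
    , SUM(POH.TotalDue) OVER (PARTITION BY POH.VendorID) VendorTotalDue
    FROM @POHeaders POH
    WHERE POH.Status IN (2, 4) -- 2 = Approved, 4 = Complete
   ) DAT
WHERE DAT.RankLastPO = 1;
```

For the first PO the sort order in the RANK function would be changed to ascending. The PurchaseOrderID is added to the ORDER BY only as a tie-breaker, so that a single row is returned per Vendor.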

Note: Most data analysis focuses only on a certain list of vendors, countries or regions, on a certain time interval, or on other special requirements, for example on the pending POs, in order to approximate the future expenses, or on the rejected POs, in order to see the volume of waste/rework or to quantify the lost opportunities. The above queries were created for general cases and they could be modified for specific requirements if needed.

28 January 2010

💎SQL Reloaded: Query Writing I (Best Practices)

- Use ANSI-compliant syntax as much as possible.

- Use aliases for all the tables.

- Design for performance, reusability and security.

- Reduce unnecessary network traffic.

- Group together the attributes coming from the same table.

- Rename columns in order to avoid confusion, though don’t overreact with this practice.

- Use uniform coding style, formatting and naming conventions.

- Use indexed join predicates.

- Learn about the strengths/weaknesses of each feature before using it.

- Use versioning & keep older versions.

- Test the queries.

- Write unit tests first [2].

- Handle missing values (NULLs).

- Document database objects inline and in specific documents (e.g. Data Dictionaries, Functional Specifications).

- Refactor code.

- Use SQL tuning tools.

- Write tiny chunks of code: encapsulate formulas and business logic in functions [2], avoid inline scalar functions.

- Defensive coding: use exception handling, consider all scenarios.

Things to avoid:

- Complex expressions in search conditions [1].

- Join predicates on expressions [1].

- Expressions over columns in local predicates [1].

- Data types mismatches on join columns [1].

- Non-equality join predicates [1].

- Unnecessary outer joins [1].

- Redundant predicates [1].

- Multiple aggregations with DISTINCT.

- Build queries dynamically unless necessary.

- Techniques that lead to full-table scans: functions that don’t use or perform poorly with indexes, wildcards at the beginning of a word.

- Use more attributes/records than needed (particular case: Use * instead of specifying the attributes).

- Using nested views.

- Rely entirely on the code created by wizards and other automation tools.

- UNION unless really needed: use UNION ALL.

- UNIONs instead of condition-based code (e.g. CASE, DECODE) or self-joins.

- Using temporary tables.

- Server side cursors.

- Procedural queries (e.g. loops, cursors) rather than using set-based queries.

- Redundant logic/code.

- Negations on constraints.

- Code facilitating SQL injection: use parameterized objects.

- Hard-coding values.

- Undocumented functionality.

- Use GROUP BY in the final query when it could be used in a sub-query.

- Multiple self-joins/joins to same table instead of GROUP BY.

- Repetitive calls to the same function and same parameters.

- Use constants in ORDER BY clause.

- Create too many versions of the same query.
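
To make one of the items above more concrete, here is a minimal sketch of an expression over a column in a local predicate and of an equivalent range predicate. The table variable and its content are hypothetical, and a table variable will not show a real performance difference, so the example illustrates only the rewriting pattern:

```sql
-- Hypothetical sample data
DECLARE @POHeaders TABLE (PurchaseOrderID int PRIMARY KEY, OrderDate datetime);

INSERT INTO @POHeaders VALUES (10, '20091215'), (11, '20100105'), (12, '20100630'), (13, '20110102');

-- Parameters instead of hard-coded values
DECLARE @StartDate datetime = '20100101';
DECLARE @EndDate datetime = '20110101';

-- To avoid: the function applied to the column typically prevents an index seek on OrderDate
SELECT PurchaseOrderID
FROM @POHeaders
WHERE YEAR(OrderDate) = 2010;

-- Preferred: the column is left untouched and the interval is expressed as a range
SELECT PurchaseOrderID
FROM @POHeaders
WHERE OrderDate >= @StartDate
  AND OrderDate < @EndDate;
```

Both queries return the same POs; on a real table with an index on OrderDate, the second form leaves the optimizer free to use it.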

Note:

Please note that there are situations and situations: a technique not recommended in general could prove to offer better performance than the alternatives (e.g. recursive simulation + temporary table vs. hierarchical self-joins on SQL Server 2000), while for others there are several aspects that need to be considered, for example the trade-off between performance and reusability. Even if most of the database vendors adhere to the ANSI SQL standard, in the end each functionality could be implemented differently and vendors could provide additional functionality, therefore specific best practices could be considered for each functionality/vendor.

References:

[1] IBM (2010) Best Practice - Writing and Tuning Queries for Optimal Performance [link]

[2] Oracle (2009) Cleaning Up PL/SQL Practices, by S. Feuerstein [link]

Resources:

Microsoft TechNet. (2010). SQL Server - Best Practices [link]

27 January 2010

🧭Business Intelligence: Cognos & SQL Server 2008 - The First Report (Test Drive)

Now I was ready to test the tool and create several reports. For this I first needed to create a data source, a connection to an existing database with several tables on which the reports will be based. SQL Server 2008's AdventureWorks database seemed to be adequate for this test; in addition, I know there are many SQL Server professionals already using it for testing or tutorials.

Step 1: Creating the SQL Server account

a. the Login name (e.g. cognosuser)

b. the authentication: because Cognos doesn’t support Windows-based authentication, choose SQL Server authentication, enter the Password and confirm it. By default ‘Enforce password expiration’ is checked, though this option is not needed for the current requirement, so uncheck it.

c. Select the Default database, the database you’re intending to base your reports on (e.g. AdventureWorks)

d. Select the ‘Default Language’ (e.g. English)

Navigate to the ‘User Mapping’ tab and in ‘Users mapped to this login’ choose the databases you would like to give access to (e.g. AdventureWorks). For each database selected you’ll have to select the schemas in scope (e.g. HumanResources, Person, Production, Purchasing and Sales), while in ‘Database role membership for’ select the db_datareader database-level role, then click ‘OK’.

It’s always a good idea to test the new account before attempting to use it from an external application; this allows catching eventual issues early in the process, saving your and others’ time!
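
For those preferring scripts to the Management Studio dialogs, the account from Step 1 could be created and tested roughly as below. This is only a sketch: the password is a placeholder, us_english is assumed to correspond to the ‘English’ default language, the per-schema selection from the dialog is not reproduced, and the test presumes you are allowed to impersonate the login (e.g. as a sysadmin):

```sql
-- Create the login with SQL Server authentication and without password expiration
USE master;
CREATE LOGIN cognosuser
WITH PASSWORD = N'<EnterAStrongPassword>' -- placeholder, replace before running
, DEFAULT_DATABASE = AdventureWorks
, DEFAULT_LANGUAGE = us_english
, CHECK_EXPIRATION = OFF;
GO

-- Map the login to a database user and grant read-only access
USE AdventureWorks;
CREATE USER cognosuser FOR LOGIN cognosuser;
EXEC sp_addrolemember N'db_datareader', N'cognosuser';
GO

-- Quick test: run a query in the security context of the new login
EXECUTE AS LOGIN = 'cognosuser';
SELECT TOP 5 LocationID, Name
FROM Production.Location;
REVERT;
```

If the test query fails, the issue is most probably in the user mapping or the role membership rather than in Cognos, which is easier to troubleshoot at this stage.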

Step 2: Creating a Data Source

Step 3: Creating a Report

Instead of using Report Studio, the standard tool for designing reports, for simple reports or queries Query Studio could be used, an easy-to-use authoring tool in which creating a report comes down to a simple drag and drop. For this you’ll have to open Query Studio from the Launch item found in the top menu, an action that opens a new browser window, and in the next step select the package (actually the data source) you want to use. In ‘Insert Data’ mode select a table from the list (e.g. Location) and drag and drop it into the right section, all of the table’s columns being shown together with a sample set of data.

You could double-click each column and give it a meaningful name; it is good practice to separate the words with spaces, though not really necessary. By clicking on the report’s Title you could change its value and add a Subtitle if necessary. In ‘Change Layout’ you could change the report’s layout by applying different Font, Border and Conditional Styles, much like in Excel, show row numbers by clicking ‘Show row numbers’ under Change Layout/Set Web Page Size, etc. Save the report once you’ve made the changes; the report will look something like the one in the image below:

Step 4: Running the Report

From ‘Run Report’ Menu item you could choose to run the report mainly in three modes – ‘Run with All Data’, ‘Preview with Limited Data’ or ‘Preview with No Data’. Additionally you can export the report to PDF (Portable Document Format), several versions of Excel (2000, 2002 and 2007), CSV (Comma Separated Values) and XML (eXtensible Markup Language).

25 January 2010

🗄️Data Management: Data Quality Dimensions (Part VII: Structuredness)

Data Management Series

In general, the term structured data refers to structures that can be easily perceived or known and that raise no doubt about the structure’s delimitations. Unstructured data refers to textual data and media content (video, sound, images), in which the structural patterns, even if they exist, are hard to discover or are not predefined, while semi-structured data refers to islands of structured data stored with unstructured data, or vice versa.

Data structuredness is important especially when the processing of data with the help of machines is considered, the correct parsing of data being highly dependent on the knowledge about the data structure, either defined beforehand or deduced. The more structured the data and the more evident and standardized the structure, the easier it should be to process the data. Merrill Lynch estimates that 85% of the data in an organization are in unstructured form, this number most probably including semi-structured data too. Making such data available in a structured format requires an important volume of manual work, eventually combined with reliable data/text mining techniques, a fact that considerably reduces the value of such data.

Text, relational, multidimensional, object, graph or XML-based DBMS are in theory the easiest to process, map and integrate, though that might not be as simple as it looks given the different architectures vendors come with and the fact that the structures evolve over time. To bridge the structural and architectural differences, many vendors make it possible to access data over standard interfaces (e.g. ODBC), though there are also systems that provide only proprietary interfaces, making data difficult to obtain in an automated manner. There are also other types of technical issues related mainly to the different data types and data formats, though such issues can be easily overcome.

In the context of Data Quality, the structuredness dimension refers to the degree to which the structure in which the data are stored matches the expectations, i.e. the syntactic set of rules defining it, considered across the whole set of records. Even a minor inconsistency in the structure of a record could lead to processing errors and unexpected behavior. The simplest example is a delimited text file: if any of the characters used to delimit the structure of the file occurs in the data itself, then chances are high that the file will be parsed incorrectly, or that the parsing will fail unless the issues are corrected.
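
A simple check for the delimited file example could look like the sketch below, which assumes the raw lines were already loaded into a staging table (here a hypothetical table variable) and that each record should contain exactly two commas. Lines with a different number of delimiters are candidates for manual review:

```sql
-- Hypothetical staging data: one row per line of a comma-delimited file with three fields
DECLARE @Lines TABLE (LineNumber int PRIMARY KEY, LineText nvarchar(200));

INSERT INTO @Lines VALUES (1, N'1,Bikes,Road')
, (2, N'2,Clothing, Accessories,Mixed') -- the delimiter appears within the data
, (3, N'3,Components,Frames');

DECLARE @ExpectedDelimiters int = 2;

-- The sentinel character is appended because LEN ignores trailing spaces
SELECT DAT.LineNumber
, DAT.LineText
, DAT.DelimiterCount
FROM (
    SELECT LineNumber
    , LineText
    , LEN(LineText + N'|') - LEN(REPLACE(LineText, N',', N'') + N'|') DelimiterCount
    FROM @Lines
   ) DAT
WHERE DAT.DelimiterCount <> @ExpectedDelimiters;
```

The check is only syntactic: it detects records with too many or too few delimiters, but not values that landed in the wrong field while keeping the expected count.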

References:

[1] Barry W Boehm et al (1978) "Characteristics of software quality"

[2] The Register (2006) "Structured data is boring and useless", by D. Nortfolk (link)

[3] P Wood (?) "Semi-structured Data"

23 January 2010

🧊🎡Data Warehousing: ETL (Part I: It's All about Synchronization)

Data Warehousing Series

In a previous post on ETL: SSIS packages vs. SQL code I mentioned that there are three types of synchronization: of scope, business logic and data. The first targets synchronizing the filters used to select the data, the second synchronizing the logic used in data processing, when that is the case, and the third working with the same unaltered copy of the data.

The synchronization of scope is achieved by enforcing the same set of constraints to related data elements with the purpose of keeping the referential integrity between the various data elements. They involve parent-child relations like Purchase orders (PO) Headers/Lines or reference/referenced relations like PO Lines and Products or Vendors. Such relationships can involve datasets coming from distinct systems, usually involving different architecture - e.g., Products or Bill of Materials master data that could come from a Product Management Information (PMI) system.

The synchronization of business logic is usually a consequence of the synchronization of scope and concerns the set of business rules that must be applied in the logic used. For example, one needs to consider Products or Vendors only for the open POs. That would involve the duplication of the logic for open POs across multiple database objects, logic that needs to be synchronized accordingly. One can reference directly the object encapsulating the logic for open POs, though that would create a recursive reference as the logic references the Products and/or Vendors as well. With one or two tricks this can be avoided.
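
One possible way of keeping the scope synchronized, sketched below on hypothetical table variables, is to define the set of open POs only once and to reuse it for all dependent extractions. The definition of an open PO used here (status Pending or Approved) is an assumption made for illustration, and this is not necessarily one of the tricks referred to above:

```sql
-- Hypothetical, simplified source tables
DECLARE @POHeaders TABLE (PurchaseOrderID int PRIMARY KEY, Status tinyint);
DECLARE @POLines TABLE (PurchaseOrderID int, LineNumber int, ProductID int, PRIMARY KEY (PurchaseOrderID, LineNumber));
DECLARE @Products TABLE (ProductID int PRIMARY KEY, ProductName nvarchar(50));

INSERT INTO @POHeaders VALUES (10, 1), (11, 2), (12, 4);
INSERT INTO @POLines VALUES (10, 1, 100), (11, 1, 100), (11, 2, 101), (12, 1, 102);
INSERT INTO @Products VALUES (100, N'Product A'), (101, N'Product B'), (102, N'Product C');

-- The scope is defined in one place only
DECLARE @OpenPOs TABLE (PurchaseOrderID int PRIMARY KEY);

INSERT INTO @OpenPOs
SELECT PurchaseOrderID
FROM @POHeaders
WHERE Status IN (1, 2); -- assumption: 1 = Pending, 2 = Approved are considered open

-- PO Lines in scope
SELECT POL.PurchaseOrderID, POL.LineNumber, POL.ProductID
FROM @POLines POL
     JOIN @OpenPOs OPO
       ON POL.PurchaseOrderID = OPO.PurchaseOrderID;

-- Products in scope, derived from the same set of open POs
SELECT DISTINCT PRD.ProductID, PRD.ProductName
FROM @Products PRD
     JOIN @POLines POL
       ON PRD.ProductID = POL.ProductID
     JOIN @OpenPOs OPO
       ON POL.PurchaseOrderID = OPO.PurchaseOrderID;
```

If the definition of an open PO changes, only the statement populating the scope needs to be modified, the dependent extractions remaining in sync.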

One should aim to cover all three types of synchronization even if it complicates the design of the solutions, as it's generally recommended to apply defensive architecting/programming. The increase in complexity is relative if one considers the effort needed for troubleshooting, plumbing or even the redesign needed to fix the issues.

About Me

- Adrian

- Koeln, NRW, Germany

- IT Professional with more than 25 years experience in IT in the area of full life-cycle of Web/Desktop/Database Applications Development, Software Engineering, Consultancy, Data Management, Data Quality, Data Migrations, Reporting, ERP implementations & support, Team/Project/IT Management, etc.