A Software Engineer and data professional's blog on SQL, data, databases, data architectures, data management, programming, Software Engineering, Project Management, ERP implementation and other IT related topics.

Pages

- 🏠Home

- 🗃️Definitions

- 🔢SQL Server

- 🎞️SQL Server: VoD

- 🏭Fabric

- 🎞️Fabric: VoD

- ⚡Power BI

- 🎞️Power BI: VoD

- 📚Data

- 📚Engineering

- 📚Management

- 📚SQL Server

- 🎞️D365: VoD

- 📚Systems Thinking

- ✂...Quotes

- 🧾D365: GL

- 💸D365: AP

- 💰D365: AR

- 🏠D365: FA

- 👥D365: HR

- ⛓️D365: SCM

- 🔤Acronyms

- 🪢Experts

- 🔠Dataviz & BI

- 🔠D365

- 🔠Fabric

- 🔠Engineering

- 🔠Management

- 🔡Glossary

- 🌐Resources

- 🏺Dataviz

- 🗺️Social

- 📅Events

- ℹ️ About

30 September 2010

🚧Project Management: Project Management [PM] (Definitions)

29 September 2010

💠🛠️SQL Server: Administration (Part VI: Troubleshooting Who is Active)

30 August 2010

📊Business Intelligence: Scorecards (Definitions)

"Online, real time reporting to monitor performance against targets." (Paulraj Ponniah, "Data Warehousing Fundamentals for IT Professionals", 2010)

"An approach to rating risk used in many areas of risk management. A scorecard is most commonly used for rating customer risk (often individuals) as the probability of default in financial risk management." (Annetta Cortez & Bob Yehling, "The Complete Idiot's Guide To Risk Management", 2010)

"An application that helps organizations measure and align the strategic and tactical aspects of their businesses, comparing organizational and individual performance to goals and targets. (Laura Reeves, A Manager's Guide to Data Warehousing, 2009)

"A collection of information - organized in a single view - that tracks an organization's progress toward a specific goal." (Ken Withee, "Microsoft® Business Intelligence For Dummies®", 2010)

"Performance management tools that help managers track performance against strategic goals" (Daniel Linstedt & W H Inmon, "Data Architecture: A Primer for the Data Scientist", 2014)

"Scorecards are used by enterprises to measure the progress against the enterprise strategy. Scorecards represent performance trends over a period of time such as monthly/quarterly/yearly; whereas dashboards indicate the status of a performance metric at a given point in time. In contrast, dashboards are used to represent actual granular data, they contain data that is more recent than that of scorecards." (Saumya Chaki, "Enterprise Information Management in Practice", 2015)

"A graphical representation of valid values for a source column or output of a rule in profile results. Use scorecards to measure data quality progress." (Informatica)

"A representation of summarized performance measurements representing progress towards the implementation of long-term goals. A scorecard provides static measurements of performance over or at the end of a defined interval." (ISQTB)

"A scorecard is a visual display of the most important information needed to achieve one or more objectives, consolidated and arranged on a single screen so the information can be monitored at a glance. Unlike dashboards that display actual values of metrics, scorecards typically display the gap between actual and target values for a smaller number of key performance indicators." (Intrafocus)

15 August 2010

Data Security: SQL Injection I - Introduction

If you are working in IT, most probably you’ve heard already about SQL Injection, if not then might be it’s a good idea to ask your colleagues and eventually your IT manager if your company has any policies related to it. If you are working for a software vendor or a consultancy company then SQL Injection countermeasure techniques might be quite well positioned in the list of best practices in what concerns the development of Web/Desktop Applications, Web Services or database-related logic adopted by your company. If you are working for a company, other than the two mentioned above, and have various software projects on the role or already in house, then most probably you’ll have to ask if the software vendors you are working with have took into consideration the SQL Injection threats and proved their solutions against them. On contrary, if you have nothing to do with IT at all, it might still be a good idea to ask your IT department if they have anything in place related to SQL Injection – Security Policy, security best practices, etc.

Definition

Wikipedia defines SQL Injection as “a code injection technique that exploits a security vulnerability occurring in the database layer of an application” [3], the code injection being defined as “the exploitation of a computer bug that is caused by processing invalid data” [4]. For a programmer the definition is acceptable, though for other type of professionals it might not be so clear what’s about, especially when they are not familiar with IT terminology. I find more clear the definition provided by J. Clarke et. al, who in his book SQL Injection Attacks and Defense, defines SQL Injection as the vulnerability that results when you give an attacker the ability to influence the Structured Query Language (SQL) queries that an application passes to a back-end database” [2]. I will slightly modify the last definition and say that the SQL injection is a security vulnerability residing in the possibility to alter the intended behavior of the SQL Queries passed to the database.

Some Background

At the beginning of our century, with the increase importance of Web Applications whose availability over WAN/Internet (networks) brought new security issues, the SQL Injection became a really hot topic given the damages such techniques could do to an application, with just a few tricks the “hacker” having the possibility to enter in the application and even in the machine hosting the database used, entering thus in the possession of sensitive information, and above all having the possibility of damaging the database. J. Clarke et. al remarks that the first connection between web applications and SQL injection is widely accredited to Rain Forest Puppy, who in an article titled “NT Web Technology Vulnerabilities” (see “ODBC and MS SQL server 6.5” section) written in 1998 for Phrack, an e-zine written by and for hackers [2], was describing the behavior specific to SQL Injection in relation to MS SQL Server 6.5.

I remember when my boss break us the news that we have to protect urgently our applications against SQL Injection, having to redesign some of the database objects and components in order to protect our applications against such techniques. I was then in my first or second year of professional experience, so the topic was new and quite intriguing not only for myself but also for my colleagues, some of them having a few more years of professional programming experience that I did and, I hope I’m not mistaking, none (or few) of them actually have heard about it. It was interesting to check how simple techniques could do so much damage. At those times there were few articles on SQL Injection and specific countermeasure techniques, not to mention best practices, so we were kind of groping in the dark in finding a countermeasure to the problem.

State of Art

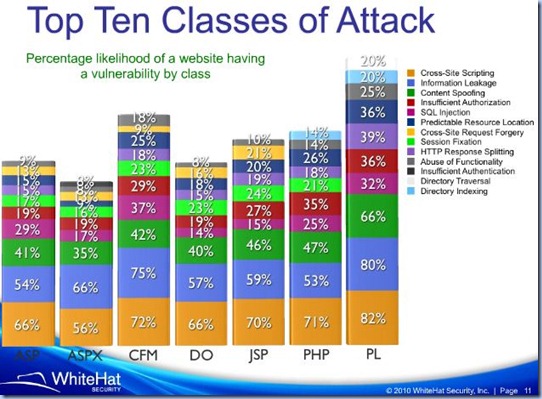

Since then, the number of search engines hits on the topic is quite impressive, many professionals approaching the problem in their way, Vendors started to design their solutions and make aware programmers on best practices in order to minimize this type of security threat, books were written on this topic, the awareness increased between developers and other type of IT professionals. Even if considerable effort has been made into this direction, and the topic appears often on the blogs, there are still many web sites not designed to address SQL Injection concerns. In 2007, The WhiteHat Security, placed SQL Injection on 5th position in top of vulnerabilities, estimating that 1 out of 5 web sites is vulnerable to SQL Injection [1]. In 8th Web Security Report based on 2009 data provided by WhiteHat Security[5], and as it seems also in 9th report [6], SQL Injection remains on the same position, what’s interesting to remark is the split per scripting technology provided in [6]:

Resources

If the topic made you curious, you could find out more with just a simple search on the Web. There are many professionals who wrote on this topic, however it’s a good idea to start directly with the resources provided by the RDBMS vendors, for example Microsoft through its Security Research & Defense blog, in SQL Injection Attacks post has an interesting list of resources on this topic. A nice document on ‘How to write SQL injection proof PL/SQL’ comes from Oracle, an interesting presentation on ‘SQL Injection Myths and Fallacies’ was made at MySQL Conference & Expo, etc.

References:

[1] WhiteHat Security. [2007]. Website Security Statistics Report. [Online] Available from: http://www.whitehatsec.com/home/assets/WPStatsreport_100107.pdf (Accessed: 15 August 2010)

[2] J.Clarke et. al (2009). SQL Injection Attacks and Defense. Elsevier. ISBN: 978-1-59749-424-3

[3] Wikipedia. (2010). SQL Injection. [Online] Available from: http://en.wikipedia.org/wiki/Sql_injection (Accessed: 15 August 2010)

[4] Wikipedia. (2010). Code Injection. [Online] Available from: http://en.wikipedia.org/wiki/Code_injection (Accessed: 15 August 2010)

[5] WhiteHat Security. [2009]. Website Security Statistic Report, 8th Ed. [Online] Available from: http://www.whitehatsec.com/home/assets/WPstats_fall09_8th.pdf (Accessed: 15 August 2010)

[6] WhiteHat Security. [2010]. Website Security Statistic Report, 9th Ed. [Online] Available from: http://www.slideshare.net/jeremiahgrossman/whitehat-security-9th-website-security-statistics-report-3995771 (Accessed: 15 August 2010)

13 August 2010

💎SQL Reloaded: Temporary Tables vs. Table Variables and TempDB

-- creating the temporary tables CREATE TABLE #temp ( id int NOT NULL , value nvarchar(50) NOT NULL) -- retrieving the metadata SELECT t.name table_name , s.name column_name , t.type , t.type_desc , t.create_date FROM tempdb.sys.tables t JOIN tempdb.sys.columns s ON t.object_id = s.object_id WHERE t.name LIKE '%temp%' -- dropping the temporary table -- DROP TABLE #temp -- see the 2nd note!

Note:

By changing the width of table_name column could be seen that object’s name corresponding to the temporary tables is a combination from table’s name and, according to K. Henderson, the number designating the connection that owns the table.

If the temporary table is stored in tempdb and metadata are stored about it, what’s happening with a temporary table? Here’s the answer:

-- creating the table variable DECLARE @temp TABLE( id int NOT NULL , value nvarchar(50) NOT NULL) -- retrieving the metadata SELECT t.name table_name , s.name column_name , t.type , t.type_desc , t.create_date FROM tempdb.sys.tables t JOIN tempdb.sys.columns s ON t.object_id = s.object_id WHERE t.name LIKE '%#%' AND DateDiff(ss, t.create_date, GetDate()) BETWEEN -2 AND 2

As can be seen I had to put a little more effort in order to see a table variable’s metadata. As there is no name that could be used in order to identify the table, as object’s name is stored as a hex number, I had to restrain the list of tables by using the timestamp. Excepting the name, the metadata stored about the two types of tables are identical for the same table definition. Actually their definition is similar with the one of a “standard” table:

-- creating a "standard" table CREATE TABLE temp( id int NOT NULL , value nvarchar(50) NOT NULL) -- retrieving the metadata SELECT t.name table_name , s.name column_name , t.type , t.type_desc , t.create_date FROM sys.tables t JOIN sys.columns s ON t.object_id = s.object_id WHERE t.name LIKE '%temp%' -- dropping the table -- DROP TABLE temp -- see the 2nd note!

Notes:

(1) For exemplification I used a restrained list of attributes, when comparing the various table’s metadata could be used instead a SELECT * statement. The above examples reflect also the differences in declaring the three types of tables.

[1] Haripriya SB (2024) Do NOT drop #temp tables (link)

10 August 2010

💎SQL Server: Information Schema Views 🆕

SQL Server 2008 makes available the Information Schema Views through INFORMATION_SCHEMA schema available in each database storing information about all database objects contained in the respective database. In order to retrieve the views available under the INFORMATION_SCHEMA schema and their definitions, could be used the following query:

-- retrieving objects' definition for INFORMATION_SCHEMA SELECT s.name [schema_name] , o.name [object_name] , sm.definition FROM sys.all_sql_modules sm JOIN sys.all_objects o ON sm.object_id = o.object_id JOIN sys.schemas s ON o.schema_id = s.schema_id WHERE s.name = 'INFORMATION_SCHEMA' ORDER BY o.name

Unfortunately there are no descriptions stored together with the database objects, and even if most of the view names are self-describing, there are a few cases in which more information are required. Here is the list again with the views and their descriptions together with the link to the MSDN section that gives more information on view’s definition:

| No | View | Description |

1.

| CHECK_CONSTRAINTS | Returns one row for each CHECK constraint |

| 2. | COLUMN_DOMAIN_USAGE | Returns one row for each column that has an alias data type |

| 3. | COLUMN_PRIVILEGES | Returns one row for each column that has a privilege that is either granted to or granted by |

| 4. | COLUMNS | Returns one row for each column |

| 5. | CONSTRAINT_COLUMN_USAGE | Returns one row for each column that has a constraint defined on it |

| 6. | CONSTRAINT_TABLE_USAGE | Returns one row for each table that has a constraint defined on it |

| 7. | DOMAIN_CONSTRAINTS | Returns one row for each alias data type that has a rule bound to it |

| 8. | DOMAINS | Returns one row for each alias data type |

| 9. | KEY_COLUMN_USAGE | Returns one row for each column that is constrained as a key |

| 10. | PARAMETERS | Returns one row for each parameter of a user-defined function or stored procedure |

| 11. | REFERENTIAL_CONSTRAINTS | Returns one row for each FOREIGN KEY constraint |

| 12. | ROUTINES | Returns one row for each stored procedure and function |

| 13. | ROUTINE_COLUMNS | Returns one row for each column returned by table-valued functions |

| 14. | SCHEMATA | Returns one row for each schema |

| 15. | TABLE_CONSTRAINTS | Returns one row for each table constraint |

| 16. | TABLE_PRIVILEGES | Returns one row for each table privilege that is granted to or granted by |

| 17. | TABLES | Returns one row for each table |

| 18. | VIEW_COLUMN_USAGE | Returns one row for each column that is used in a view definition |

| 19. | VIEW_TABLE_USAGE | Returns one row for each table that is used in a view |

| 20. | VIEWS | Returns one row for views |

As stated above, the information schema is available for each database, therefore the information schema views return only the records available in the current database to which the current user has access.

Notes:

1. Above their informative character, the INFORMATION_SCHEMA definitions could be used in order to understand more about the systems tables used as source for the respective views and the relations between them.

2. Microsoft recommends not to use the INFORMATION_SCHEMA views in order to determine the schema of an object and use instead the sys.objects system catalog view [2], warning that appears in several pages from information schema documentation.

3. As it seems the SQL Server 2008 doesn’t implement all the views defined in information schema, for example ASSERTIONS, CHARACTER_SETS, COLLATIONS, TRANSLATIONS, SQL_LANGUAGES, etc. It’s also interesting to note that the respective information are available in .Net through the OleDbSchemaGuid class (see also this).

References:

[1] ISO/IEC. (1992) ISO/IEC 9075:1992, Database Language SQL. [Online] Available from: http://www.contrib.andrew.cmu.edu/~shadow/sql/sql1992.txt (Accessed: 8 August 2010)

[2] MSDN. (2010). Querying the SQL Server System Catalog. [Online] Available from: http://msdn.microsoft.com/en-us/library/ms189082.aspx (Accessed: 9 August 2010)

07 August 2010

Database Design: Object Dependencies (Part I - An Introduction)

|

| Data Management Series |

Introduction

Around the various data islands existing in the blue and the models that support them are created a whole range of database objects (views, stored procedures, user-defined functions) and other type of non-database objects (classes, strong-typed datasets, reports, ad-hoc queries, etc.) With each reference to a database object is created a database dependency, or simply dependency, between the database object and the other objects that reference it, thus any change occurring in a database object could impact the various referents resulting in broken links, invalid calls or any type of error that might brake the calling applications or the isolated pieces of code (e.g. reports, ad-hoc queries, SQL scripts-based logic).

Tracking Database Dependencies

Many organizations use to document such dependencies in data dictionaries or any other type of similar documentation, one of the reasons being the easier identification of the objects that are impacted by the changes occurring in the database structure. One of the problems is that the documentation is often application-oriented, targeting thus the application using the data, and if there are multiple applications consuming the same data, then it’s not so easy to aggregate all the dependencies especially when they are stored in Excel files, dispersed documents, repositories, with (complicated) permissions access,

That’s one of the reasons for which an organization might consider storing in the source database as much of the business logic related directly to data. Encapsulating queries and procedural logic in views, stored procedures, user-defined functions or any other similar objects seems a good idea in order to reduce the maintenance of code, hide the complexity of a database from the consumers (users, services, web/desktop applications, etc.), and from several other considerations.

The most important of these considerations is the fact that databases store not only the respective objects and statistics about them, but could store also the dependencies between them, making easier the impact analysis or any type of analysis based on the dependency between objects. It’s at the discretion of developers, architects or any other type of professional with decision power on whether they want to take advantage of such functionality.

Foreign Key Constraints

The simplest and most natural dependence information to store are the primary-foreign key relations implemented in the form of a constraint. The foreign key constraints, as they are called, identify and enforce the relationship between two tables; “identify” because it makes the relation explicit, and “enforce” because it checks the validity of foreign-primary key values pairs when records are inserted, updated or deleted, enforcing thus the referential integrity of the database.

When a deletion is attempted on a record, the database engine checks if there is any dependent record (in the same or other table) that references the respective record, and if such a constraint is defined, the deletion is aborted raising also an error message. A check is performed also when a record is inserted or updated in the child table, the respective actions being aborted if the foreign key reference is not valid.

Conversely, a foreign key constraint could bring additional complexity during migration tasks, though with a little effort and a good architecture the overhead is minimized. In addition the foreign key constraints could be used by third party tools to provide some degree of automation when joining tables or for other purposes.

Object Dependencies

More complex dependency regard the dependency between database objects – views, stored procedures, user-defined functions or tables. In some cases is enough to see that there is a dependency between two objects, though in more complex situations would be useful to know which specific attribute is used from the dependency objects, especially when using the metadata for automation tasks. By creating the dependency tree, the tree of objects resulted from the dependency between the various database objects, it’s possible to provide more accurate impact assessments.

So until now were considered the dependencies between database objects, though, as highlighted above, there are many other objects stored outside of a database and referencing database objects. It makes sense to have a global repository in which to store information about dependencies, preferably in a relational database which could be easily interrogated using simple flat or hierarchical queries.

Metadata

The object definitions, statistics, dependencies and other type of information stored about data or the structures or objects related to data are encompassed under the denomination of metadata, which in common understanding is defined as “data about data”. The metadata could be used not only as input for impact analysis but also for automating business logic, functionality that opens new perspectives in development. Einstein’s believe that “problems cannot be solved by the same level of thinking that created them” is reflected in the world of databases by the fact that the metadata stored about database objects help to solved problems related to the objects and the data the databases contain. For example during a data migration project the two database structures could be mapped at table and attribute level, being possible thus to create validation rules in an automated manner.

Even if databases come with a predefined structure of storing metadata, the various solutions developed on top of such databases require additional metadata to be stored, and in theory it would be great if databases’ metadata structures could be extended for this purposes, though given the risks involved in altering such structures lead to the existence of parallel metadata repositories, in which an important percent of the database’s metadata are duplicated.

Beyond Database Dependencies

Talking about data mappings, integration projects and integration functionality/features rely heavily on data mappings, they involving a degree of automation too. Integration of data doesn’t necessarily occur only at application level, in the context of web’s evolution, the tendency is to link and integrate the various data islands (see linkeddata.org), especially the ones with public character, and provide thus cross-database functionality. Many of the problems such an approach implies are solved at metadata level, new metadata and dependency levels being required for this purpose.

Created: Aug-2010, Last Reviewed: Mar-2024

01 August 2010

💠🛠️SQL Server: Administration Part V: Resources for Troubleshooting I)

Last week the SQL Server troubleshooting-related topics came again on my table, and it’s actually quite an important topic. As I have disparate links I thought it makes sense to bring the links together in a post.

When searching for information it’s always a good idea to start with the documentation or the support site, in what concerns SQL Server troubleshooting Microsoft has several valuable resources on what concerns performance issues, application performance, ad-hoc queries, blocking, stored procedure recompilation, clusters, etc. Even if outdated, of interest could be also the Improving .Net Application Performance and Scalability resource, with a chapter on SQL Server Performance and ADO.NET. Other resources could be found in Technet, for example Troubleshooting Performance Problems in SQL Server 2005, a similar document being available on SQL Server 2008 from MSDN. As a way to avoid the need for troubleshooting, it makes sense to check also the SQL Server Best Practices.

There are several good books on this topic I heartily recommend, the first on my list is the book of C. Bolton et al, Professional SQL Server 2008 Internals and Troubleshooting. What’s interesting to note is that the book is available to browse and read on Scribd as part of a eBook Deal with Wiley, deal that includes several other programming and non-programming books (See Wiley’s profile on Scribd). The code for several chapters from the C. Bolton's book is available on Wiley’s website. Of interest are especially the chapters on DMV (dynamic management views) because performance troubleshooting often resumes in searching for hints in SQL Server internal tables. Another book, actually booklet, on DMV comes from Redgate, the SQL Server DMV Starter Pack, the pack being available also with a list of 30 sample scripts downloadable together as zip.

Talking about scripts, there are many scripts available on DMV from Technet’s Script Repository. If you want to get an overview of your SQL Server configuration and health, you could check Diagnostic Information Queries available from Glen Berry’s blog for SQL Server 2005, respectively SQL Server 2008 and 2008R2.

31 July 2010

💎SQL Reloaded: Self-Joins and Denormalized Data Loading in Normalized Models

Note:

Header data it’s improper said because an entity could contain more than 2 levels of data, for example the Purchase Orders (POs) in an ERP system could be split in PO Headers, Lines, Shipments and Distributions, thus a (denormalized) extract based on the respective data at Distribution level will contain repeating data from the higher levels.

So for this post I needed to find an entity that contains a parent-child or header-lines structure. Actually it’s not difficult to find such an example, the world of ERP systems is full of such examples – POs, Invoices, Customer Orders, Receipts, Payments, to mention some of the important transactional data, or Customer, Vendors and even the Items, when considering the master data. The difficulty is to simplify the example to a level that could be easier understood also by people who had less tangency with ERP systems or database models. For this I will consider the Receipts received when paying the goods in a (super-)market, if we take such a bill we could typically see the Receipt Number, the name of the Vendor from which we purchased was made, the Receipt Date, the Date when the purchase was made, the Items purchased together with the Quantity and Price. Such information could be easily entered in Excel and later loaded in a denormalized table, or enter them directly in the respective denormalized table:

-- Receipts denormalized table CREATE TABLE [dbo].[Receipts]( [ReceiptNumber] [nvarchar](50) NULL, [Vendor] [nvarchar](100) NULL, [ReceiptDate] [smalldatetime] NULL, [CurrencyCode] [nvarchar](3) NULL, [ItemNumber] [nvarchar] (50) NULL, [Quantity] [decimal](12, 3) NULL, [Price] [numeric](12, 2) NULL ) ON [PRIMARY] -- inserting test data INSERT INTO dbo.Receipts VALUES ('012034', 'TOOM', '2010-06-23', 'EUR', 'KABANOS PARIKA', 1, 2.19) , ('012034', 'TOOM', '2010-06-23', 'EUR', 'ZITRONE', 1, 0.79) , ('012034', 'TOOM', '2010-06-23', 'EUR', 'BREAKFAST BAKON', 1, 1.59) , ('7899998', 'KAUFHOFF', '2010-06-22', 'EUR', 'PILLOW', 1, 23.99) , ('7899998', 'KAUFHOFF', '2010-06-22', 'EUR', 'BED SHEET', 2, 11.99) -- checking the data SELECT * FROM dbo.Receipts

Supposing we have the above data and that we would like to load them in a normalized structure formed from the Header information – Receipt Number, Vendor Name and Receipt Date, and Line information – Item Number, Quantity and Price:

-- Receipt Headers (normalized) CREATE TABLE [dbo].[ReceiptHeaders]( [ReceiptHeaderID] [int]IDENTITY(1,1) NOT NULL, [ReceiptNumber] [nvarchar](50) NULL, [Vendor] [nvarchar](100) NULL, [ReceiptDate] [smalldatetime]NULL, [CurrencyCode] [nvarchar](3) NULL ) ON [PRIMARY] -- Receipt Lines (normalized)

CREATE TABLE [dbo].[ReceiptLines]( [ReceiptLineID] [int]IDENTITY(1,1) NOT NULL, [ReceiptHeaderID] int NOT NULL, [ItemNumber] [nvarchar] (50) NULL, [Quantity] [decimal] (12, 3) NULL, [Price] [numeric](12, 2) NULL ) ON [PRIMARY]

In order to load the denormalized data in a normalized structure we could write two queries, the first populates the ReceiptHeaders table, for this needing to select the distinct header attributes that make the header, while the second populates the ReceiptLines table:

-- inserting the Receipt Header data INSERT INTO dbo.ReceiptHeaders SELECT DISTINCT R.ReceiptNumber , R.Vendor , R.ReceiptDate , R.CurrencyCode FROM dbo.Receipts R -- inserting the Receipt Lines data

INSERT INTO dbo.ReceiptLines SELECT RH.ReceiptHeaderID , R.ItemNumber , SUM(R.Quantity) Quantity , R.Price FROM dbo.Receipts R JOIN dbo.ReceiptHeaders RH ON R.ReceiptNumber = RH.ReceiptNumber AND R.Vendor = RH.Vendor GROUP BY RH.ReceiptHeaderID , R.ItemNumber , R.Price

As can be seen from the second query, the Receipts table was joined with the ReceiptHeaders in order to retrieve the corresponding Header information for each line record. For this action to be possible we need an attribute or combination of attributes unique across the header data, in this case the ReceiptNumber in combination with the Vendor Name. If no such unique combination exists then the match between header and line data is not possible without resulting duplicated data, in such scenario it’s recommended to clean the data before loading them, for example by introducing an attribute that makes the combination unique. The same problem of uniqueness could be applied to the lines too, needing to be possible to identify uniquely a line in the source dataset. This could be done for example by introducing a Line Number in the source dataset or, as in this case, in case there are multiple lines with the same information then we could aggregate the quantities for the respective lines, the Price being moved in the GROUP BY clause as in theory the products with the same Item Number bought at the same time have the same price (that doesn’t necessarily happen in reality though for our exemplification will do).

After inserting the data in the normalized model it makes sense to check the logic by comparing the data inserted against the initial dataset. It’s always a good idea to do that, in this way could be trapped for example the errors in logic. In Excel for SQL Developers – Part IV: Differences Between Two Datasets I shown how the query for comparing two datasets could be created in a semiautomatic manner and shown also the resulting query. A similar query could be written also in this case, for this purpose being useful to create a view which denormalizes our structure:

-- Receipts View CREATE VIEW dbo.vReceipts AS SELECT RL.ReceiptLineID , RL.ReceiptHeaderID , RH.ReceiptNumber , RH.Vendor , RH.ReceiptDate , RH.CurrencyCode , RL.ItemNumber , RL.Quantity , RL.Price FROM dbo.ReceiptLines RL JOIN dbo.ReceiptHeaders RH ON RL.ReceiptHeaderID = RH.ReceiptHeaderID -- testing the view & updates SELECT * FROM dbo.vReceipts

Until now we made it to load the data from a denormalized structure but no trace of a self-join! In many cases writing queries similar with the above ones is enough, though there are many cases when is needed to load the data successively, either incrementally or complete datasets. In both situations we could deal with data already loaded, so we have to avoid entering duplicates. Now it comes the self join into play, because in both insert queries we have to remove the records already loaded. Even if we deal with incremental data that form partitions (any record is provided only once) it’s safer and recommended to check for possible records provided again. So, we’ll have to modify the above two inserts to ignore the records already loaded:

-- inserting the Receipt Header data (with checking for loaded data) INSERT INTO dbo.ReceiptHeaders SELECT DISTINCT R.ReceiptNumber , R.Vendor , R.ReceiptDate , R.CurrencyCode FROM dbo.Receipts R LEFT JOIN dbo.ReceiptHeaders RH ON R.ReceiptNumber = RH.ReceiptNumber AND R.Vendor = RH.Vendor WHERE RH.ReceiptNumber IS NULL -- inserting the Receipt Lines data (with checking for loaded data) INSERT INTO dbo.ReceiptLines SELECT RH.ReceiptHeaderID , R.ItemNumber , SUM(R.Quantity) Quantity , R.Price FROM dbo.Receipts R JOIN dbo.ReceiptHeaders RH ON R.ReceiptNumber = RH.ReceiptNumber AND R.Vendor = RH.Vendor LEFT JOIN dbo.vReceipts VR ON R.ReceiptNumber = VR.ReceiptNumber AND R.Vendor = RH.Vendor AND R.ItemNumber = VR.ItemNumber WHERE VR.ReceiptNumber IS NULL GROUP BY RH.ReceiptHeaderID , R.ItemNumber , R.Price

I hope the queries are easy to be understood, if not then might be a good idea to check the posts on left joins. In order to test the queries let’s insert new data in the Receipts table:

-- inserting new test data INSERT INTO dbo.Receipts VALUES ('012455', 'TOOM', '2010-07-25', 'EUR', 'KABANOS PARIKA', 1, 2.20) , ('012455', 'TOOM', '2010-07-25', 'EUR', 'ZITRONE', 1, 0.79)

After running the three inserts the same data should be available in input denormalized and normalized structures:

-- testing the updates SELECT * FROM dbo.vReceipts

Notes:

In this post wasn’t considered the case in which the data are modified between loads, thus excepting the above inserts must be written also two update statements that should reflect the changes occurred in loaded data. I will try to approach this subject in another post.

#️⃣Software Engineering: Programming (Part IV: Believe and Not Doubt?!)

|

| Software Engineering Series |

Please note that I’m saying “not following blindly”, this doesn’t mean that you shouldn’t listen and follow the advices of other professionals, on contrary, I recommend to sip their knowledge and words of wisdom, though try to understand the reasons behind the techniques used, primarily try to understand the benefits and downsides of each technique or feature, understand why, how, when, where, who and by what means. Without understanding these aspects, the information presented can’t rise to the level of knowledge and therefore it won’t reach the potential value it might have for you and other professionals.

Don’t be confused of the various contradictions and interpretations met in the world, the truth is relative, and you’ll often hear experts saying simply “it depends…” - is just a syntagma to highlight the complexity of things, the dependence of the solution on the various parameters. Even if you don’t agree with others’ perspective be indulgent with them and yourself, there are various opinions/beliefs, balance their points of view and yours, somewhere in the middle is the true…

30 July 2010

💎SQL Reloaded: Self-Join in Update Query II

--Employee table's script

CREATE TABLE [dbo].[Employees](

[EmployeeID] [int] NOT NULL

, [ManagerID] [int] NULL

, [Name] [nvarchar] (50) NULL

, [Performance] [numeric] (5, 3) NULL

, [TrainingHours] [numeric] (5, 2) NULL )

ON [PRIMARY]

-- inserting the test data

INSERT INTO dbo.Employees

VALUES (1, NULL, 'Joe', NULL, 10)

, (2, 1, 'Jack', .65, NULL)

, (3, 1, 'Mary', .45, NULL)

, (4, 1, 'Ross', .54, NULL)

, (5, 1, 'Scott', .48, NULL)

, (6, NULL, 'Jane', NULL, 15)

, (7, 6, 'Sam', .50, NULL)

, (8, 6, 'Ron', .45, NULL)

In order to calculate Manager’s performance we could use a query similar with the following, of course the formula could be more complicated and not a simple average:

-- updating Manager's Performance

UPDATE dbo.Employees

SET Performance = EMP.Performance

FROM ( -- inline view

SELECT ManagerID

, AVG(Performance) Performance

FROM dbo.Employees

WHERE ManagerID IS NOT NULL

GROUP BY ManagerID

) EMP

WHERE dbo.Employees.ManagerID IS NULL

AND dbo.Employees.EmployeeID = EMP.ManagerID

Let’s check the updates and their correctitude:

-- Checking updated data SELECT * FROM dbo.Employees WHERE ManagerID IS NULL -- Verifying output SELECT ManagerID , AVG(Performance) Performance FROM dbo.Employees WHERE ManagerID IS NOT NULL GROUP BY ManagerID

Note:

The average needs to be calculated in the inline view. In case are retrieved more records for each record from the updated table, the query will still work though the result is “unpredictable”:

-- updating Manager's Performance w/o aggregates UPDATE dbo.Employees SET Performance = EMP.Performance FROM ( -- inline view SELECT ManagerID , Performance FROM dbo.Employees WHERE ManagerID IS NOT NULL ) EMP WHERE dbo.Employees.ManagerID IS NULL AND dbo.Employees.EmployeeID = EMP.ManagerID

The reverse update based on the number of hours of training could be written as follows:

-- updating Employees' Training Hours UPDATE dbo.Employees SET TrainingHours = 0.75 * EMP.TrainingHours FROM ( -- inline view SELECT EmployeeID , TrainingHours FROM dbo.Employees WHERE ManagerID IS NULL ) EMP WHERE dbo.Employees.ManagerID IS NOT NULL AND dbo.Employees.ManagerID = EMP.EmployeeID -- Checking updated data SELECT * FROM dbo.Employees WHERE ManagerID IS NOT NULL -- Verifying output SELECT EmployeeID , 0.75 * TrainingHours TrainingHours FROM dbo.Employees WHERE ManagerID IS NULL

About Me

- Adrian

- Koeln, NRW, Germany

- IT Professional with more than 25 years experience in IT in the area of full life-cycle of Web/Desktop/Database Applications Development, Software Engineering, Consultancy, Data Management, Data Quality, Data Migrations, Reporting, ERP implementations & support, Team/Project/IT Management, etc.