|

| ERP Systems |

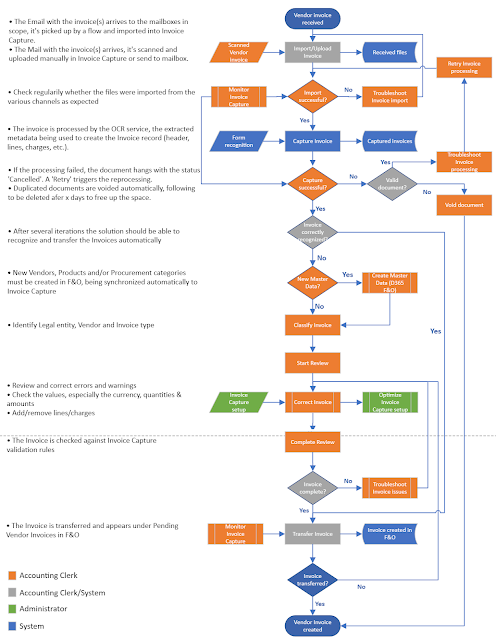

When implementing the Invoice Capture process, there are several setup areas that need to be considered into the Power App (Channels & Mapping Rules), some that need to be done in Dynamics 365 for Finance and Operations (D365 F&O) and impact the integration with the Power App (Expense types/Procurement categories, Financial dimensions, Approval workflow), respectively setup that concerns only D365 F&O but still important. In addition, there are also overlapping features like Recurring Vendor Invoice Templates and E-Invoicing.

This post focuses on the first two types of setup areas, the rest following in a second post.

Channels

In the digitalization era, it's expected that most of the Vendor invoices are received by email or an integrated way (see e-Invoicing). However there can be also several exceptions (e.g. small companies operating mostly offline).

In Invoice Capture can be defined one or more channels for Outlook, SharePoint or OneDrive on which Invoices can be imported in the App. In a common scenario, depending on the number of mailboxes intended for use, one or more channels can be set up for Outlook. Power App listens on the respective channels for incoming emails in the Inbox, in a first step processes the emails by importing all the attachments (including signature images), while in a second step processes all the documents that look like an Invoice (including Receipts) and extracts the metadata to create the Invoice record. The Invoices received in hard-paper format can be scanned and sent to one of the internal mailboxes, respectively imported manually in Invoice Capture.

More likely, there will be also a backlog that can be imported via SharePoint or OneDrive, which is more convenient than resending the backlog by email. In the end it should make no difference for the process which channels is used as long as the Invoices are processed in a timely manner. It should make also no difference if for example in UAT were used other channels than in Production. For testing purposes it might be advantageous to have more control over which Invoices are processed, while the UAT could follow the same setup as Prod, which is generally recommended.

Mapping Rules

The mapping rules allow on one side to set default values based on a matching string checked against several attributes (e.g. Company name, Address or Tax registration number for Legal entity, Item number for Item, Item description for Expense type). Secondly, they allow to also define a configuration for a rule, which defines what fields are mandatory, respectively which Invoice types are supported, etc.

The mapping rules will not cover all scenarios, though it's enough if they cover a good percentage from the most common cases. Therefore, over time they are also good candidates for further optimization. Moreover, because Invoice Capture remembers the values used before for an instance of the same Invoice, the mapping rules will be considered only for the first occurrence of the respective Invoice or whatever is new in its processing.

If a channel was defined for each Legal entity, this seems to make obsolete the definition of mapping rules for it. Conversely, if the number of manual uploads is not neglectable, it still makes sense to define a mapping rule.

Mapping rules for the Expense type seem to work well when Items' descriptions are general enough to include certain words (e.g. licenses, utilities).

One can define mapping rules also for the Vendor accounts and Items, though it's questionable whether the effort makes sense as long as the internal Vendor names and Product numbers don't deviate from the ones used by the Vendor itself.

Expense Types

Invoice Capture requires that either the Item or the Expense type are provided on the line. For PO-based invoices, an Item should be available. Cost invoices can have Items as well and they can be used on the line, though from the point of view of the setup it might be easier to use Expense types. It's the question whether the information loss has any major impact on the business. There are also cases in which the lines don't bring any benefit and can be thus in Invoice capture deleted.

At least for Cost invoices, the Expense types (aka Procurement categories in Dynamics 365) defined can considerably facilitate the automatic processing. D365 F&O can use the Procurement category to automatically populate the Main Account in the Invoice distributions. The value is used as default and can be overwritten, if needed.

Having for example a 1:1 mapping between Procurement categories and Main accounts, respectively the same names can make easier the work of AP Clerks and facilitate the troubleshooting.

Conversely, one can define an additional level of detail (aka an additional segment) for reporting purposes. This implies that multiple categories will point to the same Main account, which can increase the overhead, though the complexity of the structure can be simplified by using maybe a good naming convention and a consolidated Excel list with the values. The overhead resumes mainly when dealing with the first instance of an Vendor invoice.

On Procurement categories can be defined also the default Item sales tax groups (a 1:1 mapping) which can be overwritten as well. For the categories with multiple Items sales tax groups, one should decide whether the benefit of providing a default value outweighs the effort for adding the value for each Invoice line.

Defining upfront, before the Go Live, a good hierarchical structure for the Procurement categories and the mappings to the Main accounts, respectively to the Item sales tax groups can reduce the effort of maintaining the structure later and reduces the reporting overhead.

Financial Dimensions & the Vendor Invoice Approval Workflow

Besides their general use, the Financial dimensions can be used to implement an approval process on D365 F&O side by configuring an expenditure reviewer (see [2]) and using it in the Vendor Invoice Approval workflow, respectively of setting up the financial owners for each dimension in scope. Different owners for the Financial dimensions can be defined for each Legal entity via Legal entity overrides. From what it seems, notifications are sent then to the override as well to the default owner.

Starting with the 1.1.0.32 (07-Nov-2023) version of Invoice capture, respectively the 10.0.39 version of D365 F&O (planned for Apr-2024), 3 financial dimensions (Cost center, Department and Legal Entity) are supported directly in the App. This would allow us to cover the example covered in [2]). This reduces the need for maintaining the values in D365 F&O.

Unfortunately, if the approval process needs to use further dimensions (e.g. Vendor, Location) or attributes (e.g. Invoice responsible person) in the approval, one needs either to compromise or find workarounds. If there's no Purchase order as in the case of Cost invoices, involving the actual Buyer is almost impossible. For such extreme cases one needs more flexibility in the approval process and hopefully Microsoft will extend the functionality behind it.

The approval processes needed by customers require occasionally a complexity that's not achievable with the functionality available in D365 for F&O. The customers are forced then either to compromise or use external tools (e.g. Power Automate) for building the respective functionality.

One should consider defining default Financial dimensions on the Vendor, the respective values being used when generating the Invoice in D365 F&O. Defining Financial dimensions templates can help as well when the costs need to be split across different Financial dimensions based on percentages.

In case the financial dimensions differ across a same Vendor's invoices, one can request from the Vendors to provide the respective information on the Invoice.

Organization Administration

Cost invoices can work without providing a Unit of Measure (UoM) in Invoice capture, however one should consider using an UoM at least for aesthetic purposes in reporting. On the other side, this can complicate the setup if the same UoM is used for other purposes.

Vendor Invoice Automation

To be able to process automatically the Vendor invoices once they arrived in D365 F&O, it would useful to maintain the attributes which are modified manually in D365 F&O directly in Invoice Capture. The usual fields are the following:

- Invoice description (header)

- Financial dimensions

- Sales item tax group

Master Data Management

There are master data attributes which even if they are not directly involved in the process, they could make process actor's life a bit easier. It can be the case of the Responsible person and/or the Buyer group, which would allow the AP Clerks to identify the Cost Center and Department related to the Invoice. Maintaining the external Item descriptions for Vendors can help as well in certain scenarios.

Previous post <<||>> Next post

Resources:

[1] Microsoft Learn (2023) Invoice capture overview (link)

[2] Microsoft Learn (2023) Configure expenditure reviewers (link)