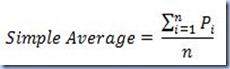

-- Oracle/SQL Server: Simple Averages SELECT ProductID , AVG(UnitPrice) AverageUnitPrice , SUM(UnitPrice)/COUNT(1) AverageUnitPriceDef FROM Purchasing.PurchaseOrderDetail GROUP BY ProductID

For most of the data analysis requirements the simple average function would be enough, though there are cases in which is required to address the sensitivity of values to certain modifiers – for example quantities or the number of lines. For such cases is used the weighted average (weighted arithmetic mean) calculated as the sum of values and quantifiers products, the total being divided by the sum of quantifiers:

Note:

In case the quantifiers are all 1 then the weighted average equates with the simple average, the output being the same also when the values are constant.

The weighted average is quite a useful tool when performing PO Price Analysis in which often needs to be be considered also the volume of purchased quantities with a given Price Unit:

-- Oracle/SQL Server: Simple/Weighted Averages SELECT ProductID , AVG(UnitPrice) AverageUnitPrice , SUM(UnitPrice*OrderQty)/SUM(OrderQty) WeightedAverageUnitPrice FROM Purchasing.PurchaseOrderDetail GROUP BY ProductID

The actual implementation in a query might differ based on requirements and preferences – as multiple left joins, inline view or correlated query, each of the mentioned approaches coming with their downsides and strengths:

-- Oracle/SQL Server: Averages (inline view) SELECT ITM.ProductID , ITM.Name ProductName , ITM.ProductNumber , DAT.AverageUnitPrice , DAT.WeightedAverageUnitPrice FROM Production.Product ITM LEFT JOIN ( --calculated averages SELECT POD.ProductID , AVG(POD.UnitPrice) AverageUnitPrice , SUM(POD.UnitPrice*OrderQty)/SUM(POD.OrderQty) WeightedAverageUnitPrice FROM Purchasing.PurchaseOrderDetail POD JOIN Purchasing.PurchaseOrderHeader POH ON POD.PurchaseOrderID = POH.PurchaseOrderID WHERE POH.Status IN (2, 4) -- 2-Approved, 4-Complete GROUP BY POD.ProductID)DAT ON ITM.ProductID = DAT.ProductID ORDER BY ITM.Name -- Oracle/SQL Server: Averages (multiple left joins) SELECT ITM.ProductID , ITM.Name ProductName , ITM.ProductNumber , AVG(POD.UnitPrice) AverageUnitPrice , SUM(POD.UnitPrice*OrderQty)/SUM(POD.OrderQty) WeightedAverageUnitPrice FROM Production.Product ITM LEFT JOIN Purchasing.PurchaseOrderDetail POD ON ITM.ProductID = POD.ProductID LEFT JOIN Purchasing.PurchaseOrderHeader POH ON POD.PurchaseOrderID = POH.PurchaseOrderID WHERE POH.Status IN (2, 4) -- 2-Approved, 4-Complete GROUP BY ITM.ProductID ,ITM.Name ,ITM.ProductNumber ORDER BY ITM.Name -- SQL Server 2005: Averages (OUTER APPLY) SELECT ITM.ProductID , ITM.Name ProductName , ITM.ProductNumber , DAT.AverageUnitPrice , DAT.WeightedAverageUnitPrice FROM Production.Product ITM OUTER APPLY ( -- calculated averages SELECT AVG(POD.UnitPrice) AverageUnitPrice , SUM(POD.UnitPrice*OrderQty)/SUM(POD.OrderQty) WeightedAverageUnitPrice FROM Purchasing.PurchaseOrderDetail POD JOIN Purchasing.PurchaseOrderHeader POH ON POD.PurchaseOrderID = POH.PurchaseOrderID WHERE ITM.ProductID = POD.ProductID AND POH.Status IN (2, 4) -- 2-Approved, 4-Complete ) DAT ORDER BY ITM.Name

A different type of approach could be focused on calculating the simple or weighted average based only on the last n PO Lines, for example the last 3 PO Lines:

-- Oracle/SQL Server 2005: last 3 PO average prices SELECT DAT.ProductID , MAX(DAT.RANKING) NumberRecords , AVG(DAT.UnitPrice) AveragePrice , SUM(DAT.UnitPrice* DAT.OrderQty)/SUM(DAT.OrderQty) WeightedAveragePrice FROM (-- ranked PO Prices SELECT POD.ProductID , POD.UnitPrice , POD.OrderQty , RANK() OVER(PARTITION BY POD.ProductID ORDER BY POH.OrderDate DESC, POD.PurchaseOrderDetailID DESC) RANKING FROM Purchasing.PurchaseOrderDetail POD JOIN Purchasing.PurchaseOrderHeader POH ON POD.PurchaseOrderID = POH.PurchaseOrderID WHERE POH.Status IN (2, 4) -- 2-Approved, 4-Complete ) DAT WHERE DAT.RANKING BETWEEN 1 AND 3 GROUP BY DAT.ProductID

The problem could be complicated even more by selecting all the POs corresponding to the last 3 distinct Prices used; not sure if it brings any value but the formulation is perfectly valid and don’t despair if a user comes with such a request.

Occasionally it might be needed to study the evolution of prices over time, the previous problem becoming a simple moving average or weighted moving average problem, in which the average is calculated in report with a moving point on the time scale with respect to the previous k points or concerning the previous and following k points including the current point:

G. Priester shows in his Calculating Moving Averages with T-SQL article a nice solution for simple moving averages calculation exemplifying it on stock market data. Frankly, given its flexibility, I prefer to use the CROSS APPLY operator in combination with fGetDatesInInterval function introduced in More Date-related Functionality – Part I post, the function returning all the dates falling in a certain interval. Because in case of POs there could be multiple transactions per day for the same Product, the problem is slightly changed, the averages being based within a 31 window:

-- SQL Server 2005: 31 days moving averages SELECT ITM.ProductNumber , ITM.Name ProductName , DIN.DateSequence ReportingDate , DAT.AverageUnitPrice , DAT.WeightedAverageUnitPrice FROM dbo.fGetDatesInInterval('2003-01-01', '2003-12-31') DIN CROSS APPLY (--calculated averages SELECT POD.ProductID , AVG(POD.UnitPrice) AverageUnitPrice , SUM(POD.UnitPrice*OrderQty)/SUM(POD.OrderQty) WeightedAverageUnitPrice FROM Purchasing.PurchaseOrderDetail POD JOIN Purchasing.PurchaseOrderHeader POH ON POD.PurchaseOrderID = POH.PurchaseOrderID WHERE DATEDIFF(d, POH.OrderDate, DIN.DateSequence) BETWEEN 0 AND 30 -- 31 days --WHERE DATEDIFF(d, POH.OrderDate, DIN.DateSequence) BETWEEN -15 AND 15 -- 31 days AND POH.Status IN (2, 4) -- 2-Approved, 4-Complete GROUP BY POD.ProductID) DAT LEFT JOIN Production.Product ITM ON DAT.ProductID = ITM.ProductID WHERE DATEPART(dw, DIN.DateSequence) BETWEEN 2 AND 6 -- Working Days ORDER BY ITM.Name , DIN.DateSequence