A Software Engineer and data professional's blog on SQL, data, databases, data architectures, data management, programming, Software Engineering, Project Management, ERP implementation and other IT related topics.

12 February 2010

🕋Data Warehousing: Operational Data Store (Definitions)

💎SQL Reloaded: Ways of Looking at Data V (Hierarchies)

    -- BOM Top Most Items
    CREATE FUNCTION dbo.BOMTopMostItems(
      @ProductID int
    , @Date datetime)
    RETURNS @BOM TABLE(
      BillOfMaterialsID int NULL
    , ProductAssemblyID int NULL
    , ComponentID int NOT NULL
    , ModifiedDate datetime NOT NULL
    , Level smallint NOT NULL)
    AS
    BEGIN
      DECLARE @NoRecords int -- stores the number of Products that are part of an Assembly
      DECLARE @Level smallint

      SET @Date = IsNull(@Date, GetDate())
      SET @Level = 0

      -- initializing the routine
      INSERT @BOM(BillOfMaterialsID, ProductAssemblyID, ComponentID, ModifiedDate, Level)
      SELECT BillOfMaterialsID, ProductAssemblyID, ComponentID, ModifiedDate, @Level
      FROM Production.BillOfMaterials
      WHERE ComponentID = @ProductID
        AND DATEDIFF(d, IsNull(EndDate, GetDate()), @Date)<=0

      SELECT @NoRecords = count(1)
      FROM @BOM
      WHERE ProductAssemblyID IS NOT NULL

      -- looping as long as at least one record still has a parent
      -- (the condition was >1, which skipped the parent of a single remaining record)
      WHILE (IsNull(@NoRecords, 0)>0)
      BEGIN
        -- inserting next level
        INSERT @BOM(BillOfMaterialsID, ProductAssemblyID, ComponentID, ModifiedDate, Level)
        SELECT BOM.BillOfMaterialsID, BOM.ProductAssemblyID, BOM.ComponentID, BOM.ModifiedDate, @Level+1
        FROM @BOM B
          JOIN Production.BillOfMaterials BOM
            ON B.ProductAssemblyID = BOM.ComponentID
           AND DATEDIFF(d, IsNull(BOM.EndDate, GetDate()), @Date)<=0
        WHERE B.Level = @Level

        SET @Level = @Level+1

        SELECT @NoRecords = count(1)
        FROM @BOM
        WHERE ProductAssemblyID IS NOT NULL
          AND Level = @Level
      END

      -- deleting the intermediate levels
      DELETE FROM @BOM
      WHERE ProductAssemblyID IS NOT NULL

      RETURN
    END

As can be seen, I first insert the BOM information of the searched Product, then based on these data I retrieve and insert the next level, stopping when no record with a parent is left (the top-most items being the ones for which ProductAssemblyID IS NULL), and in the end I delete the intermediate levels (ProductAssemblyID IS NOT NULL). The same approach could be used to generate the whole hierarchy, in this case starting from the top.

Here is an example based on the above function; I used NULL for the second parameter because the @Date parameter is set to the current date if not provided.

SELECT * FROM dbo.BOMTopMostItems(486, NULL)

When checking the data returned I observed that for the given ProductID the same BillOfMaterialsID appears more than once. This happens because, as can be seen by generating the whole BOM for one of the duplicated ComponentIDs, the same ProductID could appear in a BOM at different levels, or at the same level but belonging to different assemblies. Therefore, in order to get only the list of top-most items you could use a DISTINCT:

SELECT DISTINCT ComponentID FROM dbo.BOMTopMostItems(486, NULL)

On the other side, if we want to remove the duplicates resulting from Products appearing at the same level of the same BOM but for different assemblies, a DISTINCT could be used when inserting into the @BOM table or, even if not recommended, when calling the function:

SELECT DISTINCT BillOfMaterialsID , ProductAssemblyID , ComponentID , ModifiedDate , Level FROM dbo.BOMTopMostItems(486, NULL)
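The first option mentioned above, applying the DISTINCT when inserting into the @BOM table, could look roughly like the following sketch. It reuses the table variable and the two INSERT statements of the function as a standalone batch (initialization plus one iteration only), assumes the AdventureWorks tables, and has not been checked against the record counts discussed here.

```sql
-- Sketch: the function's INSERT statements with DISTINCT added
DECLARE @ProductID int
DECLARE @Date datetime
DECLARE @Level smallint

SET @ProductID = 486
SET @Date = GetDate()
SET @Level = 0

DECLARE @BOM TABLE(
  BillOfMaterialsID int NULL
, ProductAssemblyID int NULL
, ComponentID int NOT NULL
, ModifiedDate datetime NOT NULL
, Level smallint NOT NULL)

-- initialization: the BOM records of the searched Product
INSERT @BOM(BillOfMaterialsID, ProductAssemblyID, ComponentID, ModifiedDate, Level)
SELECT DISTINCT BillOfMaterialsID, ProductAssemblyID, ComponentID, ModifiedDate, @Level
FROM Production.BillOfMaterials
WHERE ComponentID = @ProductID
  AND DATEDIFF(d, IsNull(EndDate, GetDate()), @Date)<=0

-- one iteration: several records of the current level can point to the
-- same assembly, DISTINCT keeps each parent record only once
INSERT @BOM(BillOfMaterialsID, ProductAssemblyID, ComponentID, ModifiedDate, Level)
SELECT DISTINCT BOM.BillOfMaterialsID, BOM.ProductAssemblyID, BOM.ComponentID, BOM.ModifiedDate, @Level+1
FROM @BOM B
  JOIN Production.BillOfMaterials BOM
    ON B.ProductAssemblyID = BOM.ComponentID
   AND DATEDIFF(d, IsNull(BOM.EndDate, GetDate()), @Date)<=0
WHERE B.Level = @Level

SELECT *
FROM @BOM
```

Inside the function the same change would go into the INSERT within the WHILE loop, so that the duplicates are not multiplied further from one level to the next.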

11 February 2010

🕋Data Warehousing: Staging Area (Definitions)

"The staging area is where data from the operational systems is first brought together. It is an informally designed and maintained grouping of data that may or may not have persistence beyond the load process." (Claudia Imhoff et al, "Mastering Data Warehouse Design", 2003)

"A database design used to preprocess data before loading it into a different structure." (Sharon Allen & Evan Terry, "Beginning Relational Data Modeling" 2nd Ed., 2005)

"A place where data in transit is placed, usually coming from the legacy environment prior to entering the ETL layer of processing." (William H Inmon, "Building the Data Warehouse", 2005)

"Place where data is stored while it is being prepared for use, typically where data used by ETL processes is stored. This may encompass everything from where the data is extracted from its original source until it is loaded into presentation servers for end user access. It may also be where data is stored to prepare it for loading into a normalized data warehouse." (Laura Reeves, "A Manager's Guide to Data Warehousing", 2009)

[initial staging area:] "The area where the copy of the data from sources persists as a result of the extract/data movement process. (Data from real-time sources that is intended for real-time targets only is not passed through extract/data movement and does not land in the initial staging area.) The major purpose for the initial staging area is to persist source data in nonvolatile storage to achieve the “pull it once from source” goal." (Anthony D Giordano, "Data Integration Blueprint and Modeling", 2010)

"A location where data that is to be transformed is held in abeyance waiting for other events to occur" (Daniel Linstedt & W H Inmon, "Data Architecture: A Primer for the Data Scientist", 2014)

"An area for the provision of data, where data is stored temporarily for validation and correction before it will be imported into the target database. A staging area thereby provides technical support for the first time right principle of data management." (Boris Otto & Hubert Österle, "Corporate Data Quality", 2015)

10 February 2010

⌛PL/SQL Reloaded: Hierarchies (The Oracle Solution)

The START WITH ass.productassemblyid IS NULL AND TRUNC(NVL(ass.enddate, SYSDATE))>=TRUNC(SYSDATE) constraints function as initialization for the recursive logic, being ignored in the next iterations, while the CONNECT BY PRIOR ass.componentid = ass.productassemblyid AND TRUNC(NVL(ass.enddate, SYSDATE))>=TRUNC(SYSDATE) constraints function as join constraints between the prior result set and the current one. Maybe somebody is asking why the ‘Active BOM’ constraint is specified in both the START WITH and the CONNECT BY clauses and not in the WHERE clause. If we are interested only in the active BOMs then the corresponding constraint must be added to the initiating clause, and it is added to the CONNECT BY clause because we need to ensure that we take only the active children. The WHERE clause is applied only after the hierarchy has been built, so if we move the ‘Active BOM’ constraint from the CONNECT BY to the WHERE clause we’ll most probably get a different number of records, namely 9525 vs. the 8730 the above query returns. The easiest way to check whether the logic is correct is to include the active records in an inline view and construct the BOM with it.
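The two variants discussed above can be sketched as follows. The table name billofmaterials, the selected columns and the bomlevel alias are assumptions (the table is taken to be loaded into the current Oracle schema with unquoted names); the predicates and the ass alias are the ones quoted in the text. The statements have not been run against the data, so the record counts mentioned are the ones from the text, not from this sketch.

```sql
-- Variant 1: 'Active BOM' constraint in both START WITH and CONNECT BY
SELECT ass.billofmaterialsid
, ass.productassemblyid
, ass.componentid
, ass.perassemblyqty
, LEVEL bomlevel
FROM billofmaterials ass
START WITH ass.productassemblyid IS NULL
   AND TRUNC(NVL(ass.enddate, SYSDATE)) >= TRUNC(SYSDATE)
CONNECT BY PRIOR ass.componentid = ass.productassemblyid
   AND TRUNC(NVL(ass.enddate, SYSDATE)) >= TRUNC(SYSDATE);

-- Variant 2 (cross-check): the active records are filtered first in an
-- inline view and the hierarchy is built only from them
SELECT ass.billofmaterialsid
, ass.productassemblyid
, ass.componentid
, ass.perassemblyqty
, LEVEL bomlevel
FROM (
  SELECT bom.billofmaterialsid
  , bom.productassemblyid
  , bom.componentid
  , bom.perassemblyqty
  FROM billofmaterials bom
  WHERE TRUNC(NVL(bom.enddate, SYSDATE)) >= TRUNC(SYSDATE)
) ass
START WITH ass.productassemblyid IS NULL
CONNECT BY PRIOR ass.componentid = ass.productassemblyid;
```

If both variants return the same number of records, the constraints in the first variant are placed correctly.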


As the above query returns 8730 records it seems that the above logic is correct.


In order to match the ‘CTE-Based BOM structure’ query from the previous post, the Oracle query needs several further changes, namely to construct the Path, add the top-most ComponentID, calculate the TotalQty, and add the Product details. The Path can be constructed using the SYS_CONNECT_BY_PATH function, which takes as parameters the attribute to be concatenated (e.g. componentid) and the delimiter (e.g. ‘\’). The top-most ComponentID can be retrieved using the CONNECT_BY_ROOT operator. Unfortunately Oracle doesn’t provide a straightforward way to calculate the TotalQty, though there is an alternative: using again the SYS_CONNECT_BY_PATH function with the PerAssemblyQty attribute and the multiplication sign ‘*’ as parameters, a smart trick that produces an expression which can then be evaluated (e.g. ‘1*1*2*5’ evaluates to 10); because the extract is typically saved to Excel, the final concatenation could be written as an Excel formula (e.g. ‘=1*1*2*5’). Here is the final query:

Specifying an ORDER BY is not always required because the output of a hierarchical query in Oracle is typically sorted as if a depth-first search were used, though if I remember correctly there are exceptions too.
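A sketch of how these pieces could fit together is given below. It is an illustration and not necessarily the query from the original post: the table names billofmaterials and product, the column aliases (bomlevel, topcomponentid, bompath, totalqtyformula) and the outer join to Product are assumptions. TO_CHAR is used so that the concatenated attributes are passed as character values.

```sql
SELECT bom.billofmaterialsid
, bom.productassemblyid
, bom.componentid
, itm.productnumber
, itm.name
, bom.perassemblyqty
, bom.bomlevel
, bom.topcomponentid
, bom.bompath
, bom.totalqtyformula
FROM (
  SELECT ass.billofmaterialsid
  , ass.productassemblyid
  , ass.componentid
  , ass.perassemblyqty
  , LEVEL bomlevel
  -- ComponentID of the top-most record of the current branch
  , CONNECT_BY_ROOT ass.componentid topcomponentid
  -- e.g. \775\520\3
  , SYS_CONNECT_BY_PATH(TO_CHAR(ass.componentid), '\') bompath
  -- e.g. =1*1*2*5, to be evaluated as a formula in Excel
  , '=' || LTRIM(SYS_CONNECT_BY_PATH(TO_CHAR(ass.perassemblyqty), '*'), '*') totalqtyformula
  FROM billofmaterials ass
  START WITH ass.productassemblyid IS NULL
     AND TRUNC(NVL(ass.enddate, SYSDATE)) >= TRUNC(SYSDATE)
  CONNECT BY PRIOR ass.componentid = ass.productassemblyid
     AND TRUNC(NVL(ass.enddate, SYSDATE)) >= TRUNC(SYSDATE)
) bom
  JOIN product itm
    ON bom.componentid = itm.productid
-- the join can change the order of the records,
-- therefore the output is sorted lexically on the path
ORDER BY bom.bompath;
```

The leading ‘*’ returned by SYS_CONNECT_BY_PATH is removed with LTRIM before the ‘=’ sign is prepended, otherwise the expression would not be a valid formula.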


Sometimes it’s useful to create a concatenation of Product Numbers in order to see the actual structure of the BOM (e.g. ‘\BK-R93R-62\BB-9108\BE-2349\BA-8327’) or to use incremental spaces instead (see IndentPath); for this the Product join for ComponentID needs to be brought into the recursive logic.

Comparing the SQL Server and Oracle implementations of hierarchical queries, I would say that Oracle’s implementation is much simpler to write, with a trade-off in functionality, several functions being required in order to extract information from the top-most record or to concatenate attributes. On the other side, SQL Server provides a more elegant solution that allows more functional flexibility at the cost of code duplication between the anchor and the recursive member.


Note:

In case you need to load the Product and BillOfMaterials tables in Oracle, you could do this by using the ‘SQL Server Import and Export Wizard’, for the steps see the SQL Server to Oracle Data Export – Second Magic Class tutorial.

09 February 2010

🕋Data Warehousing: Role-playing Dimension (Definitions)

"A dimension for which multiple foreign key columns exist in a single fact table to represent different contexts for the same dimension. For example, time is a common role-playing dimension with each date in the fact table associated with a different event, such as order date, ship date, and delivery date." (Reed Jacobsen & Stacia Misner, "Microsoft SQL Server 2005 Analysis Services Step by Step", 2006)

"Instances of a dimension that legitimately has more than one value for a given business transaction, such as order date and shipped date. Each attribute with the dimension is uniquely identified to enable easy differentiation between the different roles, such as Order Date, Order Quarter and Shipped Date, and Shipped Quarter." (Laura Reeves, "A Manager's Guide to Data Warehousing", 2009)

"A single database dimension joined to the fact table on a different foreign keys to produce multiple cube dimensions." (Microsoft, "SQL Server 2012 Glossary", 2012)

"The situation where a single physical dimension table appears several times in a single fact table. Each of the dimension roles is represented as a separate logical table with unique column names through views." (Ralph Kimball & Margy Ross, "The Data Warehouse Toolkit" 2nd Ed., 2002)

🕋Data Warehousing: Dimensional Model (Definitions)

"A dimensional model is a form of data modeling that packages data according to specific business queries and processes. The goals are business user understandability and multidimensional query performance." (Claudia Imhoff et al, "Mastering Data Warehouse Design", 2003)

"A data model influenced by performance considerations and optimized for data access and retrieval. This is a form of entity-relationship (ER) modeling that’s limited to working with “measurement” types of subjects and has a prescribed set of allowable structures." (Sharon Allen & Evan Terry, "Beginning Relational Data Modeling" 2nd Ed., 2005)

"A dimensional model contains a central fact table and a set of surrounding dimension tables, each corresponding to one of the components or dimensions of the fact table." (James Yao et al, "Development and Design Methodologies in DWM", 2008)

"A data model organized for the purpose of user understandability and high performance. In a relational database, a dimensional model is a star join schema characterized by a central fact table with a multi-part key. The components of this key are joined to a set of dimension tables, each defined by its own primary keys. In a multi-dimensional database, a dimensional model is the database cube." (Laura Reeves, "A Manager's Guide to Data Warehousing", 2009)

"A data model that represents data in a star-like structure of only one-to-many relationships, where each entity has either all relationships having the ‘one’ side or the ‘many’ side." (DAMA International, "The DAMA Dictionary of Data Management", 2011)

"A specialized type of physical data model particular to a retrieval-only database design, commonly used in Data Warehouses and data marts, where de-normalized fact tables are linked to dimension tables. Star schemas and snowflake schemas are examples of dimensional models." (DAMA International, "The DAMA Dictionary of Data Management", 2011)

💎SQL Reloaded: Ways of Looking at Data IV (Hierarchies)

Most of the hierarchies I met had a maximum of 7 levels, where a level represents a parent-child relation; typically there is no need for deeper hierarchies. In an RDBMS such relations are represented with self-referencing tables, or with self-referencing structures in case more than one table is used to represent the relation. The problem with such structures is that in order to get the whole hierarchy a join must be created for each level, and as the number of levels can vary, such queries can become quite complex. Therefore RDBMS vendors came with their own solutions for working with hierarchies.

There are several types of problems specific to hierarchies:

1. Building the hierarchy behind an item in the structure
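To illustrate the join-per-level problem, here is a minimal sketch that resolves only the first three levels of a BOM. It assumes the Production.BillOfMaterials table from AdventureWorks, in which ProductAssemblyID references the parent and ComponentID the child; the aliases L1, L2 and L3 are made up for the example.

```sql
-- one self-join per level: three levels need three references to the table
SELECT L1.ComponentID Level1ComponentID
, L2.ComponentID Level2ComponentID
, L3.ComponentID Level3ComponentID
FROM Production.BillOfMaterials L1
  LEFT JOIN Production.BillOfMaterials L2
    ON L2.ProductAssemblyID = L1.ComponentID
  LEFT JOIN Production.BillOfMaterials L3
    ON L3.ProductAssemblyID = L2.ComponentID
WHERE L1.ProductAssemblyID IS NULL -- top-most assemblies
```

Each further level requires another join, and the query has to be rewritten whenever the depth of the hierarchy changes.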

2. Identifying the top-most parent of an item in the structure

3. Identifying the top-most parent of an item in the structure and building the hierarchy behind it – a combination of the previous mentioned problems

Building the hierarchy

SQL Server 2005 introduced the common table expressions (CTE), a powerful feature that allows building whole hierarchies in a simple manner. From a structural point of view a recursive CTE has two elements: the anchor member defining the starting point of the query, respectively the recursive member that builds upon the anchor member. The starting point of the query in this case is the entity or entities for which the structure is built, and here there are two situations: we are looking for all top-most entities or for the entities matching certain criteria, typically one or more Product Numbers. The difference between the two scopes could lead to different approaches: whether to include the Product information in the CTE, or to use the CTE only to build the hierarchical structure and add the Product information only at the end. Before things become fuzzier let’s start with an example. Here are the two approaches for defining the anchor member, the first query identifying the top-most assemblies, while the second searches for the components in the BOM that match a criterion (e.g. the Product Number starts with BK-R93R).

The anchor and the recursive member are merged in the CTE with the help of a UNION ALL, therefore the number of attributes must be the same and their data types must be compatible. Here is the final query:
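The two anchor queries could look roughly like the sketch below. It assumes the AdventureWorks tables Production.BillOfMaterials and Production.Product and borrows the ‘active BOM’ date filter used elsewhere on this blog; the selected columns and the aliases are illustrative.

```sql
-- Anchor 1: the top-most assemblies (records without a parent)
SELECT BOM.BillOfMaterialsID
, BOM.ProductAssemblyID
, BOM.ComponentID
, BOM.PerAssemblyQty
FROM Production.BillOfMaterials BOM
WHERE BOM.ProductAssemblyID IS NULL
  AND DATEDIFF(d, IsNull(BOM.EndDate, GetDate()), GetDate())<=0

-- Anchor 2: the components in the BOM matching a search criterion
SELECT BOM.BillOfMaterialsID
, BOM.ProductAssemblyID
, BOM.ComponentID
, BOM.PerAssemblyQty
FROM Production.BillOfMaterials BOM
  JOIN Production.Product ITM
    ON BOM.ComponentID = ITM.ProductID
WHERE ITM.ProductNumber LIKE 'BK-R93R%'
  AND DATEDIFF(d, IsNull(BOM.EndDate, GetDate()), GetDate())<=0
```

The second form needs the Product join already in the anchor member, which is why the two scopes can lead to differently structured CTEs.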
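A possible shape of such a query is sketched below. It is an illustration and not necessarily the query from the original post, assuming the AdventureWorks tables and the first anchor (top-most assemblies). The explicit CASTs are there because the data types of the anchor and of the recursive member have to match; the lengths chosen (decimal(18,2), varchar(500)) are assumptions.

```sql
;WITH BOM(BillOfMaterialsID, ProductAssemblyID, ComponentID, PerAssemblyQty, TotalQty, Level, Path)
AS (
  -- anchor member: top-most assemblies
  SELECT B.BillOfMaterialsID
  , B.ProductAssemblyID
  , B.ComponentID
  , B.PerAssemblyQty
  , CAST(B.PerAssemblyQty AS decimal(18,2))
  , 1
  , CAST('\' + CAST(B.ComponentID AS varchar(10)) AS varchar(500))
  FROM Production.BillOfMaterials B
  WHERE B.ProductAssemblyID IS NULL
    AND DATEDIFF(d, IsNull(B.EndDate, GetDate()), GetDate())<=0
  UNION ALL
  -- recursive member: the components of the assemblies found so far
  SELECT C.BillOfMaterialsID
  , C.ProductAssemblyID
  , C.ComponentID
  , C.PerAssemblyQty
  , CAST(BOM.TotalQty * C.PerAssemblyQty AS decimal(18,2))
  , BOM.Level + 1
  , CAST(BOM.Path + '\' + CAST(C.ComponentID AS varchar(10)) AS varchar(500))
  FROM BOM
    JOIN Production.BillOfMaterials C
      ON C.ProductAssemblyID = BOM.ComponentID
     AND DATEDIFF(d, IsNull(C.EndDate, GetDate()), GetDate())<=0
)
SELECT BOM.BillOfMaterialsID
, BOM.ProductAssemblyID
, BOM.ComponentID
, ITM.ProductNumber
, ITM.Name
, BOM.PerAssemblyQty
, BOM.TotalQty
, BOM.Level
, BOM.Path
FROM BOM
  JOIN Production.Product ITM
    ON BOM.ComponentID = ITM.ProductID
ORDER BY BOM.Path
```

The Product details are added only in the final SELECT, which keeps the recursive member small.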



The TotalQty attribute has been introduced in order to show the cumulated quantity for each component; for example, if the Assembly has the PerAssemblyQty 2 and the Component has the PerAssemblyQty 10 then the actual total quantity needed is 2*10 = 20. The Path attribute has been introduced in order to sort the hierarchy lexically based on the sequence formed from the ComponentIDs; the Product Number could have been used instead.

Identifying the top-most parent

The CTE can also be used for identifying the top-most parent of a given Product or set of Products; the second anchor query could be used for this, while the recursive member would involve the reverse join between BOM and BillOfMaterials. Here is the final query:
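A sketch of this bottom-up variant follows, again assuming the AdventureWorks tables; the StartComponentID attribute and the search pattern are added only for illustration. Compared with the top-down query the join is reversed: the assembly of the current record is searched as a component one level higher.

```sql
;WITH BOM(BillOfMaterialsID, ProductAssemblyID, ComponentID, StartComponentID, Level)
AS (
  -- anchor member: the BOM records of the searched Products
  SELECT B.BillOfMaterialsID
  , B.ProductAssemblyID
  , B.ComponentID
  , B.ComponentID
  , 0
  FROM Production.BillOfMaterials B
    JOIN Production.Product ITM
      ON B.ComponentID = ITM.ProductID
  WHERE ITM.ProductNumber LIKE 'BK-R93R%'
    AND DATEDIFF(d, IsNull(B.EndDate, GetDate()), GetDate())<=0
  UNION ALL
  -- recursive member: climbing from the component to its assembly
  SELECT P.BillOfMaterialsID
  , P.ProductAssemblyID
  , P.ComponentID
  , BOM.StartComponentID
  , BOM.Level + 1
  FROM BOM
    JOIN Production.BillOfMaterials P
      ON BOM.ProductAssemblyID = P.ComponentID
     AND DATEDIFF(d, IsNull(P.EndDate, GetDate()), GetDate())<=0
)
-- only the records without a parent are top-most items
SELECT DISTINCT BOM.StartComponentID
, BOM.ComponentID TopMostComponentID
FROM BOM
WHERE BOM.ProductAssemblyID IS NULL
```

The filter in the final SELECT discards the intermediate levels, which the CTE nevertheless has to produce.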

Given the fact that the final CTE output includes the output from each iteration when only the data from the last iteration are needed, this might not be the best solution. I would expect that the simulation of recursion with the help of a temporary table would scale better in this case.


Another interesting fact to note is that in this case more assemblies are found to match the criteria of top-most item, meaning that the BOMs are not partitioned – two BOMs could have common elements.

07 February 2010

🎡⌛SSIS: SQL Server to Oracle Data Export (Second Magic Class)

Like in the previous tutorial we’ll use the Production.Product table from AdventureWorks database coming with SQL Server.

Step 1: Create an Oracle User

If you already have an Oracle User created then you could skip this step, unless you want to create a User only for the current tutorial or for loading other AdventureWorks tables in Oracle. For this we will first create a permanent tablespace (e.g. AdventureWorks), an allocation of space in the database, then create the actual User (e.g. SQLServer) on the just created tablespace, an action that will create a schema with the same name as the User, and finally grant the User the ‘create session’, ‘create materialized view’ and ‘create table’ privileges.

Open your SQL Developer tool of choice and connect to the Oracle database, then type the following statements and run them one by one. Do not forget to provide a strong password in the IDENTIFIED BY clause. Afterwards create a new connection using the just created User.

    -- Create TableSpace
    CREATE BIGFILE TABLESPACE AdventureWorks
    DATAFILE 'AdventureWorks.dat'
    SIZE 20M AUTOEXTEND ON;

    -- Create User
    CREATE USER SQLServer
    IDENTIFIED BY <your_password>
    DEFAULT TABLESPACE AdventureWorks
    QUOTA 20M ON AdventureWorks;

    -- Grant privileges
    GRANT CREATE SESSION
    , CREATE MATERIALIZED VIEW
    , CREATE TABLE
    TO SQLServer;

Step 2: Start SQL Server Import and Export Wizard

From SQL Server Management Studio choose the database from which you want to export the data (e.g. AdventureWorks), right click on it and from the floating menu choose Tasks/Export Data.

Step 3: Choose a Data Source

In the ‘Choose a Data Source’ step select the ‘Data Source’, SQL Server Native Client 10.0 for exporting data from SQL Server, choose the ‘Server name’ from the list of available SQL Servers, select the Authentication mode and the Database (e.g. AdventureWorks), then proceed to the next step by clicking ‘Next’.

Step 4: Choose a Destination

In the ‘Choose a Destination’ step select the Destination, in this case ‘Oracle Provider for OLE DB’, and then click on ‘Properties’ in order to provide the connectivity details. In the ‘Data Link Properties’ dialog just opened enter the Data Source (the SID of your Oracle database), the ‘User name’ (e.g. SQLServer) and the ‘Password’, and check the ‘Allow saving password’ checkbox; without this last step it is not possible to connect to the Oracle database. Then test the connection by clicking ‘Test Connection’, and if the test succeeds proceed to the next step.

Note:

Besides ‘Oracle Provider for OLE DB’ there are three other drivers that allow you to connect to an Oracle database: ‘Microsoft OLE DB Provider for Oracle’, ‘.Net Framework Data Provider for Oracle’, respectively ‘Oracle Data Provider for .Net’, each of them coming with its own downsides and benefits.

Step 5: Specify Table Copy Or Query

In this step choose: ‘Copy data from one or more tables or views’ option.

Step 6: Select Source Tables and Views

In the ‘Select Source Tables and Views’ step select the database objects (e.g. Production.vProducts) from which you’ll export the data. You could go with the provided Destination table, though with the default settings the Oracle table’s name will be “Product” (including the quotes, thus case-sensitive) and not Product as we’d expect. There are two options: go with the default settings and afterwards change in Oracle the names of the table and of its columns, which have the same problem, or create the table manually in Oracle. I prefer the second option because I can use the SQL script automatically generated by the wizard: with the just chosen table selected click on the ‘Edit Mappings’ button, which brings up the ‘Column Mappings’ dialog, and click the ‘Edit SQL’ button, the statement appearing as editable in the ‘Create Table SQL Statement’ dialog.

    CREATE TABLE sqlserver.Product (
      ProductID INTEGER NOT NULL
    , Name NVARCHAR2(50) NOT NULL
    , ProductNumber NVARCHAR2(25) NOT NULL
    , MakeFlag NUMBER NOT NULL
    , FinishedGoodsFlag NUMBER NOT NULL
    , Color NVARCHAR2(15)
    , SafetyStockLevel INTEGER NOT NULL
    , ReorderPoint INTEGER NOT NULL
    , StandardCost NUMBER NOT NULL
    , ListPrice NUMBER NOT NULL
    , ProductSize NVARCHAR2(5)
    , SizeUnitMeasureCode NCHAR(3)
    , WeightUnitMeasureCode NCHAR(3)
    , Weight NUMBER
    , DaysToManufacture INTEGER NOT NULL
    , ProductLine NCHAR(2)
    , Class NCHAR(2)
    , Style NCHAR(2)
    , ProductSubcategoryID INTEGER
    , ProductModelID INTEGER
    , SellStartDate TIMESTAMP NOT NULL
    , SellEndDate TIMESTAMP
    , DiscontinuedDate TIMESTAMP
    , ModifiedDate TIMESTAMP NOT NULL)
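For the first option, keeping the table created by the wizard and fixing the names afterwards in Oracle, the cleanup could look roughly like the sketch below. It assumes the wizard created the table in the sqlserver schema as "Product" with equally quoted column names; only two columns are shown, the others would be renamed the same way.

```sql
-- a quoted identifier is case-sensitive and must be referenced with quotes
SELECT *
FROM sqlserver."Product";

-- renaming the table to the unquoted (case-insensitive) name
ALTER TABLE sqlserver."Product" RENAME TO Product;

-- renaming the quoted columns one by one
ALTER TABLE sqlserver.Product RENAME COLUMN "ProductID" TO ProductID;
ALTER TABLE sqlserver.Product RENAME COLUMN "Name" TO Name;
```

With a table of this width the renaming means one statement per column, which is one more reason to prefer creating the table manually.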

Because we have removed the rowguid column from the table created in Oracle, we’ll have to change the mappings, for this click on ‘Edit Mappings’ and in ‘Mapping’ list box from ‘Column Mappings’, in the Destination dropdown next to rowguid column select ‘

You could choose to export more than one table, though for this you’ll have to create manually each table in Oracle and then map the created tables in ‘Select Source Tables and Views’ step.

Step 7: Review Data Type Mapping

Step 8: Save and Run Package

In ‘Save and Run Package’ check the ‘Run immediately’, respectively the ‘Save SSIS Package’ and ‘File System’ option, then proceed to the next step by clicking ‘Next’.

Step 9: Save SSIS Package

In ‘Save SSIS Package’ step provide the intended Name (e.g. Export Products To Oracle) or Description of the Package, choose the location where the package will be saved, then proceed to the next step by clicking ‘Next’.

Step 10: Complete the Wizard

Step 11: Executing the Package

Step 12: Checking the Data

If the previous step completed successfully, then you can copy the following statement in Oracle SQL Developer and execute it, the table should be already populated.

SELECT * FROM sqlserver.Product

If you used another schema to connect to, then you’ll have to replace the above schema (e.g. SQLServer) with your schema.

🗄️Data Management: The Data-Driven Enterprise (Part I: Thoughts on a White Paper)


The paper touches several important aspects related to Data Management, approaching concepts like “value of data”, “data quality”, “data integration”, “business involvement”, “data trust”, “relevant data”, “timely data”, “virtualized access”, “compliant reporting”, “Business-IT collaboration”, highlighting the importance of having adequate processes, infrastructure and culture in order to bring more value for the business. I totally agree with the importance of these concepts, though I think that there are many other aspects that need to be considered. Almost all vendors juggle with such concepts, though what’s often missing is the knowledge/wisdom and the method to put philosophies and technologies into use, to redesign an organization’s infrastructure and culture so it could bring the optimum benefit.

Since the appearance of the data warehouse concept, the efficient integration of the various data islands existing within and outside of an organization has become a Holy Grail for IT vendors and organizations, though given the fast pace with which new technologies appear this hunt looks more like chasing a Fata Morgana in the desert. Informatica builds a strong case for data integration in general and for Informatica 9 in particular, their new infrastructure platform aiming to enable organizations to become data-driven by providing a centralized architecture for enforcing data policy and addressing issues like data timeliness, format, semantics, privacy and quality[3]. On the other side, the grounds on which Informatica builds its launching strategy could be counter-argued, considering the grey zone they were placed in.

Quantifying Value of Data

How many of the organizations could say that they could quantify (easily) the real value of their data when there is no market value they could be benchmarked against? I would say that data have only a potential value that could increase only with its use, once you learned to explore the data, find patterns and new uses for the data, derive knowledge out of it and use it wisely in order to derive profit and a competitive advantage, and it might take years to arrive there.

There are costs that can be quantified, like the number of hours employees spend on maintaining duplicate data or correcting the issues driven by bad data quality, or more generally the costs related to waste, and there are costs that can’t be quantified so easily, like the costs associated with bad decisions or lost opportunities driven by missing data or an inadequate reflection of reality. There is another aspect: even if organizations manage to quantify such costs, without some transparency on how they arrived at the respective numbers it feels like somebody just pulled some numbers out of a magician’s hat. It would be great if the quantification of such costs were somehow standardized, though that’s difficult to do given the fact that each organization approaches Data Management from its own perspective and requirements.

From Data to Meaning

Reports are used only to aggregate, analyze and navigate data, while it’s the Users’ responsibility to give adequate meaning to the data and, together with the data analyst, to find the who, how, when, where, what, why, which and by what means; in a word, to understand the factors that impact the business positively or negatively, the correlation between them, and how they can be strengthened or mitigated in order to achieve better quality and outcomes.

People want nice charts and metrics that can give them a bird’s-eye view of the current state, though the aggregated data could easily hide the reality because of the quality of the data, the quality of the reports themselves, or the degree to which they cover the reality. Part of the data-driven philosophy comes down to understanding the data and reacting to it. I met people who were ignoring the data, preferring to take wild guesses; sometimes they were right, other times they were wrong.

From Functionality to Usability

There are Users who, once they have a tool, want to find out all about its capabilities, play with the tool, find other uses for it, and they could even come up with nice-to-have features. There are also Users who don’t want to bother getting the data by themselves, they just want the data timely and in the format they need. The fact that Informatica allows Users to analyze the data by themselves is quite a big deal, though as I already stressed in a previous post, you can’t expect a User to become a data expert overnight; there are even developers who have difficulties in handling complex data analysis requirements.

Allowing Users to decide which logic to apply in their reports could prove to be a double-edged sword, organizations risking ending up with multiple versions of the same story. The various reports need to be aligned, and the Users brought on the same page from the point of view of expectations and constraints. On the other side, some Users prefer to prepare the data by themselves because they know the issues existing in the data or because they have more flexibility in making the data look positive.

Trust, Relevance and Timeliness

An important part of Informatica’s strategy is based on data trust, relevancy and timeliness, three important but hard to quantify dimensions of Data Quality. Trust is often correlated with the Users’ perception of the overall Data Quality, the degree to which the aggregated data presented in reports can be backed up with detailed data, and the visibility they have on the business rules and transformations used. If the Users can get a feeling of the data with click-through, drilldown or drill-through reports, and if the business rules and transformations are documented, then most probably data trust won’t be an issue anymore. Data relevancy and data timeliness are heavily requirement-dependent, for some Users being enough to work with one-week-old data while others need live data. To a greater or lesser degree, all data used by the business are relevant, otherwise I don’t see why they should be maintained.

Software Tools as Enablers

Sometimes being aware that there is a problem and doing something to fix it already brings an amount of value to the business, and this without investing in complex technologies but by handling things methodically and enforcing some management practices: identifying, assessing, addressing, monitoring and controlling issues. I bet this alone could bring a benefit for an organization, and everything starts just by recognizing that there is a problem and doing something to fix the root causes. On the other side, software technologies could enable performing the various tasks more efficiently and effectively, with better quality, fewer resources, in less time and eventually with lower costs. Now what’s the value of the savings resulting from addressing the issue, and what’s the value of the savings resulting from using a particular software technology?!

Software tools like Informatica are just enablers, they don’t guarantee results and don’t eliminate barriers unless people know how to use them and make the most of them. This requires experts who know the business and the various software tools involved, and good experienced managers to bring such projects on the right track. When the objectives are not met or the final solution doesn’t satisfy all requirements, people end up developing alternative solutions, which I categorize as personal solutions (spreadsheets, MS Access applications), an organization ending up with such islands of duplicated data/logic. Often Users need to make use of such solutions in order to understand their data, and this is an area in which Informatica could easily gain adherents.

Business-IT collaboration

It’s no news that IT/IM and the other functional departments often don’t function as partners. IT initiatives are not adequately supported by the business, while in many technology-related initiatives driven by the business at corporate level the IT department is involved only as executor and has little to say in the decision to use one technology or another. Many such initiatives ignore aspects specific to IT: the usability of the solution, its integration with other solutions, the nuances of the internal architecture and infrastructure. Of course phrases like “business struggling in working with IT” appear when IT and the business function as separate entities with a minimum of communication, when the various strategies are not aligned as they are supposed to be.

Regardless of the slogans and concepts the vendors juggle with, I’m sorry, but I can’t believe that there is one tool that matches all requirements, that provides a fully integrated solution, or that a tool by itself is sufficient for eliminating the language and collaboration barriers between the business and IT!

Human Resources & Co.

Many organizations don’t have in-house the human resources needed for the various projects related to Data Management, and therefore bring in consultants or outsource parts of the projects. A consultant needs time in order to understand the processes existing in an organization and the organization’s particularities. Even if business analysts manage to turn the requirements into solid specifications, it’s difficult to cover all the aspects without a deep knowledge of the architecture used, just as it’s difficult for consultants to put the pieces of the puzzle together, especially when many of the pieces are missing. The consultants generally expect to have all the pieces of the puzzle, while the other side expects the consultants to identify the missing pieces.

When outsourcing tasks (e.g. data analysis) or data-related infrastructure (e.g. data warehouses, data marts) an organization risks losing control over what’s happening, the communication issues being reflected in longer cycle times for issue resolution, turning everything into a challenge. There are many other issues related to outsourcing that maybe deserve to be addressed in detail.

The Lack of Vision, Policy and Strategy

An organization needs to have a vision, policy and strategy toward data quality in particular and Data Management in general, in order to plan, enforce and coordinate the overall effort toward quality. Their lack can have an unpredictable impact on information systems and the reporting infrastructure in particular, and on the business as a whole. Without them, data quality initiatives tend to have a local and narrow scope and lack the expected effectiveness, resulting in rework and failure stories. The saying “it’s better to prevent than to cure” best reflects the philosophy on which Data Management should be centered.

Lack of Ownership

The lack of ownership can be seen in the context of the lack of policy and strategy, though given its importance it deserves special attention. The saying “each employee is responsible for quality” applies to data quality too: each user and department needs to take ownership of the data they have to maintain, for their own or other departments’ purposes, just as they have to take ownership of the reports that fall within the scope of their work, assuring their quality and the related documentation, and of the explicit and implicit islands of knowledge that exist.

References:

[1] Informatica. (2009). The Data-Driven Enterprise. [Online] Available from: http://www.informatica.com/downloads/7060_data_driven_wp_web.pdf (Accessed: 6 February 2010).

[2] Hertzler. (2006). Eight Aspects of the Data Driven Corporation – Exploring your Gap to Entitlement. [Online] Available from: http://www.hertzler.com/php/portfolio/white.paper.detail.php?article=31 (Accessed: 6 February 2010).

[3] Informatica. (2009). Informatica 9: Infrastructure Platform for the Data-Driven Enterprise, Speaker: Sohaib Abbasi, Chairman and CEO. [Online] Available from: http://www.informatica.com/9/thelibrary.html#page=page-5 (Accessed: 6 February 2010).

05 February 2010

🎡SSIS: Wizarding an SSIS Package (First Magic Class)

Step 1: Start SQL Server Import and Export Wizard

From SQL Server Management Studio choose the database from which you want to export the data (e.g. AdventureWorks), right click on it and from the floating menu choose Tasks/Export Data. This action will bring up the ‘SQL Server Import and Export Wizard’ used, as its name indicates, for importing and exporting data on the fly from/to SQL Server or any other source.

Step 2: Choose a Data Source

In the ‘Choose a Data Source’ step select the ‘Data Source’ (SQL Server Native Client 10.0 for exporting data from SQL Server), choose the ‘Server name’ from the list of available SQL Servers, select the Authentication mode and the Database (e.g. AdventureWorks), then proceed to the next step by clicking ‘Next’.

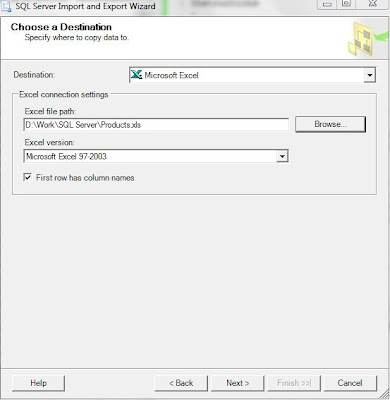

Step 3: Choose a Destination

In ‘Choose a Destination’ step select the Destination, in this case ‘Microsoft Excel’, browse for the Excel file to which you want to export the data (e.g. Products.xls) and check ‘First row has column names’ in case you want to include the column names, then proceed to the next step by clicking ‘Next’.

Step 4: Specify Table Copy or Query

In the ‘Specify Table Copy or Query’ step there are two options you could choose: ‘Copy data from one or more tables or views’ or ‘Write a query to specify the data to transfer’. Their names speak for themselves. The second option allows for more flexibility, and you could just copy and paste the query used for your export. In this example just go with the first option and then proceed to the next step by clicking ‘Next’.

Step 5: Select Source Tables and Views

In ‘Select Source Tables and Views’ step select the database objects (e.g. Production.vProducts) from which you’ll export the data, then proceed to the next step by clicking ‘Next’. If the destination allows it, it’s possible to choose more than one database object.

Step 6: Review Data Type Mapping

In the ‘Review Data Type Mapping’ step you can typically go with the provided defaults, so just proceed to the next step by clicking ‘Next’.

Step 7: Save and Run Package

In the ‘Save and Run Package’ step you could choose to ‘Run immediately’ the package and/or ‘Save SSIS Package’ to the ‘SQL Server’ or locally to the ‘File System’. Saving the package locally allows you to modify and rerun the package at a later date. For this example check ‘Run immediately’ as well as ‘Save SSIS Package’ with the ‘File System’ option, then proceed to the next step by clicking ‘Next’.

Step 8: Save SSIS Package

In ‘Save SSIS Package’ step provide the intended Name (e.g. Export Products) or Description of the Package, choose the location where the package will be saved, then proceed to the next step by clicking ‘Next’.

Step 9: Complete the Wizard

The ‘Complete the Wizard’ step allows reviewing the choices made in the previous steps and, if needed, navigating back to the previous steps in order to make the necessary changes. Once you have reviewed the details, proceed to the next step by clicking ‘Next’.

Step 10: Executing the Package

In the ‘Executing the Package’ step the Package is run, the progress being shown for each step. Just close the wizard once you have reviewed the steps.

If the package ran successfully you can go on and check the exported data, apply additional formatting, etc.

Step 11: Inspecting the Package

The package can be reopened and modified in Microsoft Visual Studio or SQL Server Business Intelligence Development Studio. For this, open one of the two environments, select File/Open File from the Main Menu, then browse for the location where the Package (e.g. Export Products.dtsx) was saved and open the file. Here is the package created by the Wizard:

As can be seen, the package contains two tasks: the ‘Preparation SQL Task 1’, which creates the table (e.g. vProducts) in the Excel file, and the ‘Data Flow Task 1’, which dumps the data into the created table. By double clicking the ‘Data Flow Task 1’ you can see its content:

- the ‘Source - vProducts’ OLE DB Data Source, holding the connectivity information to the SQL Server and the list of Columns in scope.

- the ‘Data Conversion’ Transformation, which converts the data between Source and Destination, given that Excel has different data types than SQL Server.

- the ‘Destination - vProducts’ Excel Destination, holding the connectivity information for the Excel file and the Mappings in place.

You could explore the properties of each object in order to learn more about the attributes used and the values they took.

Ok, so you’ve opened the package, but how do you run it again? It is enough to double click on the locally saved Package (e.g. Export Products.dtsx), an action that will bring up the ‘Execute Package Utility’, and then click on the ‘Execute’ button to run the Package.

If you haven’t made any changes to the Excel file to which you exported the data (e.g. Products.xls) and haven’t moved the file, then the package will fail when it attempts to run the ‘Preparation SQL Task 1’ task, because a table with the expected name already exists in the file.

In order to avoid this error you’ll have to delete from the Excel file the sheet (e.g. vProducts) where the data were dumped and save the file. Now you can execute the package again and it should run without additional issues.

If you’ve moved or deleted the Excel file, the package will in the end run without problems, even if you get a warning that the Excel file is not available. Instead of deleting the sheet manually you could use the File System Task to move the file to another location, though that’s a topic for another post.
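
Until that post, the idea of moving the previous export out of the way before rerunning the package can be sketched outside of SSIS too. The snippet below is only an illustration in Python, not the File System Task itself; the function name archive_existing_export, the archive folder and the timestamp suffix are my own assumptions.

```python
from datetime import datetime
from pathlib import Path
import shutil


def archive_existing_export(export_file, archive_dir, now=None):
    """Move a previous export (e.g. Products.xls) into an archive folder.

    Returns the new path of the archived file, or None when there was
    nothing to move. All names are hypothetical and used for illustration.
    """
    export_file = Path(export_file)
    if not export_file.exists():
        return None  # nothing to archive, the next run starts from a missing file

    archive_dir = Path(archive_dir)
    archive_dir.mkdir(parents=True, exist_ok=True)

    # A timestamp suffix keeps earlier exports from overwriting each other.
    stamp = (now or datetime.now()).strftime("%Y%m%d_%H%M%S")
    target = archive_dir / f"{export_file.stem}_{stamp}{export_file.suffix}"
    shutil.move(str(export_file), str(target))
    return target


if __name__ == "__main__":
    # Hypothetical paths; adjust them to where the package writes its output.
    moved = archive_existing_export("Products.xls", "archive")
    print("Archived to:", moved)
```

Calling the function before each execution means the package no longer finds the old sheet in place, which is the situation that made the ‘Preparation SQL Task 1’ fail.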

🕋Data Warehousing: Star Schema (Definitions)

"A relational database structure in which data is maintained in a single fact table at the center of the schema with additional dimension data stored in dimension tables. Each dimension table is directly related to the fact table by a key column." (Microsoft Corporation, "SQL Server 7.0 System Administration Training Kit", 1999)

"A star schema is a dimensional data model implemented on a relational database." (Claudia Imhoff et al, "Mastering Data Warehouse Design", 2003)

"A star schema is a set of tables comprised of a single, central fact table surrounded by dimension tables. Each dimension is represented by a single dimension table. Star schemas implement dimensional data structures with denormalized dimensions. Snowflake schemas are an alternative to a star schema design." (Sharon Allen & Evan Terry, "Beginning Relational Data Modeling" 2nd Ed., 2005)

"A single fact table surrounded by a single hierarchical layer of dimensional tables, in a data warehouse database." (Gavin Powell, "Beginning Database Design", 2006)

"A single fact table which joins to many dimension tables, each of which is a single denormalized dimension table." (Reed Jacobsen & Stacia Misner, "Microsoft SQL Server 2005 Analysis Services Step by Step", 2006)

"The instantiation of a dimensional model in a relational database. A star schema consists of a fact table and the dimension tables that it references. The fact table contains facts and foreign keys; the dimension tables contain dimensional attributes by which the facts will be filtered, rolled up, or grouped." (Christopher Adamson, "Mastering Data Warehouse Aggregates", 2006)

"A single fact table which joins to many dimension tables, each of which is a single denormalized dimension table." (Reed Jacobsen & Stacia Misner, "Microsoft SQL Server 2005 Analysis Services Step by Step", 2006)

"The implementation of a dimensional model in a relational database. The tables are organized around a single central fact table possessing a multi-part key, and each surrounding dimension table has its own primary key." (Laura Reeves, "A Manager's Guide to Data Warehousing", 2009)

"The basic form of data organization for a data warehouse, consisting of a single large fact table and many smaller dimension tables." (Toby J Teorey, ", Database Modeling and Design 4th Ed", 2010)

"The arrangement of the collection of fact and dimension tables in the dimensional data model, resembling a star formation, with the fact table placed in the middle surrounded by the dimension tables. Each dimension table is in a one-to-many relationship with the fact table." (Paulraj Ponniah, "Data Warehousing Fundamentals for IT Professionals", 2010)

"The basic form of data organization for a data warehouse, consisting of a single large fact table and many smaller dimension tables." (Toby J Teorey, ", Database Modeling and Design" 4th Ed., 2010)

"A common form of a dimensional data model, where a fact table is directly linked by foreign keys to several dimension tables." (Craig S Mullins, "Database Administration", 2012)

"A relational database structure in which data is maintained in a single fact table at the center of the schema with additional dimension data stored in dimension tables. Each dimension table is directly related to and usually joined to the fact table by a key column." (Microsoft, "SQL Server 2012 Glossary", 2012)

"A relational schema whose design represents a dimensional data model. The star schema consists of one or more fact tables and one or more dimension tables that are related through foreign keys." (Oracle, "Database SQL Tuning Guide Glossary", 2013)

"A type of relational database schema that is composed of a set of tables comprising a single, central fact table surrounded by dimension tables. See also dimension table, star join." (Sybase, "Open Server Server-Library/C Reference Manual", 2019)

About Me

- Adrian

- Koeln, NRW, Germany

- IT Professional with more than 25 years experience in IT in the area of full life-cycle of Web/Desktop/Database Applications Development, Software Engineering, Consultancy, Data Management, Data Quality, Data Migrations, Reporting, ERP implementations & support, Team/Project/IT Management, etc.