|

| Data Migrations Series |

Introduction

ERP implementations are one of the most complex projects to plan as they often imply changes/transformations at different levels (e.g. strategic, processes, data, cultural, technological), span over one or more years, involve many resources that need to be efficiently managed, and often come with important costs for the organization.

One way of handling complexity is to ignore the nonessential in planning by focusing on the important activities/phases, following to go deeper as the project progresses. Another way to handle complexity is to split it at manageable parts – identifying and grouping together components. For example, Data Migration (DM) and Data Quality (DQ) are managed as subprojects, with their own planning. The two strategies can be combined to increase the effect.

Planning a DM cannot be done without looking at the timelines of the ERP implementation and considering the various interfaces to the DQ, however in this post I will focus only on the first two.

The Context

In the context of an ERP implementation there are three main approaches to the planning of a DM – pushing the activities toward the end of the implementation, pushing most activities toward the beginning of the implementation, or splitting the various activities over the whole timeline of the ERP implementation. Borrowing a term from statistics, we can talk about a left-skewed plan, a right-skewed plan, respectively a uniform-distributed plan.

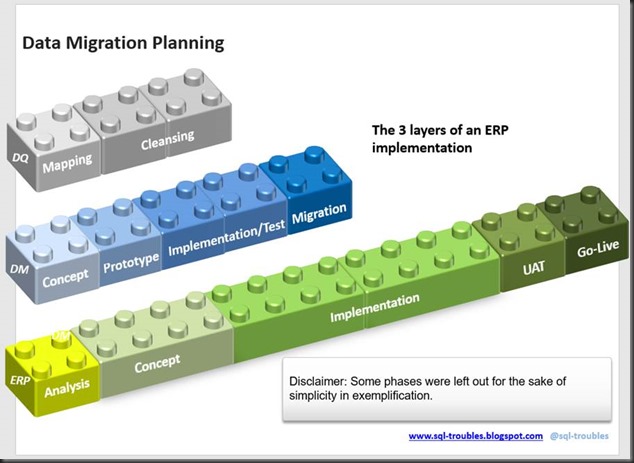

For exemplification I will use a set of Lego pieces grouped together in 3 rows and representing the main phases of an ERP implementation, DM, respectively DQ:

The Lego pieces are a good tool for representing the phases, even they can be mischievous because there’s often no clear delimitation between certain phases as they overlap or repeat over several iterations, and bricks’ length doesn’t necessarily represent the actual duration of the phases. In addition, the phases are oversimplified in order not to clutter the diagrams. The detailed phases will be considered in further posts. The color changes gradually as the activities get closer toward the end.

Left-Skewed Planning

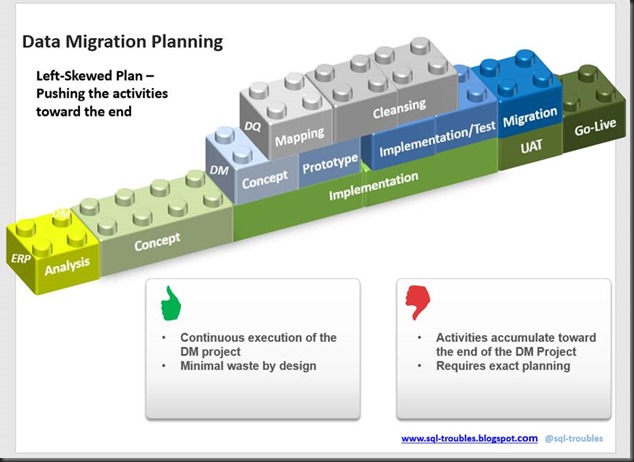

One way to plan a DM is backwards from the Go-Live, the DM activities flowing continuously backwards (the DM bricks are arranged from the end over the implementation bricks) and thus accumulating toward the end of the ERP implementation. This approach is natural considering that the requirements stabilize in the second half of the implementation, the code freeze occurring toward the end. Stabilizing means that the most important code changes were performed, and only minor changes need to be performed, typically bug fixing, refactoring or last-minute changes.

The DM starts with a set of requirements in what concerns the business processes, the data and configuration. Each change to these requirements equate with overwork that need to be performed. Typically, this happens only at entity level, however there can be changes that have impact for the whole or important parts of the migration. Additionally, after each set of changes another dry-run needs to be performed in order test the changes. Therefore, to minimize the volume of rework a DM needs a stable environment – in other words a stabile data model, configuration and requirements.

Thus, the conceptualizing of the migration including the prototyping can start in the first half of the implementation or, for long-running projects, even in the second half of the implementation. Performing the DM without interruptions assures an optimal use and planning of the resources – the resources are continuously working on the project, they are focused toward the end.

On the other side, the accumulation of the activities toward the end can easily lead to problems in what concerns resources’ availability. This type of approach needs a good planning, otherwise the project runs into the risk of having the Go-Live delayed until the stability of the DM is assured, or of going Live with data that don’t have the expected quality. These risks can be alleviated by adding a puffer to DM’s timeline, or by considering one of the other two approaches.

This approach minimizes the various types of waste associated with software projects, and thus the costs associated with waste.

Right-Skewed Planning

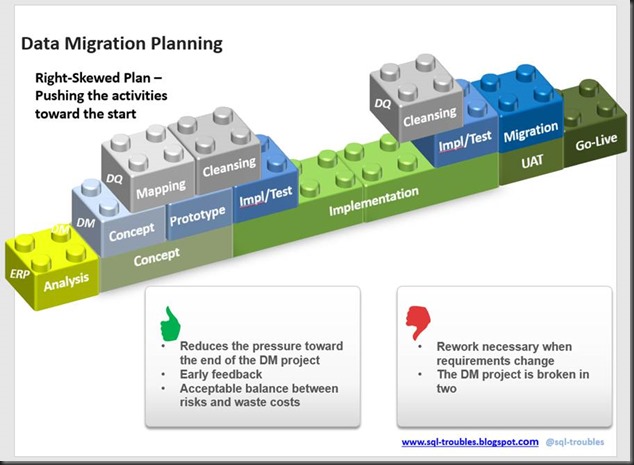

To release the pressure existing toward the end of the project, some of the DM activities can be performed toward the beginning of the project – the conceptualization and prototyping, as well the data mapping. One can in theory build also an important part of the DM for the standard functionality, following to address the changes in data model, processes and configuration in subsequent iterations. This could involve a higher volume of rework and more dry-runs, however this depends on the complexity and number of the changes. If a small number of customizations are expected, then this approach may be the best approach. Even in the case of many customization this approach might be something to consider, however the DM costs increase with the number of customization made, and in certain contexts the increase can be exponential.

This approach pushes some of the costs toward the beginning of the implementation, and this can have positive as well negative aspects. For example, it is well-known that ERP implementations involve cost overruns. With this approach the DM costs are assured toward the beginning and one can better get a hold of the budget, at least in theory. As negative aspect could be considered the cases in which an ERP implementation is stopped toward the middle of the project, the incurred costs being thus higher. In the end the main cost-driver are the volume of customizations.

Breaking a DM in two can have several other negative aspects. The data cleaning needs to be broken eventually as well, most probably in the second phase more data enrichment activities need to be considered.

The resources that worked on the first phase might not be available for the second phase. An adequate knowledge transfer might be hard to make, so the second team might need good documentation or time to understand the solution. This can lead to other type of behavior, e.g. rewriting unnecessarily the code, the push for a redesign, and so on.

As the environment stabilizes much later, there is the risk that an important part of the migration need to be reworked/redesigned. In extreme cases might be needed to start from zero. The chances for this to happen are small, though such a case can occur. Probably some of the code, transformation can be reused, though this depends from case to case. Without knowing implementation’s details it’s difficult to estimate the chances for something like this to happen. Sometimes is enough to invalidate a premise considered in design phase. Usually the interplay between several new requirements lead to redesign.

Uniform-distributed Plan

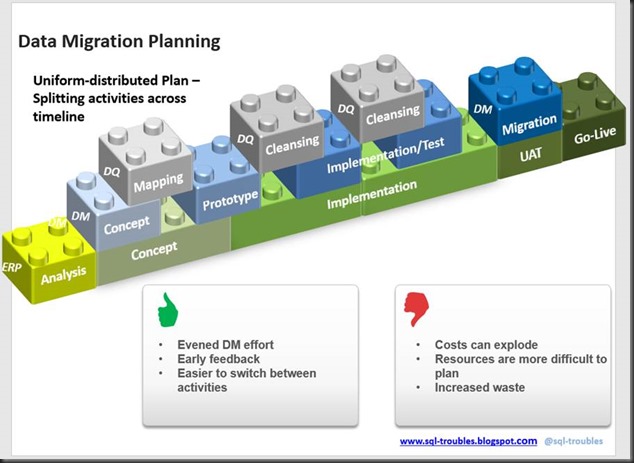

To alleviate the risks from the first two approaches, some of the activities could be uniformly distributed over the whole duration of the ERP implementation. This approach works well when same resources from the vendor side are involved in activities from all the three layers, the nature of the tasks allowing them to work continuously in the project. For example, the consultants working on the DM concept are helping on the mapping of the attributes, as well on data cleansing. When the work on multiple activities isn’t possible then the vendor(s) more likely will have problems in assigning resources to the project. Either the same resources will be assigned for big parts from the projects, incurring thus higher costs, or the resources will be replaced by others, additional learning being involved. In either case the costs are higher.

One of the main dangers of this approach is that certain activities will expand taking the time available, incurring thus higher costs. When the Implementation time is much higher than DM’s duration, the distance between DM’s phases can increase dramatically, being almost impossible to manage resources adequately. Keeping the metaphor of the Lego pieces, it will be thus also more difficult to build a structure on which an edifice can be built. With proper planning and adequate use of resources and knowledge the empty spaces can be incorporated in the structure for project’s advantage.

Even if this approach attempts to even the DM effort over the whole duration of the ERP implementation, performing the activities too early, before requirements stabilized can have an adverse effect.

Personal Approach

Looking back at the projects I worked on, I think I used a hybrid between the 3 approaches. The DM was planed backwards from the Go Live, however the first draft of the DM concept and prototyping was performed at the beginning of the implementation. This assured that the technical solution was working. Being involved in the creation of the data mappings as well in data cleansing, the jumps between activities allowed me to smoothly switch between the various activities, however toward the end of the project this became a bottleneck, the activities being harder to synchronize, and the volume of work could be addressed at that time only with overtime.

With a few exceptions I worked mainly alone on the technical activities, being responsible for the data mappings, design, prototyping, implementation, testing, protocolling, and execution of the DM. I think that more resources would have removed some of the burden but made the planning more complicated and the synchronization even harder. Probably a team of 2-3 people that could cover these activities would provide the optimum balance between costs, effort and quality.

Conclusion

I suppose there is no best solution that will work for all. The three approaches are more an attempt to highlight some of the extreme usages of planning. In an ERP implementation there are so many factors, so many chances for a decision to be an opportunity or a threat. My advice – ponder the various aspects/constraints, choose an approach, and adjust it as the project advances.

Previous Post <<||>> Next Post